The Intel 4004 chip is 45 years old. A bit of IT history

It is already hard to imagine human life without modern electronics. Of course, there are still many places where modern technology is unheard of, let alone used. Even so, the vast majority of the world's population is connected in one way or another with electronics, which has become an integral part of our life and work.

Since ancient times, people have used various devices to make production processes more efficient or to make their own lives more comfortable. The real breakthrough came in the late 1940s, when the transistor was invented. Bipolar transistors came first and are still in use today. They were followed by MOS (metal-oxide-semiconductor) transistors.

The first MOS transistors were more expensive and less reliable than their bipolar "relatives". But starting in 1964, integrated circuits based on MOS transistors began to be used in electronics. This eventually made it possible to cut the cost of manufacturing electronic devices and to shrink gadgets and systems significantly while also reducing their power consumption. Over time, integrated circuits became more and more complex, replacing large blocks of discrete transistors, which allowed electronic devices to become smaller still.

By the end of the 1960s, integrated circuits with what was then a large number of logic gates (100 or more) began to spread. This gave computer designers new building blocks. Developers of electronic computers recognized relatively quickly that increasing the density of transistors on a chip would eventually make it possible to build a computer processor as a single chip. Initially, MOS integrated circuits were used in terminals and calculators, and developers of on-board systems for passenger and military vehicles began to adopt them as well.

Key moment

Today, most electronics experts agree that a qualitatively new stage in the development of electronics began in 1971, when Intel's 4-bit 4004 processor appeared, later followed by the 8-bit 8008. The 4004 came about after a small Japanese company called Nippon Calculating Machine Ltd. (later Busicom Corp.) ordered a set of 12 chips from Intel. The company needed these chips for its calculators, and their logic design had been developed by one of the customer's own employees. At that time, a new set of chips performing highly specialized functions was developed for each device.

While working on the order, Marcian Edward Hoff proposed reducing the number of chips in the Japanese company's new device by introducing a central processing unit. As the engineer conceived it, this unit was to become the data processing center and perform the arithmetic and logic functions. The processor was supposed to replace several chips at once. The management of both companies approved the idea. In the fall of 1969, with the help of Stanley Mazor, Hoff proposed a new architecture in which the number of chips was reduced to only 4. The proposed elements included a 4-bit central processing unit, ROM and RAM.

The processor itself was designed by Federico Faggin, an Italian physicist who became the chief designer of the MCS-4 family at Intel. Thanks to his knowledge of MOS technology, he was able to turn Hoff's idea into a working processor. Incidentally, he had also developed the world's first commercial integrated circuit to use silicon-gate technology. It was called the Fairchild 3708.

While at Intel, Faggin created a new method for designing arbitrary logic systems. He was assisted in this work by Masatoshi Shima, who was an engineer at Busicom at the time. Faggin and Shima later developed the Zilog Z80 microprocessor, which, by the way, is still being produced today.

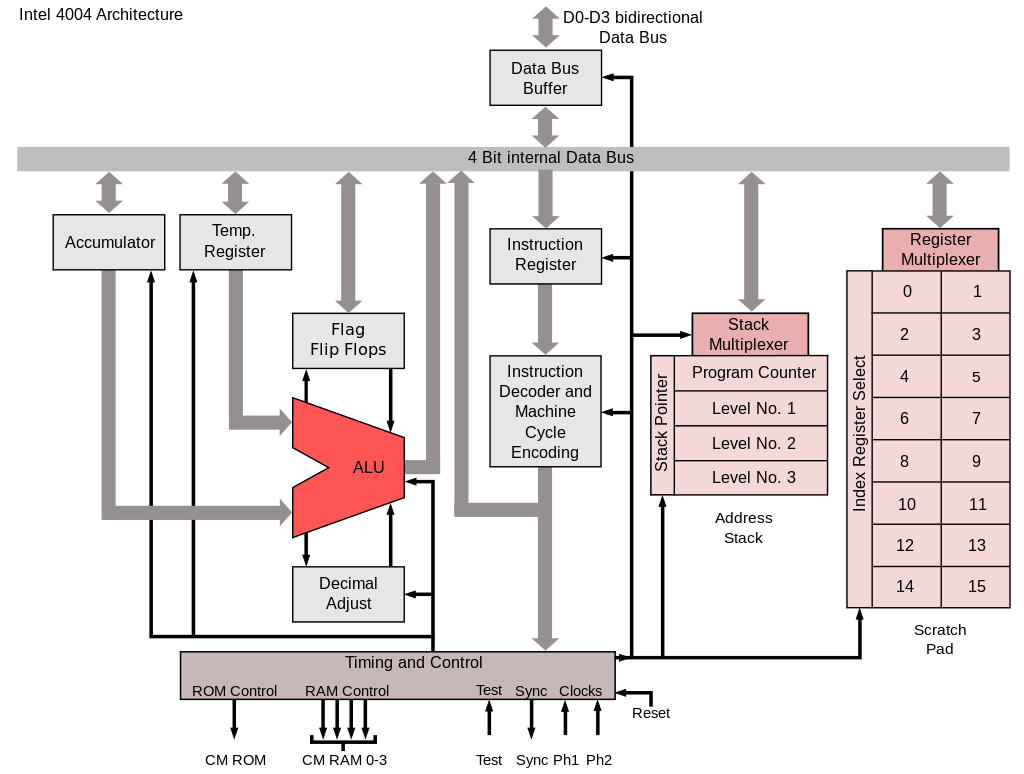

Intel 4004 processor architecture

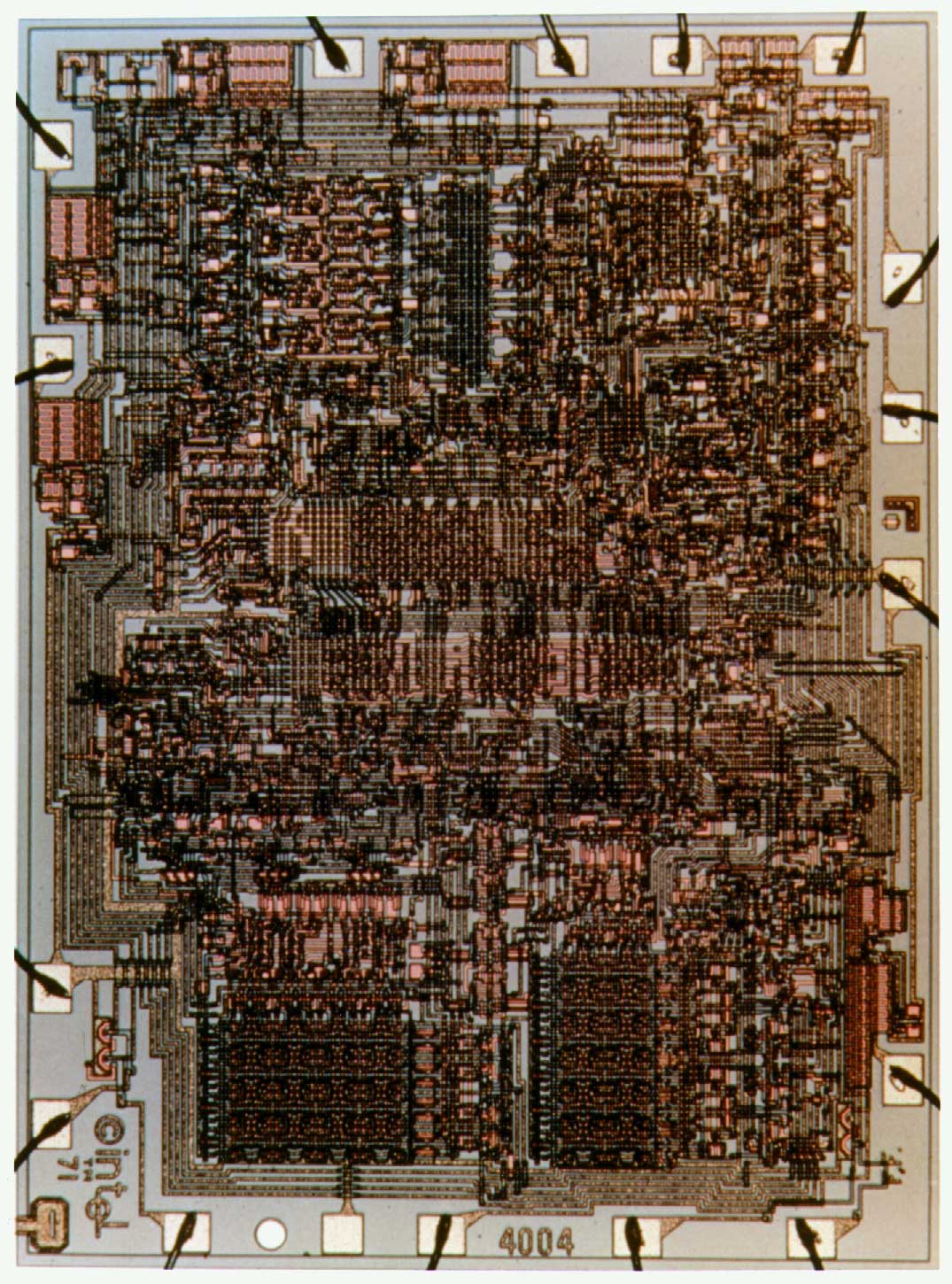

The key event took place on November 15, 1971. On that date Intel introduced its first microprocessor, the 4004, with an announcement in Electronic News magazine. The chip cost $200 at the time. Almost all the functions of a mainframe processor were implemented in a single chip.

Processor Specifications:

- Date of appearance: November 15, 1971

- Number of transistors: 2300

- Die area: 12 mm²

- Manufacturing process: 10 µm (P-channel silicon-gate MOS technology)

- Clock frequency: 740 kHz (more precisely, from 500 to 740.74 kHz, since the clock period ranges from 2 to 1.35 µs; the instruction rate at the fastest clock is about 92.6 kHz)

- Register width: 4 bits

- Number of registers: 16 (the sixteen 4-bit registers can also be used as eight 8-bit pairs)

- Number of ports: 16 4-bit input and 16 4-bit output

- Data bus width: 4 bits

- Address bus width: 12 bits

- Harvard architecture

- Stack: internal 3-level

- Instruction memory (ROM): 4 kilobytes (32768 bits)

- Addressable memory (RAM): 640 bytes (5120 bits)

- Number of instructions: 46 (of which 41 are 8-bit and 5 are 16-bit)

- Instruction cycle: 10.8 microseconds

- Supply voltage: -15 V (pMOS)

- Operating temperature: from 0 to +70 °C

- Storage and operation conditions: from -40 to +85 °C

- Socket: DIP16 (the chip was either soldered directly onto the printed circuit board or installed in a socket)

- Package: 16-pin DIP (one plastic and three ceramic variants: C4004 (white ceramic with gray stripes), C4004 (white ceramic), D4004 (black and gray ceramic), P4004 (black plastic))

- Delivery type: separately and in MCS-4 kits (ROM, RAM, I/O, CPU)
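Several figures in the list above follow from one another, and a short Python sketch can cross-check them. It is only an illustration: the variable and function names are made up for this example, and the value of 8 clock periods per instruction cycle is not stated in the list but inferred from it (10.8 µs divided by 1.35 µs).

```python
# Cross-check of figures quoted in the specification list.
# CLOCKS_PER_CYCLE is inferred from the list (10.8 µs / 1.35 µs), not stated in it.
CLOCKS_PER_CYCLE = 8


def period_us_to_khz(period_us: float) -> float:
    """Convert a period in microseconds to a frequency in kilohertz."""
    return 1_000.0 / period_us


def bytes_to_bits(size_bytes: int) -> int:
    """Convert a memory size in bytes to bits."""
    return size_bytes * 8


# Clock: the period ranges from 2 µs (slowest) down to 1.35 µs (fastest).
min_clock_khz = period_us_to_khz(2.0)
max_clock_khz = period_us_to_khz(1.35)

# Instruction cycle at the fastest clock, and the matching instruction rate.
instruction_cycle_us = 1.35 * CLOCKS_PER_CYCLE
instruction_rate_khz = period_us_to_khz(instruction_cycle_us)

# Memory: a 12-bit address bus reaches 2**12 byte-wide ROM locations.
rom_bytes = 2 ** 12
rom_bits = bytes_to_bits(rom_bytes)
ram_bits = bytes_to_bits(640)

# Registers: sixteen 4-bit registers hold the same bits as eight 8-bit pairs.
register_pairs = (16 * 4) // 8

if __name__ == "__main__":
    print(f"clock range: {min_clock_khz:.0f} to {max_clock_khz:.2f} kHz")
    print(f"instruction cycle: {instruction_cycle_us:.1f} µs")
    print(f"instruction rate: {instruction_rate_khz:.1f} kHz")
    print(f"ROM: {rom_bytes} bytes = {rom_bits} bits")
    print(f"RAM: 640 bytes = {ram_bits} bits")
    print(f"8-bit register pairs: {register_pairs}")
```

Under that assumption, the clock range, the 10.8 µs instruction cycle, the 92.6 kHz instruction rate and the memory sizes in bits all agree with each other.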

The 4004 itself never became very popular. It was the 8080, which can be called the "great-grandson" of the 4004, that came into widespread use.

Calculators and computers

In 1971, Intel had competitors. For example, Mostek, a company that developed semiconductors and devices based on them, created the world's first "calculator on a chip", the MK6010.

In June 1971, Texas Instruments launched a media campaign highlighting the benefits of its own processor, the TMX 1795. At the time, the Datapoint 2200 based on the TMX 1795 was described as "a powerful computer that outperforms the original", meaning that its capabilities were far superior to those of the original Datapoint 2200 built from bipolar chips. But CTC (Computer Terminal Corporation, the maker of the Datapoint 2200) tested the new chip and rejected it, continuing to use bipolar chips. Intel was still working on its own processor.

After some time, TI concluded that there was no demand for the TMX 1795 (later renamed the TMC 1795), ended the media campaign and stopped production of the chip. Yet it was this chip that went down in history as the first 8-bit processor.

In 1971, CTC lost interest in a single-chip processor for its systems and transferred all rights to the new chip to Intel. Intel took the opportunity and continued to develop the 8008, successfully offering it to a number of other companies. In April 1972, it was able to deliver hundreds of thousands of these processors. Two years later, the 8008 was replaced by the new 8080, which was followed by the 8086 and the start of the x86 era. So when working on a powerful PC or laptop today, it is worth remembering that the architecture of such a system was developed many years ago for the Datapoint 2200 programmable terminal.

At that time Intel used a more advanced technology, which gave its processors an edge. They were fast and relatively economical in terms of power consumption. In addition, the transistor density of Intel's chips was higher than that of the TI chip, which made it possible to reduce the size of the processors. Marketing also played an important role: Intel made a number of successful moves in this area as well, which ensured the popularity of the company's products.

Be that as it may, the question of who was first in developing the microprocessor is far less clear-cut than is commonly believed. There were several pioneers at once, but only one of their designs later became popular. It is the modernized "descendants" of that design that we all deal with today, in the 21st century.
