Conceptual data model of a corporate data warehouse. The enterprise data model

The purpose of the lecture

After studying the material of this lecture, you will know:

- what an enterprise data model is;

- how to convert an enterprise data model into a data warehouse model;

- the essential elements of an enterprise data model;

- the presentation layers of the enterprise data model;

- the algorithm for converting an enterprise data model into a multidimensional data warehouse model;

and you will learn to:

- develop data warehouse models based on an organization's enterprise data model;

- develop a star schema using CASE tools;

- partition the tables of a multidimensional model using CASE tools.

Enterprise data model

Introduction

The core of any data warehouse is its data model. Without a data model, it is very difficult to organize the data in a data warehouse. Data warehouse (DW) developers must therefore spend time and effort developing such a model. Responsibility for developing the DW model falls to the data warehouse designer.

Compared with the design of OLTP systems, the methodology for designing a data warehouse has a number of distinctive features, because the warehouse's data structures are oriented towards analysis and information support for decision-making. The data model of the data warehouse should support these tasks effectively.

The starting point in the design of a data warehouse can be the so-called enterprise data model (also called the corporate data model; EDM), which is created when designing an organization's OLTP systems. In designing an enterprise data model, the usual aim is to create, on the basis of business operations, a data structure that collects and synthesizes all the information needs of the organization.

The enterprise data model thus contains the information needed to build a DW model. If such a model exists in the organization, the data warehouse designer can therefore begin by solving the problem of transforming the enterprise data model into a DW model.

Enterprise data model

How is the problem of converting the enterprise data model into a DW model solved? First, the model must be available, i.e. the enterprise data model must have been built and documented. Second, you need to understand which parts of this model should be transformed into the DW model, and how.

Let us clarify the concept of an enterprise data model. A corporate data model is understood as a multi-level, structured description of the organization's subject areas, the data structures of those subject areas, business processes and business procedures, the data flows adopted in the organization, state diagrams, data-process matrices and other model representations used in the organization's activities. In a broad sense, therefore, an enterprise data model is a set of models at various levels that characterize (model at some level of abstraction) the activities of the organization. The content of the corporate model thus depends directly on which model structures a given organization has included in it.

The main elements of an enterprise data model are:

- description of the subject areas of the organization (definition of areas of activity);

- relationships between the subject areas defined above;

- information data model (ERD-model or entity-relationship model);

- for each subject area, a description of:

- entity keys;

- entity attributes;

- subtypes and supertypes;

- relationships between entities;

- attribute groupings;

- relationships between subject areas;

- functional model or business process model;

- data flow diagrams;

- state diagrams;

- other models.

The enterprise data model thus contains entities, attributes, and relationships that represent the information needs of the organization. Fig. 16.1 shows the main elements of the enterprise data model.

Presentation layers of the enterprise data model

The enterprise data model is subdivided into subject areas, which are groups of entities that support specific business needs. Some subject areas cover specific business functions, such as contract management, while others group entities that describe products or services.

Each logical model must correspond to an existing subject area of the enterprise data model. If a logical model does not meet this requirement, a model defining that subject area must be added.

The enterprise data model usually has several levels of presentation. The high level of the enterprise data model is a description of the organization's main subject areas and their relationships at the entity level. Fig. 16.2 shows a fragment of the top-level enterprise data model.

Fig. 16.2.

The diagram in the figure shows four subject areas: "Customer" (Customer), "Account" (Account), "Order" (Order) and "Product" (Product). Typically, the top level of the model shows only direct relationships between subject areas, which capture, for example, the following fact: the customer pays the invoice for an order of products. Detailed information and indirect relationships are not shown at this level of the corporate model.

The next, middle level (mid level) of the enterprise data model shows detailed information about the objects of each subject area: entity keys and attributes, relationships, subtypes and supertypes, and so on. For each subject area of the top-level model there is one middle-level model. Fig. 16.3 shows the middle-level presentation of the corporate model for a fragment of the "Order" subject area.

Fig. 16.3 shows that the "Order" (Order) subject area includes several entities, defined through their attributes, and the relationships between them. The model can answer questions such as when an order was placed, who placed it, who shipped it, who receives it, and a number of others. The diagram also shows that this organization has two types of orders: orders for an advertising campaign (Commercial) and retail orders (Retail).
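The supertype/subtype structure described for the "Order" subject area can be sketched in code. This is only an illustration, not a transcription of Fig. 16.3: the attribute names (`order_date`, `ordered_by`, `shipped_by`, `received_by`, `campaign_name`, `store_name`) are assumptions chosen to match the questions listed above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Order:
    """Supertype: attributes shared by every kind of order (names are hypothetical)."""
    order_id: int
    order_date: date
    ordered_by: str
    shipped_by: str
    received_by: str


@dataclass
class CommercialOrder(Order):
    """Subtype: an order for an advertising campaign."""
    campaign_name: str  # hypothetical subtype-specific attribute


@dataclass
class RetailOrder(Order):
    """Subtype: a retail order."""
    store_name: str  # hypothetical subtype-specific attribute


def order_kind(order: Order) -> str:
    """Return the subtype discriminator for an order instance."""
    if isinstance(order, CommercialOrder):
        return "Commercial"
    if isinstance(order, RetailOrder):
        return "Retail"
    raise ValueError("unknown order subtype")
```

Each subtype inherits the key and common attributes of the supertype and adds only what is specific to it, which is how a middle-level model typically records subtypes.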

Note that an enterprise data model can represent various aspects of the organization's activities, with varying degrees of detail and completeness. If the corporate model represents all aspects of the organization's activities, it is also called the organization data model (enterprise data model).

From the point of view of data warehouse design, an important factor in deciding whether to derive the data warehouse model from the enterprise data model is how complete the enterprise data model is.

An organization's enterprise data model is evolutionary, i.e. it is constantly being developed and improved. Some of its subject areas may be well developed, while work on others may not yet have begun. If a fragment of a subject area has not been worked out in the enterprise data model, the model cannot serve as a starting point for designing the data warehouse for that fragment.

The incompleteness of the corporate model can be compensated for in DW design as follows. Since the development of a data warehouse is usually divided into a sequence of stages over time, its design can be synchronized with the completion of individual fragments of the organization's enterprise data model.

The lowest presentation layer of the corporate data model shows the physical characteristics of the database objects that correspond to the logical data model of the middle presentation layer of the enterprise data model.

Increasingly, IT professionals are turning their attention to data management solutions based on industry standard data models and business decision templates. Ready-to-load complex physical data models and business intelligence reports for specific areas of activity allow you to unify the information component of the enterprise and significantly speed up business processes. Solution templates allow service providers to take advantage of non-standard information hidden in existing systems, thereby reducing project timelines, costs and risks. For example, real projects show that data model and business decision templates can reduce development effort by 50%.

An industry logical model is a domain-specific, integrated and logically structured view of all the information that must be in a corporate data warehouse to answer both strategic and tactical business questions. The main purpose of models is to facilitate orientation in the data space and help in highlighting the details that are important for business development. In today's business environment, it is absolutely essential to have a clear understanding of the relationships between the various components and a good understanding of the big picture of the organization. Identification of all the details and relationships using models allows the most efficient use of time and tools for organizing the work of the company.

Data models are abstract models that describe how data is represented and accessed. Data models define data elements and relationships between them in a given area. A data model is a navigational tool for both business and IT professionals that uses a specific set of symbols and words to accurately explain a specific class of real information. This improves communication within the organization and thus creates a more flexible and stable application environment.

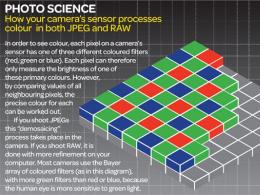

An example of the "GIS for authorities and local self-government" model.

Today, it is strategically important for software and service providers to be able to quickly respond to changes in the industry associated with technological innovations, the removal of government restrictions and the complexity of supply chains. Along with changes in the business model, the complexity and cost of the information technology needed to support the company's activities are growing. Data management is particularly difficult in an environment where corporate information systems, and the functional and business requirements placed on them, are constantly changing.

Industry data models are intended to facilitate and optimize this process and to bring the IT approach up to a modern level.

Industry data models from Esri

Data models for the Esri ArcGIS platform are working templates for use in GIS projects and for creating data structures for various application areas. Building a data model involves creating a conceptual design, a logical structure, and a physical structure that can then be used to build a personal or corporate geodatabase. ArcGIS provides tools for creating and managing a database schema, and data model templates are used to quickly start GIS projects for various applications and industries. Esri, along with the user community, has spent a significant amount of time developing a number of templates that can help you quickly start designing an enterprise geodatabase. These projects are described and documented at support.esri.com/datamodels . Below, in the order in which they appear on this site, are the semantic translations of Esri's industry model names:

- Address register

- Agriculture

- Meteorology

- Basic Spatial Data

- Biodiversity

- Inner space of buildings

- Greenhouse gas accounting

- Maintenance of administrative boundaries

- Military establishment. Intelligence service

- Energy (including the new ArcGIS MultiSpeak protocol)

- Ecological buildings

- Ministry of Emergency Situations. Fire protection

- Forest cadastre

- Forestry

- Geology

- National level GIS (e-gov)

- Groundwater and waste water

- Healthcare

- Archeology and protection of memorial sites

- National security

- Hydrology

- International Hydrographic Organization (IHO). S-57 format for ENC

- Irrigation

- Land Registry

- Municipal government

- Maritime navigation

- State cadastre

- Oil and gas structures

- Pipelines

- Raster stores

- Bathymetry, seabed topography

- Telecommunications

- Transport

- Plumbing, sewerage, utilities

These models contain all the necessary features of the industry standard, namely:

- are freely available;

- are not tied to the technology of the "selected" manufacturer;

- created as a result of the implementation of real projects;

- created with the participation of industry experts;

- designed to provide information interaction between various products and technologies;

- do not contradict other standards and regulatory documents;

- used in implemented projects around the world;

- are designed to work with information throughout the life cycle of the system being created, and not the project itself;

- expandable to the needs of the customer without losing compatibility with other projects and / or models;

- accompanied by additional materials and examples;

- used in guidelines and technical materials of various industrial companies;

- have a large community of participants, with access to the community open to everyone;

- are referenced in a large number of publications in recent years.

Esri is part of an expert group of independent bodies that recommend various industry models for use, such as PODS (Pipeline Open Data Standards, an open standard for the oil and gas industry; PODS is currently implemented as the PODS Esri Spatial 5.1.1 geodatabase) or the geodatabase (GDB) from ArcGIS for Aviation, which takes into account ICAO and FAA recommendations as well as the AIXM 5.0 navigation data exchange standard. In addition, there are recommended models that strictly adhere to existing industry standards, such as S-57 and ArcGIS for Maritime (marine and coastal features), as well as models that grew out of the work of Esri Professional Services and are de facto standards in their fields. For example, GIS for the Nation and Local Government have influenced the NSDI and INSPIRE standards, while Hydro and Groundwater (hydrology and groundwater) are actively used in the freely available professional package ArcHydro and in third-party commercial products. Esri also supports de facto standards such as NHDI. All proposed data models are documented and ready for use in enterprise IT processes. Accompanying materials for the models include:

- UML diagrams of entity relationships;

- data structures, domains, directories;

- ready-made geodatabase templates in ArcGIS GDB format;

- sample data and sample applications;

- examples of data loading scripts, examples of analysis utilities;

- reference books on the proposed data structure.

Esri summarizes its experience in building industry models in the form of books and localizes published materials. Esri CIS has localized and published the following books:

- Geospatial Service Oriented Architecture (SOA);

- Designing geodatabases for transport;

- Corporate geoinformation systems;

- GIS: new energy of electric and gas enterprises;

- Oil and gas digital map;

- Modeling our world. Esri Geodatabase Design Guide;

- Thinking about GIS. GIS planning: a guide for managers;

- Geographic information systems. Basics;

- GIS for administrative and economic management;

- Web GIS. Principles and application;

- Systems Design Strategies, 26th edition;

- 68 issues of the ArcReview magazine with publications by companies and users of GIS systems;

- ... and many other thematic notes and publications.

For example, the book "Modeling our world..." (translation) is a comprehensive guide and reference to GIS data modeling in general and to the geodatabase data model in particular. The book shows how to make the right data modeling decisions, which are involved in every aspect of a GIS project, from database design and data collection to spatial analysis and visualization. It describes in detail how to design a geographic database appropriate for the project, set up database functionality without programming, manage workflow in complex projects, model a variety of network structures such as river, transport or electricity networks, integrate satellite imagery into geographic analysis and mapping, and create 3D GIS data models. The book "Designing geodatabases for transportation" contains methodological approaches that have been tested on a large number of projects and comply fully with the legislative requirements of Europe and the United States, as well as with international standards. And the book "GIS: new energy of electric and gas enterprises" uses real-world examples to show the benefits that an enterprise GIS can bring to an energy supplier, including aspects such as customer service, network operation, and other business processes.

Some of the books, translated and original, published in Russian by Esri CIS and DATA+. They cover both conceptual issues related to GIS technology and many applied aspects of modeling and deploying GIS of various scales and purposes.

We will consider the use of industry models using version 3.0 of the BISDM (Building Interior Space Data Model) as an example. BISDM is a development of the more general BIM (Building Information Model) and is intended for use in the design, construction, operation and decommissioning of buildings and structures. Used in GIS software, it allows geodata to be exchanged effectively with other platforms. It belongs to the general class of FM tasks (management of an organization's infrastructure). The main advantages of the BISDM model are that it allows you to:

- organize the exchange of information in a heterogeneous environment according to uniform rules;

- get a "physical" embodiment of the BIM concept and the recommended rules for managing a construction project;

- maintain a single repository using GIS tools throughout the entire life cycle of the building (from design to decommissioning);

- coordinate the work of various specialists in the project;

- visualize the planned schedule and construction stages for all participants;

- give a preliminary estimate of the cost and construction time (4D and 5D data);

- control the progress of the project;

- ensure the quality operation of the building, including maintenance and repairs;

- become part of the asset management system, including the functions of analyzing the efficiency of space use (renting, storage facilities, employee management);

- calculate and manage the energy efficiency of the building;

- simulate the movement of human flows.

BISDM defines the rules for working with spatial data at the level of internal premises in a building, including the purpose and types of use, laid communications, installed equipment, accounting for repairs and maintenance, logging incidents, relationships with other company assets. The model helps to create a unified repository of geographic and non-geographic data. The experience of the world's leading companies was used to isolate entities and model at the GDB level (geodatabase) the spatial and logical relationships of all physical elements that form both the building itself and its interior. Following the principles of BISDM allows you to significantly simplify the tasks of integration with other systems. At the first stage, this is usually integration with CAD. Then, during the operation of the building, data exchange with ERP and EAM systems (SAP, TRIRIGA, Maximo, etc.) is used.

Visualization of BISDM structural elements using ArcGIS.

In the case of using BISDM, the customer / owner of the facility receives an end-to-end exchange of information from the idea of creating a facility to the development of a complete project, construction control with obtaining up-to-date information by the time the facility is put into operation, control of parameters during operation, and even during reconstruction or decommissioning of the facility. Following the BISDM paradigm, the GIS and the GDB created with its help become a common data repository for related systems. Often in the GDB there are data created and operated by third-party systems. This must be taken into account when designing the architecture of the system being created.

At a certain stage, the accumulated "critical mass" of information allows you to move to a new qualitative level. For example, upon completion of the design phase of a new building, it is possible to automatically visualize 3D survey models in GIS, compile a list of equipment to be installed, calculate the kilometers of engineering networks to be laid, perform a number of verifications, and even give a preliminary financial estimate of the project cost.

Once again, when BISDM and ArcGIS are used together, 3D models can be built automatically from the accumulated data, since the GDB contains a complete description of the object, including z-coordinates, floor assignment, types of element connections, equipment installation methods, materials, available paths for personnel movement, the functional purpose of each element, and so on. Note that after the initial import of all design materials into the BISDM GDB, additional content is needed for:

- placement of 3D models of objects and equipment at designated places;

- collecting information about the cost of materials and the procedure for their laying and installation;

- checking passability against the dimensions of non-standard equipment to be installed.

Using ArcGIS simplifies the import of additional 3D objects and reference data from external sources. The ArcGIS Data Interoperability module allows you to create procedures for importing such data and placing it correctly within the model. All formats used in the industry are supported, including IFC, AutoCAD Revit, Bentley MicroStation.

Industry data models from IBM

IBM provides a set of storage management tools and models for a variety of industries:

- IBM Banking and Financial Markets Data Warehouse (finance)

- IBM Banking Data Warehouse

- IBM Banking Process and Service Models

- IBM Health Plan Data Model (health)

- IBM Insurance Information Warehouse (insurance)

- IBM Insurance Process and Service Models

- IBM Retail Data Warehouse (retail)

- IBM Telecommunications Data Warehouse (telecommunications)

- InfoSphere Warehouse Pack:

- for Customer Insight (to understand customers)

- for Market and Campaign Insight (to understand the market and campaigns)

- for Supply Chain Insight (for understanding suppliers).

For example, the IBM Banking and Financial Markets Data Warehouse model is designed to address the specific data challenges of the banking industry, and IBM Banking Process and Service Models addresses processes and SOA (service-oriented architecture). The models offered for the telecommunications industry are IBM Information FrameWork (IFW) and IBM Telecommunications Data Warehouse (TDW). They help to significantly speed up the creation of analytical systems and to reduce the risks associated with developing business intelligence applications, managing corporate data and organizing data warehouses, taking into account the specifics of the telecommunications industry. IBM TDW capabilities cover the entire spectrum of the telecommunications market, from Internet providers and cable network operators offering wired and wireless telephony, data transmission and multimedia content, to multinational companies providing telephone, satellite, long-distance and international communication services, as well as organizations operating global networks. Today, TDW is used by large and small wireline and wireless communication providers worldwide.

InfoSphere Warehouse Pack for Customer Insight provides structured, easily implemented business content for a growing number of business projects and industries, including banking, insurance, finance, health insurance programs, telecommunications, retail and distribution. For business users, InfoSphere Warehouse Pack for Market and Campaign Insight helps maximize the effectiveness of market analysis and marketing campaigns through a step-by-step, business-specific development process. With InfoSphere Warehouse Pack for Supply Chain Insight, organizations can obtain current information on supply chain operations.

Esri's position within the IBM solution architecture.

Of particular note is IBM's approach to utilities and utility companies. To meet growing customer demands, utility companies need a more flexible architecture than they use today, as well as an industry-standard object model to facilitate the free exchange of information. This will improve the communication capabilities of energy companies, enabling them to communicate more cost-effectively, and give the new systems better visibility into all required resources, no matter where they are located within the organization. The basis for this approach is SOA (service-oriented architecture), a component model that maps the functions of departments to reusable services of various applications. The services of these components communicate through loosely coupled interfaces, hiding from the user the full complexity of the systems behind them. In this mode, enterprises can easily add new applications regardless of the software vendor, operating system, programming language, or other internal characteristics of the software. On the basis of SOA, the concept is implemented as SAFE (Solution Architecture for Energy), which enables the utility industry to gain a standards-based, holistic view of its infrastructure.

Esri ArcGIS® is a globally recognized software platform for geographic information systems (GIS) that supports the creation and management of digital assets for electric power, gas transmission, distribution, and telecommunications networks. ArcGIS allows you to conduct the most complete inventory of the components of the electrical distribution network, taking into account their spatial location. ArcGIS significantly extends the IBM SAFE architecture by providing the tools, applications, workflows, analytics, and information and integration capabilities needed to manage the smart grid. ArcGIS within IBM SAFE allows you to obtain information from various sources about infrastructure objects, assets, customers and employees with accurate data about their location, as well as to create, store and process geo-referenced information about enterprise assets (poles, pipelines, wires, transformers, cable ducts, etc.). ArcGIS within a SAFE infrastructure allows you to dynamically combine key business applications by combining data from GIS, SCADA, and customer service systems with external information such as traffic, weather conditions, or satellite imagery. Utilities use this combined information for a variety of purposes, from C.O.R. (the big picture of the operational environment) to site inspections, maintenance, network analysis and planning.

The information components of a power supply enterprise can be modeled using several levels, ranging from the lowest, physical level to the top and most complex level of business process logic. These layers can be integrated to meet typical industry requirements such as automated measurement logging and supervisory control and data acquisition (SCADA). By building the SAFE architecture, utility companies are making significant strides in advancing an industry-wide open object model called the Common Information Model (CIM) for Energy and Utilities. This model provides the necessary basis for moving many enterprises towards a service-oriented architecture, as it encourages the use of open standards for structuring data and objects. When all systems use the same objects, the confusion and inflexibility associated with different implementations of the same objects are reduced to a minimum. Thus, the definition of the "customer" object and other important business objects will be unified across all systems of the power supply company. With CIM, service providers and service consumers can share a common data structure, which makes it easier to outsource costly business components, since CIM establishes a common base on which to build information sharing.

Conclusion

Comprehensive industry data models provide companies with a single, integrated view of their business information. Many companies find it difficult to integrate their data, although this is a prerequisite for most enterprise-wide projects. According to a study by The Data Warehousing Institute (TDWI), more than 69% of organizations surveyed found integration to be a significant barrier to new application adoption. On the contrary, the implementation of data integration brings the company tangible income and increased efficiency.

A well-built model uniquely defines the meaning of the data, which in this case is structured data (as opposed to unstructured data such as an image, binary file, or text, where the meaning can be ambiguous). The most effective industry models are offered by professional vendors, including Esri and IBM. The high returns from using their models come from their significant level of detail and accuracy. They usually contain many data attributes. In addition, experts from Esri and IBM not only have extensive modeling experience, but are also well versed in building models for a particular industry.

Zaitsev S.L., Ph.D.

Repeating groups

Repeating groups are attributes for which a single entity instance can have more than one value. For example, a person may have more than one skill. If the business requirements call for recording the skill level for each skill, and each person can have at most two skills, we can create the entity shown in Fig. 1.6. Here is the PERSON entity with two attributes to store skills and a skill level for each.

Fig. 1.6. This example uses repeating groups.

The problem with repeating groups is that we can't know exactly how many skills a person might have. In real life, some people have one skill, some have several, and some have none yet. Figure 1.7 shows the model reduced to first normal form. Notice the added Skill ID, which uniquely identifies each SKILL.

Fig. 1.7. Model reduced to first normal form.
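As a rough sketch of this step, the function below flattens PERSON records with two fixed skill slots into one row per person and skill. The column names (`skill_1`, `skill_1_level`, and so on) and the `skill_ids` lookup are hypothetical, introduced only for illustration; the figures themselves are not reproduced here.

```python
def to_first_normal_form(wide_rows, skill_ids):
    """Turn repeating skill columns into (person_id, skill_id, skill_name, skill_level) rows.

    wide_rows: list of dicts with hypothetical keys person_id, skill_1,
               skill_1_level, skill_2, skill_2_level.
    skill_ids: dict mapping a skill name to its Skill ID (assumed to exist).
    """
    rows = []
    for record in wide_rows:
        for slot in (1, 2):  # the repeating group had exactly two slots
            name = record.get(f"skill_{slot}")
            if name is None:
                continue  # an empty slot produces no row at all
            level = record.get(f"skill_{slot}_level")
            rows.append((record["person_id"], skill_ids[name], name, level))
    return rows
```

After this transformation a person with no skills simply has no rows, and a person with a third skill needs only another row rather than another column.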

One fact in one place

If the same attribute is present in more than one entity and is not a foreign key, then that attribute is considered redundant. The logical model should not contain redundant data.

Redundancy requires additional space, but while memory efficiency is important, the real problem lies elsewhere. Guaranteed synchronization of redundant data comes with an overhead, and you always run the risk of conflicting values.

In the previous example, SKILL depends on both Person ID and Skill ID. This means that a SKILL cannot exist until a PERSON who has that skill appears. It also makes it harder to change a Skill Name: you need to find every Skill Name entry and change it for each PERSON who has that skill.

Figure 1.8 shows the model in second normal form. Note that the SKILL entity has been added and the Skill Name attribute has been moved to it. The skill level remains at the intersection of PERSON and SKILL.

Fig. 1.8. In second normal form, the repeating group is moved to another entity. This provides the flexibility to add as many Skills as needed and change the Skill Name or Skill Description in one place.
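The benefit of changing a Skill Name in one place can be tried out with an in-memory SQLite database. The sketch below is an assumption about how the second-normal-form model might be laid out as tables; the table and column names (`person`, `skill`, `person_skill`) and the sample rows are hypothetical.

```python
import sqlite3


def build_2nf_demo():
    """Create PERSON, SKILL and their intersection table with a little sample data."""
    con = sqlite3.connect(":memory:")
    con.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce FKs by default
    con.executescript("""
        CREATE TABLE person (person_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE skill  (skill_id INTEGER PRIMARY KEY, skill_name TEXT NOT NULL);
        CREATE TABLE person_skill (
            person_id   INTEGER NOT NULL REFERENCES person(person_id),
            skill_id    INTEGER NOT NULL REFERENCES skill(skill_id),
            skill_level TEXT,
            PRIMARY KEY (person_id, skill_id)
        );
    """)
    con.executemany("INSERT INTO person VALUES (?, ?)", [(1, "Ann"), (2, "Bob")])
    con.executemany("INSERT INTO skill VALUES (?, ?)", [(10, "SQL")])
    con.executemany("INSERT INTO person_skill VALUES (?, ?, ?)",
                    [(1, 10, "expert"), (2, 10, "novice")])
    return con


def rename_skill(con, skill_id, new_name):
    """The Skill Name is stored once, so a rename touches exactly one row."""
    cur = con.execute("UPDATE skill SET skill_name = ? WHERE skill_id = ?",
                      (new_name, skill_id))
    return cur.rowcount


def skills_of(con, person_id):
    """Return (skill_name, skill_level) pairs for one person."""
    cur = con.execute("""
        SELECT s.skill_name, ps.skill_level
        FROM person_skill AS ps
        JOIN skill AS s ON s.skill_id = ps.skill_id
        WHERE ps.person_id = ?
        ORDER BY s.skill_name
    """, (person_id,))
    return cur.fetchall()
```

Calling `rename_skill(con, 10, "Structured Query Language")` updates a single row, and both people see the new name through the join.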

Each attribute depends on a key

Each attribute of an entity must depend on the primary key of that entity. In the previous example, School name and Geographic area are present in the PERSON table but do not describe a person. To achieve third normal form, these attributes must be moved to an entity in which they depend on the key. Figure 1.9 shows the model in third normal form.

Fig. 1.9. In third normal form, School name and Geographic area are moved to an entity in which their values depend on the key.

Many-to-Many Relationships

Many-to-many relationships reflect the reality of the environment. Note that in Figure 1.9 there is a many-to-many relationship between PERSON and SCHOOL. The relationship accurately reflects the fact that a PERSON can study at many SCHOOLS and that many PERSONS can study at one SCHOOL. To achieve fourth normal form, an associative entity is created that eliminates the many-to-many relationship by generating a separate entry for each unique combination of school and person. Figure 1.10 shows the model in fourth normal form.

Fig. 1.10. In fourth normal form, the many-to-many relationship between PERSON and SCHOOL is resolved by introducing an associative entity, in which a separate entry is created for each unique combination of SCHOOL and PERSON.
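
A minimal sketch of such an associative entity, again using an in-memory SQLite database. The table `person_school` and all column names are assumptions made for the example; the composite primary key is what guarantees one entry per unique combination of person and school.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE person (person_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE school (
    school_id         INTEGER PRIMARY KEY,
    school_name       TEXT NOT NULL,
    geographic_region TEXT
);
-- Associative entity: one row per unique (person, school) combination.
CREATE TABLE person_school (
    person_id INTEGER NOT NULL REFERENCES person(person_id),
    school_id INTEGER NOT NULL REFERENCES school(school_id),
    PRIMARY KEY (person_id, school_id)
);
""")
conn.executemany("INSERT INTO person VALUES (?, ?)", [(1, "Ann"), (2, "Bob")])
conn.executemany(
    "INSERT INTO school VALUES (?, ?, ?)",
    [(1, "North College", "North"), (2, "City Institute", "Center")],
)
conn.executemany("INSERT INTO person_school VALUES (?, ?)", [(1, 1), (1, 2), (2, 1)])

# A second row for the same combination violates the composite primary key.
try:
    conn.execute("INSERT INTO person_school VALUES (1, 1)")
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

schools_of_ann = [
    row[0]
    for row in conn.execute(
        "SELECT school_id FROM person_school WHERE person_id = 1 ORDER BY school_id"
    )
]
```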

Formal definitions of normal forms

The following definitions of normal forms may seem intimidating. Think of them simply as formulas for achieving normalization. Normal forms are based on relational algebra and can be interpreted as mathematical transformations. Although this book does not cover a detailed discussion of normal forms, modellers are encouraged to delve deeper into the subject.

Given a relation R, attribute Y of R is functionally dependent on attribute X of R, written R.X → R.Y (read "R.X functionally determines R.Y"), if and only if each X value in R is associated with exactly one Y value in R (at any given time). Attributes X and Y may be composite (Date C. J. An Introduction to Database Systems. 6th edition. Williams, 1999, 848 pp.).
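
The definition can be checked mechanically against sample data. The sketch below is an illustration only: the helper name `holds_fd` and the sample rows are hypothetical, and a check on sample rows can only show that a dependency is not violated by that data, not that it holds as a business rule.

```python
def holds_fd(rows, x, y):
    """Return True if the functional dependency X -> Y holds in the given rows.

    rows: list of dicts (the tuples of the relation); x, y: lists of attribute names.
    """
    seen = {}
    for row in rows:
        x_val = tuple(row[a] for a in x)
        y_val = tuple(row[a] for a in y)
        # Each X value may be associated with exactly one Y value.
        if seen.setdefault(x_val, y_val) != y_val:
            return False
    return True


rows = [
    {"person_id": 1, "skill_id": 10, "skill_name": "SQL", "level": 3},
    {"person_id": 2, "skill_id": 10, "skill_name": "SQL", "level": 5},
    {"person_id": 2, "skill_id": 20, "skill_name": "UML", "level": 1},
]

name_depends_on_skill = holds_fd(rows, ["skill_id"], ["skill_name"])
level_depends_on_skill = holds_fd(rows, ["skill_id"], ["level"])
level_depends_on_key = holds_fd(rows, ["person_id", "skill_id"], ["level"])
```

Here the skill name depends on the skill identifier alone, while the level depends only on the full composite key.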

A relation R is in first normal form (1NF) if and only if all its domains contain only atomic values (Date, ibid.).

A relation R is in second normal form (2NF) if and only if it is in 1NF and every non-key attribute is fully dependent on the primary key (Date, ibid.).

A relation R is in third normal form (3NF) if and only if it is in 2NF and every non-key attribute is non-transitively dependent on the primary key (Date, ibid.).

A relation R is in Boyce-Codd normal form (BCNF) if and only if every determinant is a candidate key.
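
For a relation whose functional dependencies are listed explicitly, the BCNF condition can be tested with an attribute closure. The sketch below uses the common equivalent formulation "the left side of every non-trivial dependency is a superkey"; the function names and the sample dependencies are assumptions made for illustration.

```python
def closure(attrs, fds):
    """Closure of a set of attributes under FDs given as (lhs, rhs) pairs of frozensets."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def is_bcnf(all_attrs, fds):
    """True if the left side of every non-trivial FD determines all attributes."""
    for lhs, rhs in fds:
        if rhs <= lhs:
            continue  # trivial dependency, always allowed
        if closure(lhs, fds) != set(all_attrs):
            return False
    return True


# Hypothetical relation before decomposition: skill_name depends on part of the key.
wide_attrs = {"person_id", "skill_id", "skill_name", "level"}
wide_fds = [
    (frozenset({"person_id", "skill_id"}), frozenset({"level"})),
    (frozenset({"skill_id"}), frozenset({"skill_name"})),
]

# The same facts after moving skill_name to its own relation.
skill_attrs = {"skill_id", "skill_name"}
skill_fds = [(frozenset({"skill_id"}), frozenset({"skill_name"}))]

wide_is_bcnf = is_bcnf(wide_attrs, wide_fds)
skill_is_bcnf = is_bcnf(skill_attrs, skill_fds)
```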

NOTE Below is a brief explanation of some of the abbreviations used in Date's definitions.

MVD: multivalued dependency. Used only for entities with three or more attributes. In a multivalued dependency, the value of an attribute depends on only part of the primary key.

FD: functional dependency. In a functional dependency, the value of an attribute depends on the value of another attribute that is not part of the primary key.

JD: join dependency. In a join dependency, the primary key of the parent entity is traceable to at least the third level of descendants while retaining the ability to be used in the original key join.

A relation R is in fourth normal form (4NF) if and only if, whenever there is an MVD in R, such as A →→ B, all attributes of R are also functionally dependent on A. In other words, the only dependencies (FD or MVD) in R are of the form K → X (that is, a functional dependency of attribute X on a candidate key K). Accordingly, R meets the requirements of 4NF if it is in BCNF and all MVDs are in fact FDs (Date, ibid.).

For fifth normal form: a relation R satisfies the join dependency (JD) *(X, Y, ..., Z) if and only if R is equal to the join of its projections onto X, Y, ..., Z, where X, Y, ..., Z are subsets of the set of attributes of R.
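
A join dependency can be tested on sample data by projecting the relation and joining the projections back together. The following sketch is illustrative only: the helper names and the person/skill/language data are hypothetical, and the components are assumed to cover all attributes of the relation.

```python
def project(rows, attrs):
    """Projection of a relation (list of dicts) onto attrs, as a set of tuples."""
    return {tuple((a, row[a]) for a in attrs) for row in rows}


def natural_join(left, right):
    """Natural join of two sets of tuples of (attribute, value) pairs."""
    joined = set()
    for lt in left:
        for rt in right:
            ld, rd = dict(lt), dict(rt)
            # Tuples combine only if they agree on every shared attribute.
            if all(ld[a] == rd[a] for a in ld.keys() & rd.keys()):
                joined.add(tuple(sorted({**ld, **rd}.items())))
    return joined


def satisfies_jd(rows, components):
    """True if rows equal the natural join of their projections onto the components."""
    result = project(rows, sorted(components[0]))
    for comp in components[1:]:
        result = natural_join(result, project(rows, sorted(comp)))
    original = {tuple(sorted(row.items())) for row in rows}
    return result == original


full = [
    {"person": "Ann", "skill": "SQL", "language": "EN"},
    {"person": "Ann", "skill": "SQL", "language": "FR"},
    {"person": "Ann", "skill": "UML", "language": "EN"},
    {"person": "Ann", "skill": "UML", "language": "FR"},
]
components = [{"person", "skill"}, {"person", "language"}]

jd_holds_for_full = satisfies_jd(full, components)
jd_holds_for_partial = satisfies_jd(full[:3], components)
```

With all four combinations present, the relation can be split into the two projections without loss; once a row is missing, the join produces a tuple that was not in the original data.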

There are many other normal forms for complex data types and specific situations that are beyond the scope of our discussion. Anyone enthusiastic about model development may want to explore them.

Business Normal Forms

In his book, Clive Finkelstein (Finkelstein C. An Introduction to Information Engineering: From Strategic Planning to Information Systems. Reading, Massachusetts: Addison-Wesley, 1989) took a different approach to normalization. He defines business normal forms in terms of reductions to those forms. Many modelers find this approach more intuitive and pragmatic.

First business normal form (1BNF) moves repeating groups to another entity. This entity gets its own name and its primary (composite) key attributes from the original entity and its repeating group.

Second business normal form (2BNF) moves attributes that partially depend on a primary key to another entity. The primary (composite) key of this entity is the primary key of the entity in which the attribute originally resided, together with any additional keys on which the attribute is entirely dependent.

Third business normal form (3BNF) moves attributes that do not depend on the primary key to another entity, where they are completely dependent on the primary key of that entity.

Fourth business normal form (4BNF) moves attributes that depend on the value of the primary key or are optional to a secondary entity, where they depend entirely on the value of the primary key or where they must (mandatorily) be present.

Fifth business normal form (5BNF) appears as a structural entity if there is a recursive or other dependency between instances of a secondary entity, or if a recursive dependency exists between instances of its primary entity.

Completed logical data model

The completed logical model must satisfy the requirements of the third business normal form and include all entities, attributes, and relationships necessary to support the data requirements and business rules associated with the data.

All entities must have names that describe the content and a clear, concise, complete description or definition. In one of the following publications, an initial set of recommendations for the correct formation of names and descriptions of entities will be considered.

Entities must have a complete set of attributes, so that every fact about each entity can be represented by its attributes. Each attribute must have a name that reflects its values, a logical data type, and a clear, short, complete description or definition. In one of the following publications, we will consider an initial set of recommendations for the correct formation of names and descriptions of attributes.

Relationships should include a verb phrase that describes the relationship between entities, along with characteristics such as cardinality (plurality) and whether the relationship is mandatory or optional.

NOTE The cardinality (plurality) of a relationship describes the maximum number of secondary entity instances that can be associated with an instance of the original entity. Whether the relationship is mandatory or optional determines the minimum number of instances of a secondary entity that can be associated with an instance of the original entity.

Physical data model

After creating a complete and adequate logical model, you are ready to make a decision on the choice of implementation platform. The choice of platform depends on the requirements for data use and the strategic principles of the organization's architecture. Platform selection is a complex issue that is beyond the scope of this book.

In ERwin, the physical model is a graphical representation of the actual database. The physical database will consist of tables, columns, and relationships. The physical model depends on the platform chosen for implementation and the data usage requirements. The physical model for IMS will be very different from the same model for Sybase. The physical model for OLAP reports will look different than the model for OLTP (Online Transaction Processing).

The data modeler and the database administrator (DBA) use the logical model, usage requirements, and corporate architecture strategic principles to develop the physical data model. You can denormalize the physical model to improve performance, and create views to support usage requirements. The following sections detail the process of denormalization and view creation.

This section provides an overview of the process of building a physical model, collecting requirements for using data, and defining the components of a physical model and reverse engineering. These issues will be covered in more detail in future publications.

Collection of data usage requirements

Typically, you collect data usage requirements early on during interviews and work sessions. At the same time, the requirements should define the use of data by the user as fully as possible. Superficial attitude and gaps in the physical model can lead to unplanned costs and delay the project. Usage requirements include:

- access and performance requirements;

- volumetric characteristics (an estimate of the amount of data to be stored), which give the administrator an idea of the physical size of the database;

- an estimate of the number of users who need concurrent access to the data, which helps in designing the database for an acceptable level of performance;

- totals, summaries, and other calculated or derived data that may be candidates for storage in persistent data structures;

- requirements for reports and standard queries, which help the database administrator build indexes;

- views (persistent or virtual) that assist the user in performing data aggregation or filtering operations.
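
A volumetric estimate of the kind mentioned in the list can be reduced to simple arithmetic. The sketch below is only a back-of-the-envelope illustration: the formula (rows per year × retention × average row size, plus a flat percentage for indexes), the 30% index allowance, and all workload figures are assumptions, not values from the text.

```python
def estimate_table_bytes(rows_per_year, avg_row_bytes, years_retained, index_overhead_pct=30):
    """Rough size of one table: raw data plus a flat percentage for indexes."""
    data_bytes = rows_per_year * years_retained * avg_row_bytes
    return data_bytes * (100 + index_overhead_pct) // 100


# Hypothetical figures gathered in a usage requirements session.
tables = {
    "sales_detail": {"rows_per_year": 5_000_000, "avg_row_bytes": 120, "years_retained": 2},
    "sales_monthly": {"rows_per_year": 60_000, "avg_row_bytes": 80, "years_retained": 10},
}

total_bytes = sum(estimate_table_bytes(**spec) for spec in tables.values())
total_gib = total_bytes / 2**30
```

In this made-up example the detailed table is kept for a short period and the aggregated table for a long one, which is one way the retention period feeds into the size estimate.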

In addition to the chairperson, secretary, and users, the usage requirements session should include the modeler, database administrator, and database architect. User requirements for historical data should be discussed. The length of time that data is stored has a significant impact on the size of the database. Often, older data is stored in aggregate form, and atomic data is archived or deleted.

Users should bring sample queries and reports with them to the session. Reports must be strictly defined and must include the atomic values used for any total and summary fields.

Components of the physical data model

The components of the physical data model are tables, columns, and relationships. Entities in the logical model are likely to become tables in the physical model. Logical attributes will become columns. Logical relationships will become referential integrity constraints. Some logical relationships cannot be implemented in a physical database.
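
The mapping from logical to physical components can be sketched as a small generator that turns an entity description into a `CREATE TABLE` statement. Everything here is hypothetical: the dictionary layout for entities, the `TYPE_MAP` from logical data types to SQLite column types, and the sample entities. A real modeling tool applies far more rules.

```python
import sqlite3

# Hypothetical mapping from logical data types to SQLite column types.
TYPE_MAP = {"identifier": "INTEGER", "text": "TEXT", "number": "REAL", "date": "TEXT"}


def entity_to_ddl(entity):
    """Render one logical entity (a dict) as a CREATE TABLE statement."""
    columns = []
    for attr in entity["attributes"]:  # attributes become columns
        col = f'{attr["name"]} {TYPE_MAP[attr["type"]]}'
        if attr.get("required"):
            col += " NOT NULL"
        columns.append(col)
    columns.append(f'PRIMARY KEY ({", ".join(entity["key"])})')
    for fk in entity.get("relationships", []):  # relationships become constraints
        columns.append(
            f'FOREIGN KEY ({fk["column"]}) REFERENCES {fk["parent"]}({fk["parent_key"]})'
        )
    body = ",\n    ".join(columns)
    return f'CREATE TABLE {entity["name"]} (\n    {body}\n)'


school = {
    "name": "school",
    "key": ["school_id"],
    "attributes": [
        {"name": "school_id", "type": "identifier", "required": True},
        {"name": "school_name", "type": "text", "required": True},
    ],
}
person = {
    "name": "person",
    "key": ["person_id"],
    "attributes": [
        {"name": "person_id", "type": "identifier", "required": True},
        {"name": "birth_date", "type": "date"},
        {"name": "school_id", "type": "identifier"},
    ],
    "relationships": [{"column": "school_id", "parent": "school", "parent_key": "school_id"}],
}

ddl_statements = [entity_to_ddl(school), entity_to_ddl(person)]

# Check that the generated statements are accepted by a real database engine.
conn = sqlite3.connect(":memory:")
for stmt in ddl_statements:
    conn.execute(stmt)
created = [
    r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")
]
```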

Reverse engineering

When the logical model is not available, it becomes necessary to recreate the model from the existing database. In ERwin, this process is called reverse engineering. Reverse engineering can be done in several ways. The modeler can explore the data structures in the database and recreate the tables in a visual modeling environment. You can import data definition language (DDL) into a tool that supports reverse engineering (for example, ERwin). Advanced tools such as ERwin include functions that connect to an existing database through ODBC and create a model by reading the data structures directly. Reverse engineering using ERwin will be discussed in detail in a future publication.
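
The idea of creating a model by reading data structures directly can be shown in miniature with SQLite's catalog. This is not how ERwin works internally and it does not use ODBC; it is a sketch that assumes a SQLite database and uses the `sqlite_master` table and the `table_info` and `foreign_key_list` pragmas. The function name `reverse_engineer` and the sample schema are hypothetical.

```python
import sqlite3


def reverse_engineer(conn):
    """Recover tables, columns, and foreign keys from an open SQLite connection."""
    model = {}
    tables = [
        r[0]
        for r in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = [
            (r[1], r[2], bool(r[5])) for r in conn.execute(f'PRAGMA table_info("{table}")')
        ]
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        fks = [
            (r[3], r[2], r[4]) for r in conn.execute(f'PRAGMA foreign_key_list("{table}")')
        ]
        model[table] = {"columns": columns, "foreign_keys": fks}
    return model


# A small existing database to recover the model from.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE school (school_id INTEGER PRIMARY KEY, school_name TEXT NOT NULL);
CREATE TABLE person (
    person_id INTEGER PRIMARY KEY,
    name      TEXT NOT NULL,
    school_id INTEGER REFERENCES school(school_id)
);
""")
model = reverse_engineer(conn)
```

Each entry of `model` lists the columns as (name, declared type, is part of primary key) and the foreign keys as (column, parent table, parent column), which is roughly the information a modeler would redraw as entities and relationships.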

Use of corporate functional boundaries

When building a logical model, it is important for the modeler to ensure that the new model matches the enterprise model. Using corporate functional boundaries means modeling data in terms used within the corporation. The way data is used in a corporation is changing faster than the data itself. In each logical model, the data must be represented holistically, regardless of the business domain it supports. Entities, attributes, and relationships should define business rules at the corporate level.

NOTE Some of my colleagues refer to these corporate functional boundaries as real-world modeling. Real-world modeling encourages the modeler to view information in terms of its real interdependencies and relationships.

The use of corporate functional boundaries for a properly constructed data model provides a framework to support the information needs of any number of processes and applications, enabling a corporation to more effectively exploit one of its most valuable assets, information.

What is an enterprise data model?

Enterprise Data Model (EDM) contains entities, attributes, and relationships that represent the information needs of a corporation. EDM is usually subdivided into subject areas, which are groups of entities related to supporting specific business needs. Some subject areas may cover specific business functions such as contract management, while others may group entities that describe products or services.

Each logical model must correspond to an existing enterprise data model domain. If the logical model does not meet this requirement, a model that defines the subject area must be added to it. This comparison ensures that the corporate model is improved or adjusted and all logical modeling efforts are coordinated within the corporation.

EDM also includes specific entities that define the scope of values for key attributes. These entities have no parents and are defined as independent. Independent entities are often used to maintain the integrity of relationships. These entities are identified by several different names, such as code tables, reference tables, type tables, or classification tables. We will use the term "corporate business object". An enterprise business object is an entity that contains a set of attribute values that are independent of any other entity. Enterprise business objects within a corporation should be used consistently.
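
How an independent reference entity maintains the integrity of relationships can be sketched with a code table and a foreign key. The tables `contract_type` and `contract` and their codes are hypothetical; SQLite is used only as a convenient engine and enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks foreign keys only when enabled
conn.executescript("""
-- Independent entity (code table): it has no parents of its own.
CREATE TABLE contract_type (
    type_code   TEXT PRIMARY KEY,
    description TEXT NOT NULL
);
CREATE TABLE contract (
    contract_id INTEGER PRIMARY KEY,
    type_code   TEXT NOT NULL REFERENCES contract_type(type_code)
);
INSERT INTO contract_type VALUES ('LEASE', 'Lease agreement'), ('SALE', 'Sales contract');
""")

# A value from the reference table is accepted.
conn.execute("INSERT INTO contract VALUES (1, 'LEASE')")

# A value outside the defined set is rejected by the foreign key.
try:
    conn.execute("INSERT INTO contract VALUES (2, 'LOAN')")
    unknown_code_rejected = False
except sqlite3.IntegrityError:
    unknown_code_rejected = True

contract_count = conn.execute("SELECT COUNT(*) FROM contract").fetchone()[0]
```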

Building an Enterprise Data Model by Accretion

There are organizations where the corporate model was built from start to finish in a single concerted effort. Most organizations, however, arrive at a fairly complete enterprise model by accretion.

Accretion means building something up gradually, layer by layer, just as an oyster grows a pearl. Each data model created contributes to the formation of the EDM. Building an EDM in this way requires additional modeling steps to add new data structures and domains or to extend existing data structures. This makes it possible to build the enterprise data model incrementally, iteratively adding levels of detail and refinement.

The concept of modeling methodology

There are several methodologies for visual data modeling. ERwin supports two:

IDEF1X (Integration Definition for Information Modeling - integrated description of information models).

IE (Information Engineering - information engineering).

IDEF1X is a good methodology and its notation is widely used.

Integrated description of information models

IDEF1X is a highly structured data modeling methodology that extends the IDEF1 methodology adopted as a FIPS (Federal Information Processing Standards) standard. IDEF1X uses a highly structured set of modeling construct types and results in a data model that requires an understanding of the physical nature of the data before such information can be made available.

The rigid structure of IDEF1X forces the modeler to assign characteristics to entities that may not correspond to the realities of the world around them. For example, IDEF1X requires all entity subtypes to be exclusive. As a result, a person cannot be both a client and an employee, although real practice tells us otherwise.

Information engineering

Clive Finkelstein is often called the father of information engineering, although James Martin shared similar concepts with him (Martin, James. Managing the Database Environment. Upper Saddle River, New Jersey: Prentice Hall, 1983). Information engineering uses a business-driven approach to managing information and a different notation to represent business rules. IE extends and develops the notation and basic concepts of the ER methodology proposed by Peter Chen.

IE provides the infrastructure to support information requirements by integrating corporate strategic planning with the information systems being developed. Such integration makes it possible to more closely link the management of information resources with the long-term strategic prospects of the corporation. This business-driven approach leads many modelers to choose IE over other methodologies that primarily focus on solving immediate development problems.

IE provides a workflow that leads a corporation to identify all of its information needs to collect and manage data and identify relationships between information objects. As a result, information requirements are articulated based on management directives and can be directly translated into a management information system that will support strategic information needs.

Conclusion

Understanding how to use a data modeling tool like ERwin is only part of the problem. In addition, you must understand when data modeling tasks are performed and how information requirements and business rules are collected to be represented in the data model. Conducting work sessions provides the most favorable conditions for collecting information requirements in an environment that includes subject matter experts, users, and information technology specialists.

Building a good data model requires the analysis and research of information requirements and business rules collected during work sessions and interviews. The resulting data model should be compared to the enterprise model, if possible, to ensure that it does not conflict with existing object models and includes all required objects.

The data model consists of logical and physical models representing information requirements and business rules. The logical model must be reduced to third normal form. Third normal form eliminates insertion, update, and deletion anomalies in the data structures and so supports the "one fact in one place" principle. The collected information requirements and business rules should be analyzed and researched. They need to be compared with the enterprise model to ensure that they do not conflict with existing object models and that they include all required objects.

In ERwin, the data model includes both logical and physical models. ERwin implements the ER approach and allows you to create logical and physical model objects to represent information requirements and business rules. Logical model objects include entities, attributes, and relationships. Physical model objects include tables, columns, and referential integrity constraints.

In one of the following publications, the issues of identifying entities, determining entity types, choosing entity names and descriptions, as well as some tricks to avoid the most common modeling errors associated with the use of entities, will be considered.

Increasingly, IT professionals are turning their attention to data management solutions based on industry standard data models and business solution templates. Ready-to-load complex physical data models and business intelligence reports for specific areas of activity make it possible to unify the information component of the enterprise and significantly speed up business processes. Solution templates allow service providers to leverage the power of non-standard information hidden in existing systems, thereby reducing project timelines, costs, and risks. For example, real projects show that data model and business solution templates can reduce development effort by 50%.

An industry logical model is a domain-specific, integrated and logically structured view of all the information that must be in a corporate data warehouse to answer both strategic and tactical business questions. The main purpose of models is to facilitate orientation in the data space and help in highlighting the details that are important for business development. In today's business environment, it is absolutely essential to have a clear understanding of the relationships between the various components and a good understanding of the big picture of the organization. Identification of all the details and relationships using models allows the most efficient use of time and tools for organizing the work of the company.

Data models are abstract models that describe how data is represented and accessed. Data models define data elements and relationships between them in a given area. A data model is a navigational tool for both business and IT professionals that uses a specific set of symbols and words to accurately explain a specific class of real information. This improves communication within the organization and thus creates a more flexible and stable application environment.

An example of the GIS model for government authorities and local self-government.

Today, it is strategically important for software and service providers to be able to quickly respond to changes in the industry associated with technological innovations, the removal of government restrictions and the complexity of supply chains. Along with changes in the business model, the complexity and cost of information technology necessary to support the activities of the company is growing. Data management is especially difficult in an environment where corporate information systems and their functional and business requirements are constantly changing.

Industry data models are designed to facilitate and optimize this process and to bring the IT approach up to date.

Industry data models from Esri

Data models for the Esri ArcGIS platform are working templates for use in GIS projects and for creating data structures for various application areas. Building a data model involves creating a conceptual design, a logical structure, and a physical structure that can then be used to build a personal or corporate geodatabase. ArcGIS provides tools for creating and managing a database schema, and data model templates are used to launch a GIS project quickly across a variety of applications and industries. Esri, along with the user community, has spent a significant amount of time developing a number of templates that can help you quickly start designing an enterprise geodatabase. These projects are described and documented at support.esri.com/datamodels. Below, in the order in which they appear on that site, are the translated names of Esri's industry models:

- Address register

- Agriculture

- Meteorology

- Basic Spatial Data

- Biodiversity

- Inner space of buildings

- Greenhouse gas accounting

- Maintenance of administrative boundaries

- Military establishment. Intelligence service

- Energy (including the new ArcGIS MultiSpeak protocol)

- Ecological buildings

- Ministry of Emergency Situations. Fire protection

- Forest cadastre

- Forestry

- Geology

- National level GIS (e-gov)

- Groundwater and waste water

- Healthcare

- Archeology and protection of memorial sites

- National security

- Hydrology

- International Hydrographic Organization (IHO). S-57 format for ENC

- Irrigation

- Land Registry

- Municipal government

- Maritime navigation

- State cadastre

- Oil and gas structures

- Pipelines

- Raster stores

- Bathymetry, seabed topography

- Telecommunications

- Transport

- Plumbing, sewerage, utilities

These models contain all the necessary features of the industry standard, namely:

- are freely available;

- are not tied to the technology of the "selected" manufacturer;

- created as a result of the implementation of real projects;

- created with the participation of industry experts;

- designed to provide information interaction between various products and technologies;

- do not contradict other standards and regulatory documents;

- used in implemented projects around the world;

- are designed to work with information throughout the life cycle of the system being created, and not the project itself;

- expandable to the needs of the customer without losing compatibility with other projects and / or models;

- accompanied by additional materials and examples;

- used in guidelines and technical materials of various industrial companies;

- have a large community of participants, with access to the community open to everyone;

- are referenced in a large number of publications in recent years.

Esri is part of an expert group of independent bodies that recommend various industry models for use, such as PODS (Pipeline Open Data Standards, an open standard for the oil and gas industry; PODS is currently implemented as the Esri geodatabase PODS Esri Spatial 5.1.1) or the geodatabase (GDB) from ArcGIS for Aviation, which takes into account ICAO and FAA recommendations as well as the AIXM 5.0 navigation data exchange standard. In addition, there are recommended models that strictly adhere to existing industry standards, such as S-57 and ArcGIS for Maritime (marine and coastal features), as well as models that grew out of the work of Esri Professional Services and are de facto standards in their fields. For example, GIS for the Nation and Local Government have influenced the NSDI and INSPIRE standards, while Hydro and Groundwater are heavily used in the freely available ArcHydro professional package and in commercial products of third-party firms. It should be noted that Esri also supports de facto standards such as NHDI. All proposed data models are documented and ready for use in enterprise IT processes. Accompanying materials for the models include:

- UML diagrams of entity relationships;

- data structures, domains, directories;

- ready-made geodatabase templates in ArcGIS GDB format;

- sample data and sample applications;

- examples of data loading scripts, examples of analysis utilities;

- reference books on the proposed data structure.

Esri summarizes its experience in building industry models in the form of books and localizes published materials. Esri CIS has localized and published the following books:

- Geospatial Service Oriented Architecture (SOA);

- Designing geodatabases for transport;

- Corporate geoinformation systems;

- GIS: new energy of electric and gas enterprises;

- Oil and gas on a digital map;

- Modeling our world. Esri Geodatabase Design Guide;

- Thinking about GIS. GIS planning: a guide for managers;

- Geographic information systems. Basics;

- GIS for administrative and economic management;

- Web GIS. Principles and application;

- Systems Design Strategies, 26th edition;

- 68 issues of the ArcReview magazine with publications by companies and users of GIS systems;

- ... and many other thematic notes and publications.

For example, the book "Modeling our world..." (translation) is a comprehensive guide and reference to GIS data modeling in general and to the geodatabase data model in particular. The book shows how to make the right data modeling decisions, which are involved in every aspect of a GIS project, from database design and data collection to spatial analysis and visualization. It describes in detail how to design a geographic database appropriate for the project, set up database functionality without programming, manage workflow in complex projects, model a variety of network structures such as river, transport, or electric networks, integrate satellite imagery into geographic analysis and mapping, and create 3D GIS data models. The book "Designing geodatabases for transport" contains methodological approaches that have been tested on a large number of projects and fully comply with the legislative requirements of Europe and the United States, as well as international standards. And the book "GIS: new energy of electric and gas enterprises" uses real-world examples to show the benefits that an enterprise GIS can bring to an energy supplier, including aspects such as customer service, network operation, and other business processes.

Some of the books, translated and original, published in Russian by Esri CIS and DATA+. They cover both conceptual issues related to GIS technology and many applied aspects of modeling and deploying GIS of various scales and purposes.

We will consider the use of industry models using the BISDM (Building Interior Space Data Model) version 3.0 data model as an example. BISDM is a development of the more general BIM (Building Information Model) and is intended for use in the design, construction, operation, and decommissioning of buildings and structures. Used in GIS software, it allows you to exchange geodata with other platforms effectively and to interact with them. It belongs to the general group of FM (organization infrastructure management) tasks. The main advantages of the BISDM model are that it allows you to:

- organize the exchange of information in a heterogeneous environment according to uniform rules;

- get a "physical" embodiment of the BIM concept and the recommended rules for managing a construction project;

- maintain a single repository using GIS tools throughout the entire life cycle of the building (from design to decommissioning);

- coordinate the work of various specialists in the project;

- visualize the planned schedule and construction stages for all participants;

- give a preliminary estimate of the cost and construction time (4D and 5D data);

- control the progress of the project;

- ensure the quality operation of the building, including maintenance and repairs;

- become part of the asset management system, including the functions of analyzing the efficiency of space use (renting, storage facilities, employee management);

- calculate and manage the energy efficiency of the building;

- simulate the movement of human flows.

BISDM defines the rules for working with spatial data at the level of internal premises in a building, including the purpose and types of use, laid communications, installed equipment, accounting for repairs and maintenance, logging incidents, relationships with other company assets. The model helps to create a unified repository of geographic and non-geographic data. The experience of the world's leading companies was used to isolate entities and model at the GDB level (geodatabase) the spatial and logical relationships of all physical elements that form both the building itself and its interior. Following the principles of BISDM allows you to significantly simplify the tasks of integration with other systems. At the first stage, this is usually integration with CAD. Then, during the operation of the building, data exchange with ERP and EAM systems (SAP, TRIRIGA, Maximo, etc.) is used.

Visualization of BISDM structural elements using ArcGIS.

In the case of using BISDM, the customer / owner of the facility receives an end-to-end exchange of information from the idea of creating a facility to the development of a complete project, construction control with obtaining up-to-date information by the time the facility is put into operation, control of parameters during operation, and even during reconstruction or decommissioning of the facility. Following the BISDM paradigm, the GIS and the GDB created with its help become a common data repository for related systems. Often in the GDB there are data created and operated by third-party systems. This must be taken into account when designing the architecture of the system being created.

At a certain stage, the accumulated "critical mass" of information makes it possible to move to a qualitatively new level. For example, once the design phase of a new building is complete, it is possible to automatically visualize 3D survey models in GIS, compile a list of equipment to be installed, calculate the length in kilometers of the engineering networks to be laid, perform a number of verifications, and even give a preliminary financial estimate of the project cost.

When BISDM and ArcGIS are used together, 3D models can be built automatically from the accumulated data, since the GDB contains a complete description of the object, including z-coordinates, floor assignment, types of element connections, equipment installation methods, materials, available paths for personnel movement, the functional purpose of each element, and so on. It should be noted that after the initial import of all design materials into the BISDM GDB, additional content is needed for:

- placement of 3D models of objects and equipment at designated places;

- collecting information about the cost of materials and the procedure for their laying and installation;

- checking that non-standard equipment, given its dimensions, can pass through to its installation site.

ArcGIS simplifies the import of additional 3D objects and reference data from external sources. The ArcGIS Data Interoperability module allows you to create procedures for importing such data and placing it correctly within the model. The formats commonly used in the industry are supported, including IFC, Autodesk Revit, and Bentley MicroStation.

Industry data models from IBM

IBM provides a set of data warehouse management tools and models for a variety of industries:

- IBM Banking and Financial Markets Data Warehouse (finance)

- IBM Banking Data Warehouse

- IBM Banking Process and Service Models

- IBM Health Plan Data Model (health)

- IBM Insurance Information Warehouse (insurance)

- IBM Insurance Process and Service Models

- IBM Retail Data Warehouse (retail)

- IBM Telecommunications Data Warehouse (telecommunications)

- InfoSphere Warehouse Pack:

- for Customer Insight (to understand customers)

- for Market and Campaign Insight (to understand campaigns and the market)

- for Supply Chain Insight (for understanding suppliers).

For example, the IBM Banking and Financial Markets Data Warehouse model is designed to address the specific challenges of the banking industry in terms of data, and IBM Banking Process and Service Models does so in terms of processes and SOA (service-oriented architecture). For the telecommunications industry, the models are IBM Information FrameWork (IFW) and IBM Telecommunications Data Warehouse (TDW). They help to significantly speed up the creation of analytical systems and reduce the risks associated with developing business intelligence applications, managing corporate data, and organizing data warehouses, taking into account the specifics of the telecommunications industry. IBM TDW covers the entire spectrum of the telecommunications market, from Internet providers and cable network operators offering wired and wireless telephony, data transmission, and multimedia content, to multinational companies providing telephone, satellite, long-distance, and international communication services, as well as organizations that operate global networks. Today, TDW is used by large and small providers of wired and wireless services around the world.

InfoSphere Warehouse Pack for Customer Insight provides structured, easily deployable business content for a growing number of business projects and industries, including banking, insurance, finance, health insurance programs, telecommunications, retail, and distribution. For business users, InfoSphere Warehouse Pack for Market and Campaign Insight helps maximize the effectiveness of market analysis and marketing campaigns through a step-by-step, business-specific development process. With InfoSphere Warehouse Pack for Supply Chain Insight, organizations can obtain current information on supply chain operations.

Esri's position within the IBM solution architecture.

Of particular note is IBM's approach to energy and utility companies. To meet growing customer demands, utility companies need a more flexible architecture than they use today, as well as an industry-standard object model to facilitate the free exchange of information. This will improve the communication capabilities of energy companies, making their interactions more cost-effective, and give the new systems better visibility into all required resources, no matter where they are located within the organization. The basis for this approach is SOA (service-oriented architecture), a component model that maps the functions of departments to reusable services of various applications. The "services" of these components communicate through loosely coupled interfaces, hiding from the user the full complexity of the systems behind them. In this mode, enterprises can easily add new applications regardless of the software vendor, operating system, programming language, or other internal characteristics of the software. The concept is implemented on the basis of SOA in SAFE (Solution Architecture for Energy), which gives the utility industry a standards-based, holistic view of its infrastructure.

Esri ArcGIS® is a globally recognized software platform for geographic information systems (GIS) that supports the creation and management of digital assets of electric power, gas transmission, distribution, and telecommunications networks. ArcGIS makes it possible to take the most complete inventory of the components of an electrical distribution network, taking into account their spatial location. ArcGIS significantly extends the IBM SAFE architecture by providing the tools, applications, workflows, analytics, and information and integration capabilities needed to manage the smart grid. Within IBM SAFE, ArcGIS makes it possible to obtain information from various sources about infrastructure objects, assets, customers, and employees with accurate location data, as well as to create, store, and process georeferenced information about enterprise assets (poles, pipelines, wires, transformers, cable ducts, etc.). Within a SAFE infrastructure, ArcGIS makes it possible to dynamically link key business applications by combining data from GIS, SCADA, and customer service systems with external information such as traffic, weather conditions, or satellite imagery. Utilities use this combined information for a variety of purposes, from a common operating picture (COP, the big picture of the operational environment) to site inspections, maintenance, network analysis, and planning.

The information components of a power supply enterprise can be modeled using several levels, ranging from the lowest, physical level to the top, most complex level of business process logic. These layers can be integrated to meet typical industry requirements such as automated measurement logging and supervisory control and data acquisition (SCADA). By building the SAFE architecture, utility companies are making significant strides in advancing an industry-wide open object model called the Common Information Model (CIM) for Energy and Utilities. This model provides the necessary basis for moving many enterprises towards a service-oriented architecture, as it encourages the use of open standards for structuring data and objects. When all systems use the same objects, the confusion and inflexibility associated with different implementations of the same objects are reduced to a minimum. Thus, the definition of the "customer" object and other important business objects will be unified across all systems of the power supply company. With CIM, service providers and service consumers can share a common data structure, which makes it easier to outsource costly business components, because CIM establishes a common base on which to build information sharing.

Conclusion

Comprehensive industry data models provide companies with a single, integrated view of their business information. Many companies find it difficult to integrate their data, although this is a prerequisite for most enterprise-wide projects. According to a study by The Data Warehousing Institute (TDWI), more than 69% of organizations surveyed found integration to be a significant barrier to new application adoption. Conversely, implementing data integration brings a company tangible income and increased efficiency.

A well-built model unambiguously defines the meaning of the data, which in this case is structured data (as opposed to unstructured data such as an image, binary file, or text, where the meaning can be ambiguous). The most effective industry models are offered by professional vendors, including Esri and IBM. The high returns from using their models come from their significant level of detail and accuracy. They usually contain many data attributes. In addition, experts from Esri and IBM not only have extensive modeling experience, but are also well versed in building models for a particular industry.

Database architecture

The CMD schema is a description of the structure of the data model from the administrator's point of view.

The AMD schema is a description of the internal, or physical, model. It describes the physical placement of the data on the storage media and holds direct references to where the data is located in storage (volumes, disks).

The CMD schema describes the structure of data, records, and fields.

DBMSs are built on one of three main types of data models:

1. Hierarchical model. It assumes a root record from which branches descend.

Not all objects can be conveniently described in this way. The hierarchy has no cross-links between branches, and it is characterized by high redundancy of information.

2. Network model. It makes it possible to represent correctly all the complexities of relationships.

The model is convenient for representing links with data from the external environment, but less convenient for describing data within the database, so the user has to do additional work to learn how to navigate the links.

3. Relational model. It is based on the mathematical concept of a relation, or, put simply, a table: for example, a rectangular two-dimensional one.

The relational data structure was developed in the late 1960s by a number of researchers, of whom IBM employee Edgar Codd made the most significant contribution. With the relational approach, data is presented in the form of two-dimensional tables, the form most natural for a person. For data processing, Codd suggested using the apparatus of set theory: union, intersection, difference, and Cartesian product.
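The set-theoretic operations named above can be tried out on small tables. The following is only an illustrative sketch in Python, not Codd's notation or any DBMS's implementation: each relation is modeled as a set of tuples, and the table contents (client names, cities, tariffs) are invented for the example.

```python
from itertools import product

# Two relations with the same header (name, city), modeled as sets of tuples.
# All values are made up for illustration.
moscow_clients = {("Ivanov", "Moscow"), ("Petrov", "Moscow")}
vip_clients = {("Petrov", "Moscow"), ("Sidorov", "Kazan")}

# Union: tuples that appear in at least one of the relations.
union = moscow_clients | vip_clients

# Intersection: tuples that appear in both relations.
intersection = moscow_clients & vip_clients

# Difference: tuples of the first relation that are absent from the second.
difference = moscow_clients - vip_clients

# Cartesian product: every tuple of one relation is combined with
# every tuple of another relation (here, a one-attribute relation).
tariffs = {("basic",), ("premium",)}
cartesian = {client + tariff for client, tariff in product(moscow_clients, tariffs)}
```

Union, intersection, and difference are only meaningful for relations with the same header, which is why both client tables use the same pair of attributes; the Cartesian product has no such restriction.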

Data type - this concept has the same meaning as in programming languages (i.e., the data type defines the internal representation in computer memory and the way a data instance is stored, as well as the set of values that the data instance can take and the set of valid operations on it). Modern databases support data types designed to store integers, floating-point numbers, characters and strings, and calendar dates. Many database servers implement other types as well; for example, the InterBase server has a special data type for storing large binary objects (BLOBs).

A domain is a potential set of values of a simple data type; it is similar to a data subtype in some programming languages. A domain is defined by two elements: the data type and a boolean expression that is applied to the data. If this expression evaluates to true, then the data instance belongs to the domain.
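The definition of a domain as a data type plus a boolean expression can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Domain` class and its `contains` method are hypothetical names introduced here, and the example domain is the integer type with the condition n > 0.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Domain:
    """A domain: a data type plus a boolean condition (hypothetical helper)."""
    name: str
    data_type: type
    condition: Callable[[Any], bool]

    def contains(self, value: Any) -> bool:
        # A value belongs to the domain if it has the right data type
        # and the boolean expression evaluates to true for it.
        return isinstance(value, self.data_type) and self.condition(value)


# Example domain: integers for which n > 0.
positive_int = Domain("positive_int", int, lambda n: n > 0)
```

With this sketch, `positive_int.contains(5)` is true, while `positive_int.contains(0)` and `positive_int.contains("5")` are false: the first fails the condition, the second fails the type check.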

A relation is a two-dimensional table of a special kind, consisting of a header and a body.

The header is a fixed set of attributes, each of which is defined on some domain, and there is a one-to-one correspondence between attributes and their defining domains.

Each attribute is defined on its own domain. For example, the domain may be an integer data type with the boolean condition n>0. The header does not change over time, unlike the body of the relation. The body of a relation is a collection of tuples, each of which is a set of attribute-value pairs.

The cardinality of a relation is the number of its tuples, and the degree of a relation is the number of its attributes.

The degree of a relation is a constant value for a given relation, while its cardinality varies with time. The cardinality of a relation is also called its cardinal number.

The above concepts are theoretical and are used in the development of language tools and software systems for relational DBMS. In everyday work, their informal equivalents are used instead:

relation - table;

attribute - column or field;

tuple - record or row.

Thus, the degree of the relation is the number of columns in the table, and the cardinal number is the number of rows.
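The difference between the two numbers can be seen on a small table. The sketch below is an assumption-laden illustration, not a DBMS structure: the header is a tuple of attribute names, the body is a set of tuples, and the employee data and the helper names `degree` and `cardinality` are invented for the example.

```python
# Header: a fixed set of attributes (columns).
header = ("id", "name", "salary")

# Body: a collection of tuples (rows); values are made up for illustration.
body = {
    (1, "Ivanov", 50000),
    (2, "Petrov", 62000),
    (3, "Sidorov", 58000),
}


def degree(header):
    """Degree of the relation: the number of attributes (columns)."""
    return len(header)


def cardinality(body):
    """Cardinal number of the relation: the number of tuples (rows)."""
    return len(body)


cardinality_before = cardinality(body)

# Adding a row changes the cardinal number but leaves the degree untouched.
body.add((4, "Kuznetsov", 47000))
cardinality_after = cardinality(body)
```

Here the degree stays at 3 throughout, while the cardinal number grows from 3 to 4 when the row is added.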

Since a relation is a set, and in classical set theory a set by definition cannot contain duplicate elements, a relation cannot have two identical tuples. Therefore, for a given relation, there is always a set of attributes that uniquely identifies a tuple. Such a set of attributes is called a key.

The key must meet the following requirements:

· must be unique;

· must be minimal, that is, the removal of any attribute from the key leads to a violation of uniqueness.

As a rule, the number of attributes in a key is less than the degree of the relation; in the extreme case, however, the key may contain all the attributes, since the combination of all attributes always satisfies the uniqueness condition. Typically, a relation has several keys. Of all the keys of a relation (they are also called "possible", or candidate, keys), one is chosen as the primary key. When choosing the primary key, preference is usually given to the key with the fewest attributes. It is also inadvisable to use keys with long string values.
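The two requirements for a key, uniqueness and minimality, can be checked mechanically on a sample table. The sketch below is only an illustration: the function names `is_unique` and `candidate_keys` and the sample rows are hypothetical, and the check works on the rows currently in the table, whereas a real key is a constraint that must hold for any data the relation may ever contain.

```python
from itertools import combinations


def is_unique(rows, attrs):
    """True if no two rows share the same values on the given attributes."""
    projected = [tuple(row[a] for a in attrs) for row in rows]
    return len(set(projected)) == len(projected)


def candidate_keys(rows, header):
    """Find all minimal unique attribute sets for the sample rows."""
    keys = []
    # Try smaller attribute sets first, so that minimal keys are found
    # before any of their supersets are examined.
    for size in range(1, len(header) + 1):
        for attrs in combinations(header, size):
            # Skip supersets of an already found key: they are not minimal.
            if any(set(key) <= set(attrs) for key in keys):
                continue
            if is_unique(rows, attrs):
                keys.append(attrs)
    return keys


# Sample data, invented for illustration.
header = ("id", "passport", "name", "city")
rows = [
    {"id": 1, "passport": "4501 111111", "name": "Ivanov", "city": "Moscow"},
    {"id": 2, "passport": "4501 222222", "name": "Petrov", "city": "Moscow"},
    {"id": 3, "passport": "4502 333333", "name": "Ivanov", "city": "Kazan"},
    {"id": 4, "passport": "4502 444444", "name": "Ivanov", "city": "Moscow"},
]

keys = candidate_keys(rows, header)

# Prefer the key with the fewest attributes as the primary key.
primary_key = min(keys, key=len)
```

On this sample both `id` and `passport` qualify as possible (candidate) keys. The sketch picks the first key with the fewest attributes; the additional advice to avoid long string values would also favor the integer `id` over the `passport` string.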