OS unix. Hardware architecture

UNIX has a long and interesting history. Starting as a frivolous and almost "toy" project of young researchers, UNIX has become a multi-million dollar industry, including universities, multinational corporations, governments, and international standards organizations in its orbit.

UNIX originated at AT&T's Bell Labs more than 20 years ago. At the time, Bell Labs was developing a multi-user time-sharing system, MULTICS (Multiplexed Information and Computing Service), together with MIT and General Electric. The project failed, partly because its goals were too ambitious for the computers of that time, and partly because it was written in PL/1, whose compiler was delayed and performed poorly once it finally appeared. Bell Labs therefore withdrew from the MULTICS project altogether, which gave one of its researchers, Ken Thompson, the opportunity to work on improving the Bell Labs operating environment. Thompson, together with Bell Labs employee Dennis Ritchie and several others, was developing a new file system, many of whose features were derived from MULTICS. To test the new file system, Thompson wrote an OS kernel and some programs for the GE-645 computer, which ran the GECOS multiprogramming time-sharing system.

Thompson also had a game called "Space Travel" that he had written while working on MULTICS. He ran it on the GE-645, but it did not work very well there because of the poor time-sharing efficiency, and machine time on the GE-645 was too expensive. As a result, Thompson and Ritchie decided to port the game to a DEC PDP-7 that was sitting idle in a corner and had 4096 18-bit words of memory, a teletypewriter, and a good graphics display. The PDP-7 had poor software, however, and after porting the game Thompson decided to implement on it the file system he had been working on for the GE-645. The first version of UNIX emerged from this work, although it had no name at the time. It already included the typical UNIX inode-based file system, had process and memory management subsystems, and allowed two users to work in time-sharing mode. The system was written in assembler.
The name UNIX (from Uniplexed Information and Computing Service) was given to the system by another Bell Labs employee, Brian Kernighan, who originally called it UNICS to emphasize its difference from the multi-user MULTICS. UNICS soon came to be written UNIX.

The first users of UNIX were employees of the Bell Labs patent department, who found it a convenient environment for creating texts.

The fate of UNIX was greatly influenced by its rewriting in the high-level language C, which Dennis Ritchie developed specifically for this purpose. This happened in 1973, by which time UNIX had 25 installations and a dedicated UNIX support group had been created at Bell Labs.

UNIX has been widely used since 1974, when Thompson and Ritchie described the system in the journal Communications of the ACM (CACM). It was readily adopted by universities, because it was supplied to them free of charge together with its C source code. The wide availability of efficient C compilers made UNIX unique among the operating systems of that time in its portability to different computers. Universities contributed significantly to improving UNIX and popularizing it further. Another step toward the recognition of UNIX as a standardized environment was Dennis Ritchie's development of the stdio I/O library. Thanks to the use of this library with the C compiler, UNIX programs became highly portable.

Fig. 5.1. History of UNIX development

The widespread use of UNIX has given rise to the problem of incompatibility among its many versions. Obviously, it is very frustrating for the user that a package purchased for one version of UNIX refuses to work on another version of UNIX. Periodically, attempts have been made and are being made to standardize UNIX, but so far they have met with limited success. The process of convergence of different versions of UNIX and their divergence is cyclical. In the face of a new threat from some other operating system, various UNIX vendors converge their products, but then competition forces them to make original improvements and versions diverge again. There is also a positive side to this process - the emergence of new ideas and tools that improve both UNIX and many other operating systems that have adopted a lot of useful things from it over the long years of its existence.

Figure 5.1 shows a simplified picture of the development of UNIX, which takes into account the succession of versions and the influence of adopted standards on them. Two quite different lines of UNIX versions are in widespread use: the AT&T line, UNIX System V, and the Berkeley university line, BSD. Many companies have developed and maintained their own versions of UNIX based on these lines: Sun Microsystems' SunOS and Solaris, Hewlett-Packard's HP-UX, Microsoft's XENIX, IBM's AIX, Novell's UnixWare (now sold to SCO), and the list goes on.

Standards such as AT&T's SVID, IEEE's POSIX, and the X/Open consortium's XPG4 have had the greatest influence on the unification of UNIX versions. These standards define the requirements for an interface between applications and the operating system to enable applications to run successfully on different versions of UNIX.

Regardless of the version, the common features for UNIX are:

- multi-user mode with means of protecting data from unauthorized access,

- implementation of multiprogram processing in time-sharing mode, based on the use of preemptive multitasking algorithms,

- using virtual memory and swap mechanisms to increase the level of multiprogramming,

- unification of I/O operations based on the extended use of the concept of "file",

- a hierarchical file system that forms a single directory tree regardless of the number of physical devices used to place files,

- portability of the system by writing its main part in C,

- various means of interaction between processes, including through the network,

- disk caching to reduce average file access time.
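The "file" unification named in the list above can be illustrated with a minimal Python sketch. It is an illustration only, not code from any UNIX source, and the function names are hypothetical. Assuming a UNIX-like system, it shows the same two low-level calls, os.write and os.read, working on a descriptor for a regular file and on a descriptor for a pipe, which is one of the means of interprocess communication.

```python
import os
import tempfile


def roundtrip_via_file(data: bytes) -> bytes:
    """Write data to a temporary regular file and read it back by descriptor."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, data)
        os.lseek(fd, 0, os.SEEK_SET)  # rewind to the start of the file
        return os.read(fd, len(data))
    finally:
        os.close(fd)
        os.unlink(path)


def roundtrip_via_pipe(data: bytes) -> bytes:
    """Write data into a pipe and read it back with the same calls."""
    read_fd, write_fd = os.pipe()
    try:
        # Small payloads fit in the pipe buffer, so this does not block.
        os.write(write_fd, data)
        return os.read(read_fd, len(data))
    finally:
        os.close(read_fd)
        os.close(write_fd)


if __name__ == "__main__":
    message = b"hello unix"
    print(roundtrip_via_file(message))
    print(roundtrip_via_pipe(message))
```

The caller does not need to know what kind of object is behind the descriptor; only the way the descriptor is obtained differs.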

UNIX System V Release 4 is an incomplete commercial version of the operating system: its code lacks many of the system utilities needed to run the OS successfully, such as administration utilities or a GUI manager. SVR4 is better seen as a standard implementation of the kernel code that incorporates the most popular and efficient solutions from various versions of the UNIX kernel, such as the VFS virtual file system, memory-mapped files, and so on. The SVR4 code (partially modified) formed the basis of many modern commercial versions of UNIX, such as HP-UX, Solaris, AIX, and so on.

Introduction

What is Unix?

Where to get free Unix?

What are the main differences between Unix and other OSes?

Why Unix?

Basic Unix Concepts

File system

Command interpreter

Manuals - man

Introduction

Writing about the Unix operating system is extremely difficult. Firstly, because a lot has been written about this system. Secondly, because the ideas and decisions of Unix have had and are having a huge impact on the development of all modern operating systems, and many of these ideas are already described in this book. Thirdly, because Unix is not one operating system, but a whole family of systems, and it is not always possible to "track" their relationship to each other, and it is simply impossible to describe all the operating systems included in this family. Nevertheless, without claiming to be complete in any way, we will try to give a cursory overview of the "Unix world" in those areas of it that seem interesting to us for the purposes of our training course.

The birth of the Unix operating system dates back to the late 1960s, and the story has already acquired "legends" that sometimes differ on the details. Unix was born at the Bell Telephone Laboratories (Bell Labs) research center, part of the AT&T corporation. Initially this self-initiated project for the PDP-7 computer (and later for the PDP-11) was either a file system, or a computer game, or a text preparation system, or all of these at once. What matters, however, is that from the very beginning the project, which eventually turned into an OS, was conceived as a software environment for collective use. The author of the first version of Unix is Ken Thompson, but a large team (D. Ritchie, B. Kernighan, R. Pike and others) took part in discussing the project and later in implementing it. In our opinion, several fortunate circumstances surrounding the birth of Unix determined the system's success for many years to come.

For most of the people on the team where Unix was born, this OS was "the third system." There is an opinion (see, for example) that a system programmer becomes highly qualified only on completing a third project: the first project is still a "student" one; in the second, the developer tries to include everything that did not work out in the first, and the result is too cumbersome; and only in the third is the necessary balance of desires and possibilities achieved. It is known that before the birth of Unix, the Bell Labs team took part (together with a number of other firms) in the development of the MULTICS OS. The final MULTICS product (Bell Labs did not take part in the last stages of development) bears all the hallmarks of a "second system" and is not widely used. It should be noted, however, that many fundamentally important ideas and decisions were born in that project, and some concepts that many believe originated in Unix actually come from MULTICS.

The Unix operating system was made "for myself and for my friends." It was not intended to capture a market or compete with any product. Its developers were also its users, and they themselves judged how well the system suited their needs. Free of market pressure, that judgment could be extremely objective.

Unix was a system that was made by programmers for programmers. This determined the elegance and conceptual harmony of the system - on the one hand, and on the other - the need for understanding the system for a Unix user and a sense of professional responsibility for a programmer developing software for Unix. And no subsequent attempts to make "Unix for Dummies" have been able to rid the Unix OS of this virtue.

In 1972-73, Ken Thompson and Dennis Ritchie wrote a new version of Unix. Especially for this purpose, D. Ritchie created the C programming language, which today needs no introduction. Over 90% of the Unix code is written in this language, and the language has become an integral part of the OS. Because the main part of the OS is written in a high-level language, it can be recompiled for any hardware platform, and this is the circumstance that determined the widespread use of Unix.

At the time Unix was born, US antitrust laws prevented AT&T from entering the software products market. The Unix operating system was therefore non-commercial and was distributed freely, primarily to universities. Its development continued there, most actively at the University of California at Berkeley. At this university the Berkeley Software Distribution group was created, which developed a separate branch of the OS, BSD Unix. Throughout subsequent history, mainstream Unix and BSD Unix have evolved in parallel, repeatedly enriching each other.

As Unix spread, commercial firms took a growing interest in it and began to release their own commercial versions. Over time, the "main" AT&T branch of Unix became commercial, and a subsidiary, Unix System Laboratories, was created to promote it. The BSD Unix branch in turn forked into commercial BSD and FreeBSD. Various commercial and free Unix-like systems were built on top of the AT&T Unix kernel but also included features borrowed from BSD Unix as well as original features. Despite the common origin, differences between members of the Unix family accumulated and eventually made it extremely difficult to port applications from one Unix-like operating system to another. At the initiative of Unix users, a movement arose to standardize the Unix API. This movement was supported by the International Organization for Standardization (ISO) and led to the POSIX (Portable Operating System Interface) standard, which is still being developed and is the most authoritative standard for operating systems. However, turning POSIX specifications into an official standard is a rather slow process that cannot keep up with the needs of software vendors, which has led to the emergence of alternative industry standards.

With the transfer of AT&T Unix to Novell, the operating system was renamed UnixWare, and the rights to the Unix trademark passed to the X/Open consortium. This consortium (now the Open Group) developed its own system specification, broader than POSIX, known as the Single Unix Specification. The second edition of this standard was released recently and is much better aligned with POSIX.

Finally, a number of firms producing their own versions of Unix formed the Open Software Foundation (OSF) consortium, which released its own version of Unix, OSF/1, based on the Mach microkernel. OSF also released the OSF/1 system specifications, which served as the basis on which OSF member firms released their own Unix systems. These systems include SunOS from Sun Microsystems, AIX from IBM, HP-UX from Hewlett-Packard, DIGITAL UNIX from Compaq, and others.

Initially, the Unix systems of these firms were mostly based on BSD Unix, but now most modern industrial Unix systems are built using (under license) the AT&T Unix System V Release 4 (S5R4) kernel, although they also inherit some properties of BSD Unix. We do not take responsibility for comparing commercial Unix systems, since comparisons of this kind that appear periodically in print often provide completely opposite results.

Novell sold Unix to the Santa Cruz Operation (SCO), which produced its own Unix product, SCO Open Server. SCO Open Server was based on an earlier version of the kernel (System V Release 3), but was superbly debugged and highly stable. The Santa Cruz Operation integrated its product with AT&T Unix and released Open Unix 8, but then sold Unix to Caldera, which is the owner of "classic" Unix today (late 2001).

Sun Microsystems began its introduction to the Unix world with SunOS, based on the BSD kernel. However, it subsequently replaced it with a Solaris system based on the S5R4. Version 8 of this OS is currently being distributed (there is also v.9-beta). Solaris runs on the SPARC platform (RISC processors manufactured to Sun specifications) and Intel-Pentium.

Hewlett-Packard offers HP-UX v.11 on the PA-RISC platform. HP-UX is based on S5R4 but contains many features that betray its BSD Unix origins. Of course, HP-UX will also be available on the Intel-Itanium platform.

IBM offers the AIX OS, whose latest version to date is 5L (it will be discussed later). IBM has not disclosed the "pedigree" of AIX; it is mostly an original development, but the first versions bore signs of BSD Unix origin. Now, however, AIX is closer to S5R4. Initially AIX was also available on the Intel-Pentium platform, but later (in line with general IBM policy) it was no longer supported there. AIX currently runs on IBM RS/6000 servers and other PowerPC-based computing platforms (including IBM supercomputers).

DEC's DIGITAL UNIX was the only commercial implementation of OSF/1. It ran on DEC's Alpha RISC servers. When DEC was taken over by Compaq in 1998, Compaq acquired both the Alpha servers and DIGITAL UNIX. Compaq intends to restore its presence in the Alpha server market and is therefore intensively developing an OS for these machines. The current name of this OS is Tru64 Unix (the current version is 5.1A); it is still based on the OSF/1 kernel and has many BSD Unix features.

Although most commercial Unix systems are based on a single kernel and conform to POSIX requirements, each has its own dialect of the API, and the differences between dialects accumulate. As a result, porting industrial applications from one Unix system to another is difficult and requires at least recompilation, and often corrections to the source code as well. An attempt to overcome the "confusion" and create a single Unix operating system for everyone was made in 1998 by an alliance of SCO, IBM and Sequent. These firms joined forces in the Monterey project to create a single OS based on UnixWare (owned at the time by SCO), IBM's AIX, and Sequent's DYNIX. (Sequent was a leader in the production of NUMA computers, that is, multiprocessors with non-uniform memory access, and DYNIX is a Unix for such computers.) The Monterey OS was to run on the 32-bit Intel-Pentium platform, the 64-bit PowerPC platform, and the new 64-bit Intel-Itanium platform. Nearly all the leaders of the hardware and middleware industry declared their support for the project. Even firms that had their own Unix clones (except Sun Microsystems) announced that on Intel platforms they would support only Monterey. Work on the project appeared to be going well. The Monterey OS was among the first to demonstrate that it ran on Intel-Itanium (along with Windows NT and Linux), and the only one that did not emulate the 32-bit Intel-Pentium architecture. However, in the final stage of the project a fatal event occurred: SCO sold its Unix division. Even earlier, Sequent had become part of IBM. The "successor" to all the features of the Monterey OS is IBM's AIX v.5L. Well, not quite all: the Intel-Pentium platform is not a strategic focus for IBM, and AIX is not available on it. And because other leaders of the computer industry do not share (or do not fully share) IBM's position, the idea of a common Unix operating system never came to fruition.

The UNIX operating system, the progenitor of many modern operating systems such as Linux, Android, Mac OS X and many others, was created within the walls of the Bell Labs research center, a division of AT&T. Generally speaking, Bell Labs was a true hotbed of scientists whose discoveries literally changed technology. For example, William Shockley, John Bardeen and Walter Brattain, who created the first bipolar transistor in 1947, worked at Bell Labs. One can say that the laser was invented at Bell Labs, although masers had already been created by then. Claude Shannon, the founder of information theory, also worked at Bell Labs. So did the creators of the C language, Ken Thompson and Dennis Ritchie (we will return to them later), as well as the author of C++, Bjarne Stroustrup.

On the way to UNIX

Before talking about UNIX itself, let us recall the operating systems that preceded it and that largely determined what UNIX is and, through it, many other modern operating systems.

The development of UNIX was not the first work on operating systems undertaken at Bell Labs. In 1957 the laboratory began to develop an operating system called BESYS (short for Bell Operating System). The project manager was Victor Vyssotsky, the son of a Russian astronomer who had emigrated to America. BESYS was an internal project that was never released as a finished commercial product, although it was distributed on magnetic tape to anyone who wanted it. The system was designed to run on computers of the IBM 704 and 709x series (IBM 7090, 7094). It is tempting to call these machines by some antediluvian term, but to avoid jarring the ear we will keep calling them computers.

IBM 704

BESYS was intended above all for batch execution of a large number of programs: a list of programs is supplied, and their execution is scheduled so as to use as much of the machine's resources as possible and keep the computer from standing idle. At the same time, BESYS already had the rudiments of a time-sharing operating system, that is, in essence, what is now called multitasking. When full-fledged time-sharing systems appeared, this capability was used so that several people could work with one computer at the same time, each from their own terminal.

In 1964 Bell Labs upgraded its computers, and as a result BESYS could no longer run on the new IBM machines; cross-platform portability was out of the question at the time. IBM then supplied its computers without operating systems. The Bell Labs developers could have started writing a new operating system, but they took a different path and joined the development of the Multics operating system.

The Multics project (short for Multiplexed Information and Computing Service) was proposed by MIT professor Jack Dennis. He, along with his students in 1963, developed a specification for a new operating system and managed to interest representatives of the General Electric company in the project. As a result, Bell Labs joined MIT and General Electric in developing a new operating system.

The project's ideas were very ambitious. First, it was to be an operating system with full time sharing. Second, Multics was written not in assembler but in one of the first high-level languages, PL/1, which was developed in 1964. Third, Multics could run on multiprocessor computers. The operating system also had a hierarchical file system; file names could contain any characters and be quite long, and the file system also provided symbolic links to directories.

Unfortunately, work on Multics dragged on, and the Bell Labs programmers did not wait for the product's release: in April 1969 they left the project. The release came in October of the same year, but the first version was reportedly terribly buggy, and the remaining developers spent another year fixing the bugs that users reported, after which Multics was a more reliable system.

Multics remained in development for quite a long time: the last release, version 12.5, came out in 1992. That is a completely different story, but Multics had a huge impact on the future of UNIX.

Birth of UNIX

UNIX appeared almost by accident, and the culprit was "Space Travel", a space flight game written by Ken Thompson. Back in 1969, Space Travel was first written for the Multics operating system, and after Bell Labs lost access to new versions of Multics, Ken rewrote the game in Fortran and ported it to the GECOS operating system that came with the GE-635 computer. But two problems arose. First, this computer did not have a very good display system, and second, playing on it was expensive, around $50-75 per hour.

But one day, Ken Thompson stumbled upon a DEC PDP-7 computer that was rarely used and might well be suitable for running Space Travel, plus it had a better video processor.

Porting the game to the PDP-7 was not easy; in fact, it required writing a new operating system to run it. But what will programmers not do for the sake of a favorite toy? This is how UNIX, or rather Unics, was born. The name, suggested by Brian Kernighan, is short for Uniplexed Information and Computing Service. Recall that the name Multics comes from Multiplexed Information and Computing Service; Unics was thus contrasted with Multics as the simpler system. Indeed, Multics was already being criticized for its complexity. For comparison, the first versions of the Unics kernel occupied only 12 kB of RAM versus 135 kB for Multics.

Ken Thompson

This time the developers did not (yet) experiment with high-level languages, and the first version of Unics was written in assembler. Besides Thompson himself, Dennis Ritchie took part in the development of Unics, and they were later joined by Douglas McIlroy, Joe Ossanna and Rudd Canaday. At first Kernighan, who proposed the name of the OS, provided only moral support.

A little later, in 1970, when multitasking was implemented, the operating system was renamed UNIX and the name was no longer treated as an abbreviation. This year is considered the official year of UNIX's birth, and system time is counted from January 1, 1970, as the number of seconds elapsed since that date. The same date is known more grandly as the beginning of the UNIX era (in English, the UNIX Epoch). Remember how we were all frightened by the year 2000 problem? A similar problem awaits us in 2038, when the signed 32-bit integers often used to store the date will no longer be able to represent the time, and the timestamp will wrap around to a negative value. I would like to believe that by then all vital software will use 64-bit variables for this purpose, pushing the fateful date back by some 292 billion years, and by then we will come up with something. 🙂
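The arithmetic behind the 2038 problem can be checked with a short Python sketch. This is an illustration under stated assumptions, not code from any UNIX system: the helper names are hypothetical, and the signed 32-bit counter is simulated with modular arithmetic, since Python integers do not overflow on their own.

```python
from datetime import datetime, timedelta, timezone

# Start of the UNIX Epoch, in UTC.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value of a signed 32-bit integer


def to_int32(value: int) -> int:
    """Wrap an integer the way a signed 32-bit two's-complement counter would."""
    return (value + 2**31) % 2**32 - 2**31


def utc_from_seconds(seconds: int) -> datetime:
    """Convert seconds since the Epoch (possibly negative) to a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)


# Last moment representable in a signed 32-bit counter.
last_moment = utc_from_seconds(INT32_MAX)  # 2038-01-19 03:14:07 UTC

# One second later the simulated counter wraps to a large negative number.
after_overflow = utc_from_seconds(to_int32(INT32_MAX + 1))  # 1901-12-13 20:45:52 UTC

# Approximate range of a signed 64-bit counter, in years of 365.25 days.
years_64bit = (2**63 - 1) / (365.25 * 24 * 3600)

if __name__ == "__main__":
    print(last_moment.isoformat())
    print(after_overflow.isoformat())
    print(f"{years_64bit:.3e} years")
```

The 64-bit range comes out on the order of hundreds of billions of years, which is why switching to 64-bit time values is regarded as a sufficient remedy.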

By 1971, UNIX was already a full-fledged operating system, and Bell Labs even staked out the UNIX trademark for itself. In the same year, UNIX was rewritten to run on the more powerful PDP-11 computer, and it was in this year that the first official version of UNIX (also called the First Edition) was released.

In parallel with the development of Unics/UNIX, Ken Thompson and Dennis Ritchie, starting in 1969, developed a new language, B, based on the BCPL language, which in turn can be considered a descendant of Algol-60. Ritchie proposed rewriting UNIX in B, which was portable, albeit interpreted, and he then continued to adapt the language to new needs. In 1972 the Second Edition of UNIX came out, written almost entirely in B with only a fairly small module of about 1000 lines left in assembler, so porting UNIX to other computers was now relatively easy. This is how UNIX became portable.

Ken Thompson and Dennis Ritchie

The B language then evolved along with UNIX until it gave rise to C, one of the best-known programming languages, which today is as widely maligned as it is praised as an ideal. In 1973 the Third Edition of UNIX was released with a built-in C compiler, and UNIX is considered to have been completely rewritten in C starting with the 5th version, which appeared in 1974. Incidentally, it was in UNIX in 1973 that the concept of pipes appeared.

Beginning in 1974-1975, UNIX started to spread outside Bell Labs. Thompson and Ritchie published a description of UNIX in Communications of the ACM, and AT&T provided UNIX to educational institutions as a teaching tool. In 1976 UNIX was, one might say, ported to another system for the first time, the Interdata 8/32 computer. In addition, 1975 saw the release of the 6th version of UNIX, starting from which various implementations of this operating system appeared.

The UNIX operating system was so successful that starting in the late 70s, other developers began to make similar systems. Let's now switch from the original UNIX to its clones and see what other operating systems have come out of it.

The advent of BSD

The spread of this operating system was greatly helped by American officials who, back in 1956, before UNIX was even born, imposed restrictions on AT&T, the owner of Bell Labs. At that time the Department of Justice forced AT&T to sign an agreement prohibiting the company from engaging in activities unrelated to telephone and telegraph networks and equipment. By the 1970s, however, AT&T had realized what a successful project UNIX had become and wanted to make it commercial. To get officials to allow this, AT&T donated the UNIX sources to some American universities.

One of the universities with access to the source code was the University of California at Berkeley, and when someone else's sources are at hand, there is an inevitable urge to adjust something in the program to suit oneself, especially since the license did not prohibit this. Thus, a few years later (in 1978), the first UNIX-compatible system not made by AT&T appeared. It was BSD UNIX.

UC Berkeley

BSD is short for Berkeley Software Distribution, a system for distributing software in source form under a very permissive license. The BSD license was created precisely to distribute the new UNIX-compatible system. It allows reuse of the source code distributed under it and, unlike the GPL (which did not yet exist), imposes no restrictions on derivative programs. It is also very short and is not loaded with tedious legal terms.

The first version of BSD (1BSD) was more of an add-on to the original UNIX version 6 than a standalone system. 1BSD added a Pascal compiler and the text editor ex. The second version of BSD, released in 1979, included such well-known programs as vi and the C shell.

Since the advent of BSD UNIX, the number of UNIX compatible systems has grown exponentially. Already from BSD UNIX, separate branches of operating systems began to sprout, different operating systems exchanged code with each other, the interweaving became quite confusing, so in the future we will not dwell on each version of all UNIX systems, but let's see how the most famous of them appeared.

Perhaps the best-known direct descendants of BSD UNIX are FreeBSD, OpenBSD and, to a lesser extent, NetBSD. They are all descended from 386BSD, released in 1992. As the name suggests, 386BSD was a port of BSD UNIX to the Intel 80386 processor. This system was also created by alumni of the University of California at Berkeley. The authors felt that the UNIX source code received from AT&T had been modified enough for the AT&T license to be disregarded; AT&T itself did not think so, and litigation followed around this operating system. Judging by the fact that 386BSD became the parent of many other operating systems, everything ended well for it.

The FreeBSD project (which at first had no name of its own) began as a set of patches for 386BSD. These patches were not accepted for some reason, and in 1993, when it became clear that 386BSD would no longer be developed, the project turned toward creating a full-fledged operating system, which was named FreeBSD.

Beastie. FreeBSD Mascot

At the same time, the 386BSD developers themselves created a new project, NetBSD, from which OpenBSD in turn branched off. As you can see, the result is a rather sprawling tree of operating systems. The goal of the NetBSD project was to create a UNIX system that could run on as many architectures as possible, that is, to achieve maximum portability. Even NetBSD drivers are meant to be cross-platform.

NetBSD Logo

Solaris

However, the first system to spin off from BSD was SunOS, the brainchild, as the name suggests, of Sun Microsystems, a company that unfortunately no longer exists. This happened in 1983. SunOS was the operating system shipped with computers built by Sun itself. Strictly speaking, Sun had released the Sun UNIX operating system a year earlier, in 1982; it was based on the Unisoft Unix v7 codebase (Unisoft, founded in 1981, was a company that ported Unix to various hardware), whereas SunOS 1.0 was based on 4.1BSD code. SunOS was updated regularly until 1994, when version 4.1.4 was released, and it was then renamed Solaris 2. Where did the 2 come from? The story is a little confusing, because the name Solaris was first applied to SunOS versions 4.1.1 through 4.1.4, developed from 1990 to 1994. Think of it as a rebranding that only took hold with Solaris 2. Solaris 2.1, 2.2 and so on up to 2.6 then appeared through 1997, and in 1998, instead of Solaris 2.7, plain Solaris 7 was released, after which only that number kept increasing. At the moment, the latest version of Solaris is 11, released on November 9, 2011.

OpenSolaris Logo

The history of Solaris is also quite complicated. Until 2005 Solaris was a fully commercial operating system, but in 2005 Sun decided to open part of the Solaris 10 source code and create the OpenSolaris project. In addition, while Sun was still alive, Solaris 10 was free to use, with official support available for purchase. Then, in early 2010, when Oracle took over Sun, it made Solaris 10 a paid system. Fortunately, Oracle has not been able to kill OpenSolaris yet.

Linux. Where would we be without it?

Now it is time to talk about the most famous implementation of UNIX - Linux. The history of Linux is remarkable in that three interesting projects converged in it at once. But before talking about the creator of Linux, Linus Torvalds, we need to mention two more programmers. One of them, Andrew Tanenbaum, pushed Linus to create Linux without knowing it, and the other, Richard Stallman, wrote the tools Linus used to create his operating system.

Andrew Tanenbaum is a professor at the Free University of Amsterdam who works primarily on operating system development. Together with Albert Woodhull he wrote the well-known book Operating Systems: Design and Implementation, which inspired Torvalds to start writing Linux. The book deals with a UNIX-like system called Minix. Unfortunately, for a long time Tanenbaum regarded Minix only as a project for learning how to create operating systems, not as a full-fledged working OS. The Minix sources came under a rather restrictive license: you could study the code but could not distribute your own modified versions of Minix, and for a long time the author himself did not want to apply the patches that were sent to him.

Andrew Tanenbaum

The first version of Minix came out with the first edition of the book in 1987; the second and third versions came out with the corresponding later editions. The third version of Minix, released in 2005, can be used both as a standalone operating system for a computer (there are LiveCD versions of Minix that do not require installation on a hard drive) and as an embedded operating system for microcontrollers. The latest version, Minix 3.2.0, was released in July 2011.

Now let's turn to Richard Stallman. Lately he has been perceived only as a promoter of free software, although many well-known programs exist thanks to him, and at one time his project made Torvalds's life much easier. Most interesting of all, Linus and Richard approached the creation of an operating system from opposite ends, and in the end the projects merged into GNU / Linux. Some explanation is needed here about what GNU is and where it came from.

Richard Stallman

One could talk about Stallman for quite some time: for example, he received an honors degree in physics from Harvard University. Stallman also worked at the Massachusetts Institute of Technology, where in the 1970s he began writing his famous EMACS editor. The editor's source code was available to everyone, which was nothing unusual at MIT, where for a long time a kind of friendly anarchy reigned, or, as Steven Levy, author of the wonderful book “Hackers: Heroes of the Computer Revolution”, called it, the “hacker ethic”. A little later, however, MIT began to take care of computer security: users were given passwords, and unauthorized users could not access the computer. Stallman was sharply against this practice. He wrote a program that let anyone find out any user's password, and he advocated leaving the password blank. For example, he sent users messages like this: “I see that you have chosen the password [such and such]. I suggest that you switch to the password "carriage return". It's much easier to type, and it's in line with the principle that there shouldn't be passwords here.” But his efforts came to nothing. Moreover, the new people who came to MIT had already begun to care about the rights to their programs, about copyright, and similar abominations.

Stallman later said (quoting from the same book by Levy): “I can't believe that software should have owners. What happened sabotaged humanity as a whole. It prevented people from getting the most out of the programs.” Or here is another quote from him: “The machines started to break down, and there was no one to fix them. Nobody made the necessary changes in the software. The non-hackers reacted to this simply: they began to use purchased commercial systems, bringing fascism and licensing agreements with them.”

As a result, Richard Stallman left MIT and decided to create his own free implementation of a UNIX-compatible operating system. So on September 27, 1983, the GNU project appeared; the name stands for "GNU's Not UNIX". The first program belonging to GNU was EMACS. Within the GNU project, in 1989, its own license was developed, the GNU General Public License (GNU GPL), which obliges authors of programs based on source code distributed under this license to release their source code under the GPL as well.

Until 1990, various software for the future operating system was written within GNU (not only by Stallman), but this OS had no kernel of its own. Work on a kernel began only in 1990 in a project called GNU Hurd, but it never took off; its last version was released in 2009. What did take off was Linux, which we have finally reached.

And here the Finnish lad Linus Torvalds enters the story. While studying at the University of Helsinki, Linus took courses on the C language and the UNIX system, and in anticipation of them he bought that very book by Tanenbaum describing Minix. The book only described it, though: Minix itself had to be bought separately on 16 floppy disks, and at the time it cost $169 (alas, Finland had no Gorbushka market of ours then, but what can you do, savages 🙂). In addition, Torvalds had to buy a computer with an 80386 processor on credit for $3500, because until then he had only an old computer with a 68008 processor, on which Minix could not run (fortunately, once he had made the first version of Linux, grateful users chipped in and paid off his computer loan).

Linus Torvalds

Torvalds generally liked Minix, but he gradually came to understand its limitations and shortcomings. He was especially annoyed by the terminal emulation program that came with the operating system. So he decided to write his own terminal emulator and, along the way, to learn how the 386 processor works. Torvalds wrote the emulator at a low level, that is, he started from the BIOS boot loader. Gradually the emulator acquired new features; then, in order to download files, Linus had to write a floppy disk driver and a file system driver, and off it went. This is how the Linux operating system came into being (at that time it did not yet have a name).

When the operating system had more or less taken shape, the first program Linus ran on it was bash. It would be more accurate to say that he tweaked his operating system until bash could finally work. After that he gradually began to run other programs under his operating system. And the operating system was not supposed to be called Linux at all. Here is a quote from Torvalds' autobiography, published under the title "Just for Fun": "Inwardly, I called it Linux. Honestly, I never intended to release it under the name Linux, because it seemed too immodest to me. What name did I prepare for the final version? Freax. (Get it? Freaks - fans - with the x from Unix at the end.)"

On August 25, 1991, the following historic message appeared in the comp.os.minix conference: “Hello everybody out there using minix - I'm doing a (free) operating system (just a hobby, won't be big and professional like gnu) for 386(486) AT clones. This has been brewing since april, and is starting to get ready. I'd like any feedback on things people like/dislike in minix, as my OS resembles it somewhat (same physical layout of the file-system (due to practical reasons) among other things). I've currently ported bash(1.08) and gcc(1.40), and things seem to work. This implies that I'll get something practical within a few months, and I'd like to know what features most people would want. Any suggestions are welcome, but I won't promise I'll implement them :-)”

Note that GNU and the gcc program are already mentioned here (at that time the abbreviation stood for GNU C Compiler). And recall Stallman and his GNU, who had started developing an operating system from the other end. At last the merger happened. This is why Stallman takes offense when the operating system is called simply Linux rather than GNU / Linux: Linux is, strictly, just the kernel, and many of the shells and utilities around it were taken from the GNU project.

On September 17, 1991, Linus Torvalds first posted his operating system, then at version 0.01, to a public FTP server. Since then, all progressive mankind has celebrated this day as the birthday of Linux. The particularly impatient start celebrating on August 25, the day Linus admitted in the conference that he was writing an OS. Linux development then went on, and the name Linux took hold, because the address where the operating system was published looked like ftp.funet.fi/pub/OS/Linux. The fact is that Ari Lemmke, the teacher who allocated Linus space on the server, thought that Freax did not look very presentable, and he named the directory “Linux”, a blend of the author's name and the “x” at the end of UNIX.

Tux. Linux logo

There is also this point: although Torvalds wrote Linux under the influence of Minix, the two differ fundamentally in design. Tanenbaum is a proponent of microkernel operating systems, in which the operating system has a small kernel with few functions, while all the drivers and services of the operating system run as separate, independent modules. Linux has a monolithic kernel that includes many operating system features, so under Linux, if you need some special feature, you may have to recompile the kernel with the necessary changes. On the one hand, the microkernel architecture has advantages: reliability and simplicity. On the other hand, if the microkernel is designed carelessly, a monolithic kernel will run faster, since it does not need to exchange large amounts of data with separate modules. In 1992, after Linux appeared, a dispute broke out in the comp.os.minix conference between Torvalds and Tanenbaum, as well as their supporters, over which architecture is better, microkernel or monolithic. Tanenbaum argued that the microkernel architecture was the future and that Linux was obsolete by the time it came out. Almost 20 years have passed since that day... By the way, GNU Hurd, which was supposed to become the kernel of the GNU operating system, was also developed as a microkernel.

Mobile Linux

So, since 1991, Linux has been developing steadily, and although its share on ordinary users' computers is still small, it has long been popular on servers and supercomputers, where Windows is trying to carve off part of its share. In addition, Linux now holds a strong position on phones and tablets, because Android is also Linux.

Android Logo

The history of Android began with Android Inc, which appeared in 2003 and was apparently engaged in developing mobile applications (what exactly the company worked on in its first years is still not widely publicized). Less than two years later, Android Inc was taken over by Google. No official details could be found about what exactly the developers of Android Inc were doing before the takeover, although as early as 2005, after Google bought the company, it was rumored that they were developing a new operating system for phones. The first release of Android, however, took place on October 22, 2008, after which new versions began to appear regularly. One peculiarity of Android's development is that the system came under attack over allegedly infringed patents, and the legal status of its Java implementation is unclear, but let's not go into these non-technical squabbles.

But Android is not the only mobile representative of Linux; there is also the MeeGo operating system. While Android is backed by a corporation as powerful as Google, MeeGo has no single strong patron. It is developed by the community under the auspices of The Linux Foundation, which is supported by companies such as Intel, Nokia, AMD, Novell, ASUS, Acer, MSI and others. At the moment most of the help comes from Intel, which is not surprising, since the MeeGo project itself grew out of the Moblin project, which Intel initiated. Moblin is a Linux distribution intended for portable devices built on the Intel Atom processor. Let's also mention one more mobile Linux, Openmoko. Linux is trying quite briskly to gain a foothold on phones and tablets, and Google has taken Android seriously; the prospects for other mobile versions of Linux are still vague.

As you can see, Linux can now run on many systems built on different processors, yet in the early 1990s Torvalds did not believe that Linux could be ported to anything other than the 386 processor.

Mac OS X

Now let's turn to another operating system that is also UNIX-compatible - Mac OS X. The first versions of Mac OS, up to version 9, were not based on UNIX, so we will not dwell on them. The part that interests us began after Steve Jobs was ousted from Apple in 1985, after which he founded NeXT, a company that developed computers and software for them. NeXT hired the programmer Avetis Tevanian, who had previously worked on the Mach microkernel for a UNIX-compatible operating system being developed at Carnegie Mellon University. The Mach kernel was meant to replace the BSD UNIX kernel.

NeXT company logo

Avetis Tevanian led the team developing a new UNIX-compatible operating system called NeXTSTEP. So as not to reinvent the wheel, NeXTSTEP was based on the same Mach kernel. In terms of programming, NeXTSTEP, unlike many other operating systems, was object-oriented; a huge role in it was played by the Objective-C programming language, which is now widely used in Mac OS X. The first version of NeXTSTEP was released in 1989. Although NeXTSTEP was originally designed for Motorola 68000 processors, in the early 1990s it was ported to the 80386 and 80486 processors. Things were not going well for NeXT, and in 1996 Apple offered to buy NeXT from Jobs in order to use NeXTSTEP instead of Mac OS. Here one could also recount the rivalry between the NeXTSTEP and BeOS operating systems, which ended in victory for NeXTSTEP, but we will not lengthen an already long story. Besides, BeOS is not related to UNIX in any way, so it is of no interest to us at the moment, although the operating system itself was very interesting, and it is a pity that its development was cut short.

A year later, when Jobs returned to Apple, he continued the policy of adapting NeXTSTEP to Apple computers, and a few years later this operating system was ported to PowerPC and Intel processors. Thus the server version of Mac OS X (Mac OS X Server 1.0) was released in 1999, and in 2001 the operating system for end users, Mac OS X (10.0), was released.

Later, based on Mac OS X, an operating system was developed for iPhone phones, which was called Apple iOS. The first version of iOS was released in 2007. The iPad also runs on the same operating system.

Conclusion

After all of the above, you may ask: which operating systems can be considered UNIX? There is no single answer. Formally, there is the Single UNIX Specification, a standard that an operating system must satisfy in order to be called UNIX. It should not be confused with the POSIX standard, which a non-UNIX-like operating system can also meet. By the way, the name POSIX was proposed by the same Richard Stallman, and formally the POSIX standard is numbered ISO/IEC 9945. Certification against the Single UNIX Specification is expensive and time-consuming, so few operating systems have gone through it. Those that have include Mac OS X, Solaris, SCO, and a few lesser-known systems. Neither Linux nor the *BSD systems are among them, yet no one doubts their "Unixness". For this reason the programmer and writer Eric Raymond, for example, proposed two more criteria for deciding whether an operating system is UNIX-like. The first is "inheritance" of source code from the original UNIX developed at AT&T's Bell Labs; BSD systems fall under this criterion. The second is being "UNIX in functionality": operating systems that behave close to what the UNIX specification describes but have not received formal certification and, moreover, are unrelated to the source code of the original UNIX. This includes Linux, Minix, and QNX.

Perhaps we should stop here, since the text has already grown long enough. This review mainly covered the history of the best-known operating systems - the BSD variants, Linux, Mac OS X, and Solaris. Some other UNIX systems, such as QNX, Plan 9, Plan B and a few more, were left out. Who knows, maybe we will return to them in the future.

MINISTRY OF EDUCATION AND SCIENCE OF THE RUSSIAN FEDERATION

FEDERAL AGENCY FOR EDUCATION

STATE EDUCATIONAL INSTITUTION OF HIGHER PROFESSIONAL EDUCATION

Taganrog State Radio Engineering University

Discipline "Informatics"

"UNIX operating system"

Completed by: Orda-Zhigulina D.V., gr. E-25

Checked: Vishnevetsky V.Yu.

Taganrog 2006

Introduction

What is Unix

Where to get free Unix

Main part. (Description of Unix)

1. Basic concepts of Unix

2. File system

2.1 File types

3. Command interpreter

4. UNIX kernel

4.1 General organization of the traditional UNIX kernel

4.2 Main functions of the kernel

4.3 Principles of interaction with the kernel

4.4 Principles of interrupt handling

5. I/O control

5.1 Principles of system I/O buffering

5.2 System calls for I/O control

6. Interfaces and entry points of drivers

6.1 Block drivers

6.2 Character drivers

6.3 Stream drivers

7. Commands and utilities

7.1 Command organization in UNIX OS

7.2 I/O redirection and piping

7.3 Built-in, library and user commands

7.4 Command language programming

8. GUI tools

8.1 User IDs and user groups

8.2 File protection

8.3 Promising operating systems supporting the UNIX OS environment

Conclusion

Main differences between Unix and other OS

Applications of Unix

Introduction

What is Unix

The term Unix and the not-quite-equivalent UNIX are used with different meanings. Let's start with the second term, as the simpler one. In a nutshell, UNIX (in that form) is a registered trademark that originally belonged to the AT&T Corporation, changed hands over the years, and is now the property of an organization called the Open Group. The right to use the name UNIX is earned through a kind of thorough vetting: passing tests of conformance to the specification of a reference OS (the Single UNIX Specification). This procedure is not only complicated but also very expensive, so only a few current operating systems have undergone it, and all of them are proprietary, that is, they are the property of particular corporations.

Among the corporations that have earned the right to the name UNIX by the sweat of their developers and testers and the blood (more precisely, the dollars) of their owners, we can name the following:

Sun, with its SunOS (better known to the world as Solaris);

IBM, which developed the AIX system;

Hewlett-Packard, the owner of the HP-UX system;

SGI, with its IRIX operating system.

In addition, the proper UNIX name applies to systems:

Tru64 Unix, developed by DEC, which after DEC's liquidation passed to Compaq and now, together with the latter, has become the property of the same Hewlett-Packard;

UnixWare is owned by SCO (a product of the merger of Caldera and Santa Cruz Operation).

Being proprietary, all these systems are sold for a lot of money (even by American standards). However, this is not the main obstacle to the spread of UNIX proper. Their common feature is that they are tied to particular hardware platforms: AIX runs on IBM servers and workstations with Power processors; HP-UX runs on HP's own HP-PA (Precision Architecture) machines; IRIX runs on SGI graphics workstations with MIPS processors; Tru64 Unix is designed for Alpha processors (now, unfortunately, defunct). Only UnixWare targets the "democratic" PC platform, while Solaris exists in versions for two architectures: Sun's own Sparc and the same PC. That, however, did little for their prevalence, because of relatively weak support for new PC peripherals.

Thus, UNIX is primarily a legal concept. The term Unix, by contrast, has a technological interpretation. It is the common name used in the IT industry for the entire family of operating systems that either derive from the "original" UNIX of AT&T or reproduce its functions "from scratch". This includes free operating systems such as Linux, FreeBSD and the other BSDs, which have never been tested for conformance to the Single UNIX Specification. That is why they are often called Unix-like.

The term "POSIX-compliant systems", which is close in meaning, is also widely used; it unites the family of operating systems that conform to the set of standards of the same name. The POSIX (Portable Operating System Interface based on uniX) standards themselves were developed on the basis of practices adopted in Unix systems, and therefore the latter are all, by definition, POSIX-compliant. The two terms are not complete synonyms, however: compatibility with the POSIX standards is also claimed by operating systems that are only indirectly related to Unix (QNX, Syllable) or not related to it at all (up to Windows NT/2000/XP).

To clarify the relationship between UNIX, Unix and POSIX, we have to delve a little into history. The history of this question is discussed in detail in the corresponding chapter of the book "Free Unix: Linux, FreeBSD and Others" (forthcoming from BHV-Petersburg) and in articles on the history of Linux and BSD systems.

The Unix operating system (more precisely, its first version) was developed by employees of Bell Labs (a division of AT&T) in 1969-1971. Its first authors, Ken Thompson and Dennis Ritchie, did it solely for their own purposes, in particular so that they could enjoy their favorite game, Space Travel. For a number of legal reasons, the company itself could not use it as a commercial product. Practical applications for Unix were nevertheless found quite quickly. First, it was used at Bell Labs to prepare various kinds of technical (including patent) documentation. Second, the UUCP (Unix to Unix Copy Program) communication system was based on Unix.

Another area where Unix was used in the 1970s and early 1980s turned out to be quite unusual: it was distributed in source form among scientific institutions working in the field of Computer Science. The purpose of this distribution (which was not completely free in the current sense but in practice turned out to be very liberal) was education and research in that field of knowledge.

The most famous result is the BSD Unix system, created at the University of California, Berkeley. Gradually freeing itself from the proprietary code of the original Unix, it eventually, after dramatic ups and downs, gave rise to the modern free BSD systems - FreeBSD, NetBSD and others.

One of the most important results of the work of university hackers was the introduction (in 1983) of Unix support for the TCP/IP protocol, on which the then ARPANET was based (and which became the foundation of the modern Internet). This was a prerequisite for the dominance of Unix in all areas related to the World Wide Web, and it became the next practical application of this family of operating systems. By that time one could no longer speak of a single Unix, because, as mentioned earlier, it had split into two branches: one originating from the original UNIX (which over time received the name System V) and the system of Berkeley origin. System V, in turn, formed the basis of the various proprietary UNIXes that actually had the legal right to claim this name.

The latter circumstance - the branching of a once single OS into several lines that were gradually losing compatibility - came into conflict with one of the cornerstones of the Unix ideology: the portability of the system between different platforms, and of its applications from one Unix system to another. This prompted the work of various standards organizations, which ended with the creation of the POSIX set of standards mentioned earlier.

It was the POSIX standards that Linus Torvalds relied on when creating his operating system, Linux, "from scratch" (that is, without using pre-existing code). Having quickly and successfully mastered the traditional application areas of Unix systems (software development, communications, the Internet), Linux eventually opened up a new one for them: general-purpose desktop platforms. This is what made it popular with the general public - a popularity that surpasses that of all other Unix systems combined, both proprietary and free.

What follows is about working on Unix systems in the broadest sense of the word, without regard to trademarks and other legal matters. The main examples of working methods, however, will be taken from the free implementations: Linux, to a lesser extent FreeBSD, and even less from the other BSD systems.

Where to get free Unix?

FreeBSD Database - www.freebsd.org;

You can go to www.sco.com

Main part. (Description of Unix)

1. Basic concepts of Unix

Unix is based on two basic concepts: "process" and "file". Processes are the dynamic side of the system, its subjects; files are the static side, the objects that processes act on. Almost the entire interface through which processes interact with the kernel and with each other looks like writing and reading files, although signals, shared memory and semaphores must be added to the picture.

Processes can be roughly divided into two types: tasks and daemons. A task is a process that does its work, trying to finish it as soon as possible and exit. A daemon waits for the events it needs to process, processes the events that have occurred, and waits again; it usually ends at the order of another process, most often when the user kills it with the command "kill process_number". In this sense, an interactive task that processes user input is more like a daemon than a task.
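As a rough illustration of the "kill process_number" idea, the sketch below starts a child process that only waits and then ends it with SIGTERM, the signal the kill command sends by default. The function name and the sleeping child are assumptions made for the example; the code is in Python and assumes a POSIX system.

```python
import signal
import subprocess
import sys

def start_and_kill():
    """Start a child that only waits, then end it the way `kill PID` would."""
    # The child stands in for a daemon: it does nothing but wait.
    child = subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(60)"]
    )
    # Same effect as typing `kill <pid>`: the default signal is SIGTERM.
    child.send_signal(signal.SIGTERM)
    # On POSIX, a negative return code means "terminated by that signal".
    return child.wait(timeout=10)

if __name__ == "__main__":
    code = start_and_kill()
    print("child ended by signal", -code)
```

A real daemon would usually install a handler for SIGTERM so that it can clean up before exiting; the child here simply takes the default action, which is to terminate.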

2. File system

In old Unix systems a file name was limited to 14 characters; in newer ones this restriction has been removed. Besides the file name, a directory entry contains the file's inode identifier - an integer that determines the number of the block in which the file's attributes are recorded. Among them:

the user number of the file's owner;

the group number;

the number of references (links) to the file (see below);

the dates and times of creation, last modification, and last access;

the access attributes.

The access attributes contain the file type (see below), the attributes for changing rights at startup (see below), and the permissions to read, write, and execute the file for the owner, the members of the group, and everyone else. The right to delete a file is determined by the right to write to the directory that contains it.
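The attributes listed above can be inspected from a program. The following is a minimal sketch in Python, assuming a POSIX system; the function name `describe` and the dictionary keys are invented for the example. Note that what is loosely called the creation time corresponds here to `st_ctime`, which on Unix reports the time of the last inode change.

```python
import os
import stat
import tempfile
import time

def describe(path):
    """Return the inode attributes of `path` as a plain dictionary."""
    st = os.stat(path)
    return {
        "inode": st.st_ino,          # the integer stored next to the name
        "owner_uid": st.st_uid,      # user number of the owner
        "group_gid": st.st_gid,      # group number
        "links": st.st_nlink,        # number of names referring to the file
        # File type plus rwx rights for owner, group and others.
        "type_and_rights": stat.filemode(st.st_mode),
        "modified": time.ctime(st.st_mtime),
        "accessed": time.ctime(st.st_atime),
        "inode_changed": time.ctime(st.st_ctime),  # on Unix: last inode change
    }

if __name__ == "__main__":
    with tempfile.NamedTemporaryFile() as f:
        for key, value in describe(f.name).items():
            print(f"{key:16} {value}")
```

The same fields are what `ls -li` prints for a file; the directory itself stores only the name and the inode number.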

Each file (but not a directory) can be known under several names, but all of them must be on the same partition. All links to a file are equal; the file is deleted when the last link to it is removed. An open file (for reading and/or writing) counts as one more reference. This is why many programs that open a temporary file delete it right away: if they crash, the operating system closes the files opened by the process, and the temporary file is then removed by the operating system itself.
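Both behaviours can be observed with a few system calls. The sketch below is in Python and assumes a POSIX file system that supports hard links; the function names and file names are made up for the example.

```python
import os
import tempfile

def link_count_demo():
    """Give one file a second name and watch the link count change."""
    with tempfile.TemporaryDirectory() as d:
        first = os.path.join(d, "first_name")
        second = os.path.join(d, "second_name")
        with open(first, "w") as f:
            f.write("same data\n")
        before = os.stat(first).st_nlink
        os.link(first, second)            # second name, same partition
        after = os.stat(first).st_nlink
        same_inode = os.stat(first).st_ino == os.stat(second).st_ino
        os.unlink(first)                  # remove one of the two equal names
        with open(second) as f:
            survived = f.read()           # data is still reachable
        return before, after, same_inode, survived

def unlink_while_open():
    """The temporary-file idiom: delete the name, keep using the open file."""
    fd, path = tempfile.mkstemp()
    os.unlink(path)                       # no name is left in any directory
    os.write(fd, b"scratch")
    os.lseek(fd, 0, os.SEEK_SET)
    data = os.read(fd, 100)
    still_named = os.path.exists(path)
    os.close(fd)                          # only now is the space released
    return data, still_named

if __name__ == "__main__":
    print(link_count_demo())
    print(unlink_while_open())
```

In the second function the process never has to clean up explicitly: once the descriptor is closed, whether by the program or by the kernel after a crash, the last reference disappears and the file goes with it.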

The file system has one more interesting feature: if, after a file is created, data is written to it not contiguously but with large gaps, no disk space is allocated for those gaps. Thus the total size of the files in a partition can exceed the size of the partition, and deleting such a file frees less space than its size.
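A file with such gaps (usually called a sparse file) is easy to produce by seeking past the end before writing. The sketch below is in Python; the function name and the 16 MB gap are arbitrary choices for the example, and the conversion of `st_blocks` assumes 512-byte units, as on Linux. Whether the gap really takes no space depends on the file system in use.

```python
import os
import tempfile

def sparse_file_demo(gap=16 * 1024 * 1024):
    """Write 4 bytes, skip `gap` bytes, write 4 more; compare size and usage."""
    with tempfile.NamedTemporaryFile() as f:
        f.write(b"head")
        f.seek(gap, os.SEEK_CUR)          # jump forward without writing
        f.write(b"tail")
        f.flush()
        st = os.stat(f.name)
        logical_size = st.st_size         # what `ls -l` reports
        allocated = st.st_blocks * 512    # assumption: 512-byte units (Linux)
        return logical_size, allocated

if __name__ == "__main__":
    size, used = sparse_file_demo()
    print(f"logical size: {size} bytes, allocated on disk: {used} bytes")
```

On a file system that supports sparse files, the allocated figure should come out far smaller than the logical size; on one that does not, the two will be close.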

2.1 File types

Files are of the following types:

regular file with direct (random) access;

directory (a file containing the names and identifiers of other files);

symbolic link (a string with the name of another file);

block device (disk or magnetic tape);

character (serial) device (terminals, serial and parallel ports; disks and tapes also have a character device interface);

named pipe.

Special files designed for working with devices are usually located in the "/dev" directory. Here are some of them (in FreeBSD naming):

tty* - terminals, including:

ttyv - virtual console;

ttyd - dial-in terminal (usually a serial port);

cuaa - dial-out line;

ttyp - network pseudo-terminal;

tty - the terminal with which the task is associated;

wd* - hard drives and their subdivisions, including:

wd - hard drive;

wds - partition of this disk (here called a "slice");

wds - section of a partition;

fd - floppy disk;

rwd*, rfd* - the same as wd* and fd*, but with sequential (character) access.

Sometimes a program launched by a user needs to run not with the rights of that user but with some other rights. In this case the rights-change attribute is set, and the program runs with the rights of the user who owns the program. (As an example, consider a program that reads a file of questions and answers and uses it to test the student who launched it. The program must have the right to read the file with the answers, but the student who launched it must not.) This is how, for example, the passwd program works, with which users can change their passwords. A user can run passwd, and passwd can make changes to the system database, but the user cannot.
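On current systems this rights-change attribute is known as the set-user-ID (setuid) bit, and it is visible in the file's mode. The Python sketch below checks for it; the function names are invented for the example, and the path /usr/bin/passwd is an assumption about where passwd is installed, which varies between systems.

```python
import os
import stat

def has_setuid(mode):
    """True if the set-user-ID bit is present in an st_mode value."""
    return bool(mode & stat.S_ISUID)

def report(path):
    """One line per file: rights string, owner and setuid flag."""
    st = os.stat(path)
    flag = "setuid" if has_setuid(st.st_mode) else "plain"
    return f"{stat.filemode(st.st_mode)} uid={st.st_uid} {flag} {path}"

if __name__ == "__main__":
    # Assumed locations; adjust for the system at hand.
    for candidate in ("/usr/bin/passwd", "/bin/ls"):
        if os.path.exists(candidate):
            print(report(candidate))
```

In the rights string the bit shows up as an "s" in place of the owner's "x", for example "-rwsr-xr-x".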

Unlike DOS, where a fully qualified file name looks like "drive:pathname", and RISC-OS, where it looks like "-filesystem-drive:$.path.name" (which, generally speaking, has its advantages), Unix uses a uniform notation of the form "/path/name". The root is that of the partition from which the Unix kernel was loaded. If a different partition needs to be used (and the boot partition usually contains only what is needed to boot), the command `mount /dev/partitionfile dir` is used. Files and subdirectories that were previously in this directory become inaccessible until the partition is unmounted (naturally, all sensible people mount partitions on empty directories). Only the superuser has the right to mount and unmount.

At startup, each process can expect three files to be open for it already: standard input, stdin, on descriptor 0; standard output, stdout, on descriptor 1; and standard error output, stderr, on descriptor 2. At login, when the user enters a username and password and the shell is started, all three are directed to /dev/tty; later any of them can be redirected to any file.
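The sketch below shows the three descriptors from the point of view of a child process whose standard files have been redirected by its parent. It is written in Python and assumes a POSIX system; the child program and the function name are made up for the example. The child refers to its standard files only by number.

```python
import subprocess
import sys

# The child uses raw descriptor numbers: read from 0, write to 1 and 2.
CHILD = (
    "import os\n"
    "data = os.read(0, 100)\n"
    "os.write(1, b'out:' + data)\n"
    "os.write(2, b'err:' + data)\n"
)

def run_child(text):
    """Start the child with all three standard files redirected to pipes."""
    done = subprocess.run(
        [sys.executable, "-c", CHILD],
        input=text,            # becomes the child's descriptor 0
        capture_output=True,   # descriptors 1 and 2 are collected separately
        check=True,
    )
    return done.stdout, done.stderr

if __name__ == "__main__":
    print(run_child(b"hello"))
```

The child does not know or care whether its descriptors lead to a terminal, a file or a pipe, which is what makes redirection possible.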

3. Command interpreter

Unix almost always comes with two shells, sh (shell) and csh (a C-like shell). In addition to them, there are also bash (Bourne), ksh (Korn), and others. Without going into details, here are the general principles:

All commands, except those that change the current directory, set environment variables, or implement structured programming constructs, are external programs. These programs are usually located in the /bin and /usr/bin directories; system administration programs are in the /sbin and /usr/sbin directories.

A command consists of the name of the program to be started and its arguments. Arguments are separated from the command name and from each other by spaces and tabs. Some special characters are interpreted by the shell itself. The special characters include " ' ` \ ! $ ^ * ? | & ; < > and others.

Several commands can be given on one command line. Commands can be separated by ; (sequential execution), & (asynchronous, simultaneous execution), or | (a pipeline: the stdout of the first command is fed to the stdin of the second).
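What a shell does for `|` can be sketched with the underlying system calls. This is a simplified illustration in Python, not the code of any real shell; the names `run_pipeline` and `_exec_or_die` are invented, and error handling is minimal.

```python
import os

def _exec_or_die(argv):
    """Replace the current (child) process image; exit with 127 if that fails."""
    try:
        os.execvp(argv[0], argv)
    except OSError:
        os._exit(127)

def run_pipeline(left, right):
    """Run `left | right`; return the bytes that `right` wrote to its stdout."""
    link_r, link_w = os.pipe()   # left's stdout -> right's stdin
    out_r, out_w = os.pipe()     # right's stdout -> parent
    all_fds = (link_r, link_w, out_r, out_w)

    left_pid = os.fork()
    if left_pid == 0:
        os.dup2(link_w, 1)       # descriptor 1 now refers to the pipe
        for fd in all_fds:
            os.close(fd)
        _exec_or_die(left)

    right_pid = os.fork()
    if right_pid == 0:
        os.dup2(link_r, 0)       # descriptor 0 now refers to the pipe
        os.dup2(out_w, 1)
        for fd in all_fds:
            os.close(fd)
        _exec_or_die(right)

    # Parent: close the ends it does not use, or the readers never see EOF.
    for fd in (link_r, link_w, out_w):
        os.close(fd)
    chunks = []
    while True:
        chunk = os.read(out_r, 4096)
        if not chunk:
            break
        chunks.append(chunk)
    os.close(out_r)
    os.waitpid(left_pid, 0)
    os.waitpid(right_pid, 0)
    return b"".join(chunks)

if __name__ == "__main__":
    print(run_pipeline(["echo", "hello"], ["tr", "a-z", "A-Z"]))
```

Both commands are started before either finishes, so they run concurrently and the kernel's pipe buffer paces them.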

You can also take standard input from a file by including "<file" as one of the arguments, and send standard output to a file by including ">file" (the file will be zeroed out first) or ">>file" (the output will be written to the end of the file).
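The difference between overwriting and appending output redirection comes down to the flags used to open the target file. A minimal sketch in Python follows; `run_redirected` and `demo` are illustrative names, and the external `echo` command is assumed to be available.

```python
import os
import subprocess
import tempfile

def run_redirected(argv, path, append=False):
    """Run argv with stdout sent to `path`, truncating it or appending to it."""
    mode_flag = os.O_APPEND if append else os.O_TRUNC
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | mode_flag, 0o644)
    try:
        # The child inherits `fd` as its descriptor 1.
        return subprocess.run(argv, stdout=fd).returncode
    finally:
        os.close(fd)

def demo():
    """Return the file contents after an overwrite, an append, and another overwrite."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "out.txt")
        snapshots = []
        for argv, append in ((["echo", "one"], False),
                             (["echo", "two"], True),
                             (["echo", "three"], False)):
            run_redirected(argv, path, append)
            with open(path, "rb") as f:
                snapshots.append(f.read())
        return tuple(snapshots)

if __name__ == "__main__":
    print(demo())
```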

If you need information on any command, issue the command "man command_name". The text is displayed on the screen through the "more" program; to learn how to control it on your Unix, use the `man more` command.

4. UNIX kernel

Like any other multi-user operating system that protects users from each other and protects system data from any unprivileged user, UNIX has a protected kernel that manages the computer's resources and provides users with a basic set of services.

The convenience and efficiency of modern versions of the UNIX operating system does not mean that the entire system, including the kernel, is designed and structured in the best possible way. The UNIX OS has evolved over the years (it is the first operating system in history that continues to gain popularity at such a mature age - for more than 25 years). Naturally, the capabilities of the system grew, and, as often happens in large systems, the qualitative improvements in the structure of the UNIX OS did not keep pace with the growth of its capabilities.

As a result, the kernel of most modern commercial versions of the UNIX operating system is a large, not very well-structured monolith. For this reason, programming at the UNIX kernel level remains an art (except for the well-established and well-understood technology for developing external device drivers). This lack of engineering discipline in the organization of the UNIX kernel leaves many dissatisfied. Hence the desire to reproduce the UNIX OS environment completely on top of an entirely different system organization.

Because it is the most widespread, the UNIX System V kernel is the one most often discussed (it can be considered traditional).

4.1 General organization of the traditional UNIX kernel

One of the main achievements of the UNIX OS is its high portability. This means that the entire operating system, including its kernel, is relatively easy to move to different hardware platforms. All parts of the system except the kernel are completely machine independent. These components are neatly written in C, and porting them to a new platform (at least within the class of 32-bit computers) requires only recompiling the source code into the target computer's machine code.

Of course, the greatest problems are associated with the system kernel, which completely hides the specifics of the computer in use but itself depends on those specifics. Thanks to a thoughtful separation of the machine-dependent and machine-independent components of the kernel (from the point of view of operating system developers, this is apparently the highest achievement of the developers of the traditional UNIX kernel), the main part of the kernel does not depend on the architectural features of the target platform, is written entirely in C, and needs only recompilation to be ported to a new platform.

However, a relatively small part of the kernel is machine dependent and is written in a mixture of C and the target processor's assembly language. When the system is ported to a new platform, this part of the kernel must be rewritten in assembly language, taking into account the specific features of the target hardware. The machine-dependent parts of the kernel are well isolated from the main machine-independent part, and with a good understanding of the purpose of each machine-dependent component, rewriting the machine-specific part is mostly a technical task (although it requires high programming skill).

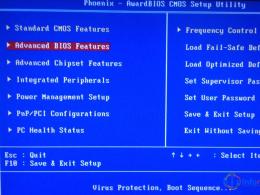

The machine-specific part of the traditional UNIX kernel includes the following components:

bootstrapping and initialization of the system at a low level (insofar as it depends on the features of the hardware);

primary processing of internal and external interrupts;

memory management (in the part that relates to the features of virtual memory hardware support);

process context switching between user and kernel modes;

the platform-specific parts of device drivers.

4.2 Main functions of the kernel

The main functions of the UNIX OS kernel include the following:

(a) System initialization - the bootstrapping function. The kernel provides a bootstrap facility that loads the full kernel into the computer's memory and starts it.

(b) Process and thread management - the function of creating, terminating and keeping track of existing processes and threads ("processes" running on shared virtual memory). Since UNIX is a multi-process operating system, the kernel provides for the sharing of processor time (or processors in multi-processor systems) and other computer resources between running processes to give the appearance that the processes are actually running in parallel.

(c) Memory management - the function of mapping the practically unlimited virtual memory of processes onto the computer's physical RAM, which has a limited size. The corresponding kernel component lets several processes share the same areas of RAM, using external memory as backing store.

(d) File management - a function that implements the abstraction of the file system - hierarchies of directories and files. UNIX file systems support several types of files. Some files may contain ASCII data, others will correspond to external devices. The file system stores object files, executable files, and so on. Files are usually stored on external storage devices; access to them is provided by means of the kernel. There are several types of file system organization in the UNIX world. Modern versions of the UNIX operating system simultaneously support most types of file systems.

(e) Communication facilities - a function that provides the ability to exchange data between processes running inside the same computer (IPC - Inter-Process Communication), between processes running on different nodes of a local or wide-area data network, and between processes and external device drivers.

(f) Programming interface - a function that provides access to the capabilities of the kernel from the side of user processes based on the mechanism of system calls, arranged in the form of a library of functions.
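As an illustration of item (b), the sketch below creates a process and waits for it to terminate. It uses Python's `os` module, whose `fork` and `waitpid` functions are thin wrappers over the system calls of the same names; the function name `run_in_child` is invented for this example.

```python
import os

def run_in_child(exit_code: int) -> int:
    """Create a child that exits with `exit_code`; return the code the parent observes."""
    pid = os.fork()
    if pid == 0:
        # Child: a separate process with its own copy of the address space.
        os._exit(exit_code)
    # Parent: block until the kernel reports the child's termination.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -1  # the child was terminated by a signal

if __name__ == "__main__":
    print("child exited with", run_in_child(7))
```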

4.3 Principles of interaction with the core

In any operating system, some mechanism is supported that allows user programs to access the services of the OS kernel. In the operating systems of the most famous Soviet computer BESM-6, the corresponding means of communication with the kernel were called extracodes, in the IBM operating systems they were called system macros, and so on. On UNIX, these tools are called system calls.

The name does not change the meaning: to access OS kernel functions, "special instructions" of the processor are used, whose execution causes a special kind of internal processor interrupt that switches the processor to kernel mode (in most modern operating systems this type of interrupt is called a trap). When processing such an interrupt (decoding it), the OS kernel recognizes that it is actually a request from the user program to perform certain actions, extracts the parameters of the call and processes it, and then performs a "return from interrupt", resuming normal execution of the user program.

It is clear that the specific mechanisms for raising internal interrupts initiated by the user program differ between hardware architectures. Since the UNIX OS strives to provide an environment in which user programs can be fully portable, an additional layer was required to hide the specifics of the particular mechanism for raising internal interrupts. This layer is provided by the so-called system call library.

For the user, the system call library is an ordinary library of pre-implemented functions of the C programming system. When programming in C, using any function from the system call library is no different from using any user-defined or library C function. However, every function of a particular system call library contains code that is, generally speaking, specific to the given hardware platform.
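That a system call looks like an ordinary function call can be seen by calling the C library's wrapper directly. The sketch below does this from Python through `ctypes`; it assumes a typical UNIX system where `ctypes.CDLL(None)` exposes the C library's symbols, and `getpid_via_libc` is an illustrative name.

```python
import ctypes
import os

# CDLL(None) asks the dynamic loader for the symbols already loaded into this
# process, which on typical UNIX systems include the C library.
libc = ctypes.CDLL(None)

def getpid_via_libc() -> int:
    """Call the C library's getpid() wrapper like any other C function."""
    return libc.getpid()

if __name__ == "__main__":
    # Both paths end in the same kernel service, so the results agree.
    print(getpid_via_libc(), os.getpid())
```

The platform-specific trap instruction is hidden inside the library function; the caller sees only a function name, arguments, and a return value.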

4.4 Principles of interrupt handling

Of course, the mechanism for handling internal and external interrupts used in operating systems depends mainly on what kind of hardware support for interrupt handling is provided by a particular hardware platform. Fortunately, by now (and for quite some time now) major computer manufacturers have de facto agreed on the basic interrupt mechanisms.

Speaking not very precisely or specifically, the essence of the mechanism adopted today is that each possible processor interrupt (whether internal or external) corresponds to some fixed address in physical RAM. At the moment when the processor is permitted to be interrupted because an internal or external interrupt request is present, control is transferred by hardware to the physical RAM cell with the corresponding address; the address of this cell is usually called the "interrupt vector" (requests for an internal interrupt, i.e. requests coming directly from the processor, are usually satisfied immediately).

The operating system's job is to place in the appropriate RAM cells the program code that performs the initial processing of the interrupt and initiates the full processing.

In essence, the UNIX operating system follows this general approach. The interrupt vector corresponding to an external interrupt, i.e. an interrupt from some external device, contains instructions that set the processor's execution level (the execution level determines which external interrupts the processor must respond to immediately) and jump to the full interrupt handler in the appropriate device driver. For an internal interrupt (for example, an interrupt initiated by the user program when a required page of virtual memory is missing, when an exception occurs in the user program, and so on) or a timer interrupt, the interrupt vector contains a jump to the corresponding UNIX kernel routine.
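The interplay of the vector table and the execution level can be imitated with a toy model. The Python sketch below is purely illustrative and does not reproduce any real kernel or hardware; the class `ToyProcessor`, the interrupt numbers, and the priorities are all invented.

```python
class ToyProcessor:
    """Toy model: a vector table plus an execution level that masks interrupts."""

    def __init__(self):
        self.vectors = {}   # interrupt number -> (priority, handler)
        self.level = 0      # interrupts with priority <= level are held back
        self.pending = []   # numbers of held interrupts
        self.log = []

    def install(self, number, priority, handler):
        """What the OS does at start-up: fill in the vector for one interrupt."""
        self.vectors[number] = (priority, handler)

    def raise_interrupt(self, number):
        priority, handler = self.vectors[number]
        if priority <= self.level:
            self.pending.append(number)   # masked: remember it for later
            return
        saved, self.level = self.level, priority   # raise the execution level
        try:
            handler(self)
        finally:
            self.level = saved                     # "return from interrupt"
        self._deliver_pending()

    def _deliver_pending(self):
        while True:
            ready = [n for n in self.pending if self.vectors[n][0] > self.level]
            if not ready:
                return
            number = max(ready, key=lambda n: self.vectors[n][0])
            self.pending.remove(number)
            self.raise_interrupt(number)

def disk_handler(cpu):
    cpu.log.append("disk start")
    cpu.raise_interrupt(2)        # a lower-priority device interrupts meanwhile
    cpu.log.append("disk end")

def terminal_handler(cpu):
    cpu.log.append("tty")
```

In this model a terminal interrupt that arrives while the higher-priority disk handler is running is held until the disk handler returns.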

5. I/O control

Traditionally, the UNIX OS distinguishes three types of I/O organization and, accordingly, three types of drivers. Block I/O is primarily intended for working with the directories and regular files of the file system, which at the basic level have a block structure. At the user level, it is now possible to work with files by mapping them directly to virtual memory segments. This feature is considered the top level of block I/O. At the lower level, block I/O is supported by block drivers. Block I/O is also supported by system buffering.

Character input/output is used for direct (without buffering) exchanges between the user's address space and the corresponding device. Kernel support common to all character drivers is to provide functions for transferring data between user and kernel address spaces.

Finally, stream I/O is similar to character I/O, but due to the possibility of including intermediate processing modules in the stream, it has much more flexibility.
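The user-level file mapping mentioned for block I/O can be tried with Python's standard `mmap` module, which wraps the corresponding system call. This is a minimal sketch; the function names `uppercase_in_place` and `demo` and the file name are invented for illustration.

```python
import mmap
import os
import tempfile

def uppercase_in_place(path: str) -> None:
    """Map a file into memory and modify it through ordinary memory access."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as view:   # length 0 maps the whole file
            view[:] = view[:].upper()            # no read() or write() calls
            view.flush()                         # ask for modified pages to be written back

def demo() -> bytes:
    """Create a small file, change it through a mapping, and read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "data.txt")
        with open(path, "wb") as f:
            f.write(b"mapped file\n")
        uppercase_in_place(path)
        with open(path, "rb") as f:
            return f.read()

if __name__ == "__main__":
    print(demo())
```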

5.1 Principles of System I/O Buffering

The traditional way to reduce overhead when performing exchanges with external memory devices that have a block structure is block I/O buffering. This means that any block of an external memory device is read first of all into some buffer of the main memory area, called the system cache in UNIX OS, and from there it is completely or partially (depending on the type of exchange) copied to the corresponding user space.

The principles of the traditional buffering mechanism are as follows. First, a copy of the contents of a block is kept in a system buffer until it has to be replaced because of a shortage of buffers (a variation of the LRU algorithm is used as the replacement policy). Second, when any block of an external memory device is written, only the cache buffer is actually updated (or created and filled). The actual exchange with the device takes place either when the buffer is flushed because its contents are being replaced, or when the special sync (or fsync) system call is issued, which exists specifically to force updated cache buffers out to external memory.
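The two principles, LRU replacement and delayed writing, can be shown with a toy model. The Python sketch below is an illustration only and not the kernel's actual data structure; `ToyBufferCache` is an invented name, and a dictionary stands in for the external memory device.

```python
from collections import OrderedDict

class ToyBufferCache:
    """Toy write-back block cache with least-recently-used replacement."""

    def __init__(self, disk: dict, capacity: int):
        self.disk = disk                # block number -> bytes (stands in for the device)
        self.capacity = capacity
        self.buffers = OrderedDict()    # block number -> [data, dirty]; oldest first

    def _load(self, block):
        if block in self.buffers:
            self.buffers.move_to_end(block)      # mark as most recently used
            return
        if len(self.buffers) >= self.capacity:
            victim, (data, dirty) = self.buffers.popitem(last=False)
            if dirty:
                self.disk[victim] = data         # flushed only on replacement
        self.buffers[block] = [self.disk.get(block, b""), False]

    def read(self, block):
        self._load(block)
        return self.buffers[block][0]

    def write(self, block, data):
        self._load(block)
        self.buffers[block] = [data, True]       # update the buffer, not the device

    def sync(self):
        """Force all updated buffers out to the device."""
        for block, entry in self.buffers.items():
            if entry[1]:
                self.disk[block] = entry[0]
                entry[1] = False
```

In this model a written block reaches the device only when its buffer is evicted or when `sync` is called, which is why an unexpected power loss can lose recent writes.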

This traditional buffering scheme has come into conflict with the virtual memory management facilities developed in modern versions of the UNIX OS, and especially with the mechanism for mapping files to virtual memory segments. Therefore, System V Release 4 introduced a new buffering scheme, which is currently used in parallel with the old one.

The essence of the new scheme is that the mechanism for mapping files to virtual memory segments is in effect reproduced at the kernel level. First, recall that the UNIX kernel does indeed run in its own virtual memory. This memory has a more complex structure than the user's virtual memory, but fundamentally the same one. In other words, the virtual memory of the kernel is segmented and paged and, along with the virtual memory of user processes, is supported by a common virtual memory management subsystem. It follows, second, that practically any function the kernel provides to users can also be provided by some kernel components to other kernel components. In particular, this applies to the ability to map files to virtual memory segments.