NVIDIA CUDA: GPU computing or the death of the CPU? Non-graphical calculations on GPUs

A new technology is like a newly emerged species. It is a strange creature, unlike the many old-timers: sometimes awkward, sometimes funny. At first its new qualities seem entirely unsuited to this settled and stable world.

A little time passes, however, and it turns out that the newcomer runs faster, jumps higher and is generally stronger. It also eats more flies than its conservative neighbors. Then those same neighbors begin to understand that quarreling with the former clumsy one is not worth it. It is better to be friends with it, and better still to form a symbiosis. Before you know it, there will be more flies for everyone.

GPGPU (General-Purpose computing on Graphics Processing Units) long existed only in the theoretical work of brainy academics. How could it be otherwise? Only theorists would propose a radical change to a computing process decades in the making by handing its parallel branches to the video card.

The CUDA logo is a reminder that the technology grew out of 3D graphics.

But GPGPU was not destined to gather dust on the pages of university journals for long. Showing off its best qualities, it caught the attention of manufacturers. That is how CUDA, an implementation of GPGPU on NVIDIA's GeForce GPUs, was born.

Thanks to CUDA, GPGPU went mainstream. Now only the most short-sighted and laziest developer of programming tools fails to claim CUDA support for their product. IT publications rushed to present the details of the technology in numerous hefty popular-science articles, and competitors hurriedly sat down with templates and cross-compilers to develop something similar.

Public recognition is the dream not only of aspiring starlets but also of newborn technologies. And CUDA got lucky. It is well known; people talk about it and write about it.

Only they write as if they were still discussing GPGPU in thick scientific journals. They bombard the reader with terms like "grid", "SIMD", "warp", "host", "texture and constant memory". They plunge the reader head-first into diagrams of NVIDIA GPU organization, lead them down the winding paths of parallel algorithms, and (the strongest move) show long listings of C code. As a result, a reader goes into the article with a fresh, burning desire to understand CUDA and comes out as the same reader, but with a swollen head stuffed with a jumble of facts, diagrams, code, algorithms and terms.

Meanwhile, the goal of any technology is to make our lives easier, and CUDA does this very well. Its results will convince any skeptic better than hundreds of diagrams and algorithms.

Far from everywhere

CUDA is supported by NVIDIA Tesla high-performance supercomputers.

And yet, before looking at what CUDA does to make life easier for the average user, it is worth understanding its limitations. It is like dealing with a genie: any wish you like, but only one. CUDA has its Achilles' heels too. One of them is the limited set of platforms it can run on.

The NVIDIA video cards that support CUDA are given in a special list called CUDA Enabled Products. The list is impressive but easy to classify. CUDA is supported by:

NVIDIA GeForce models of the 8, 9, 100, 200 and 400 series with at least 256 MB of video memory on board. Support extends to both desktop and mobile cards.

The vast majority of desktop and mobile NVIDIA Quadro graphics cards.

All solutions in the NVIDIA ION netbook series.

NVIDIA Tesla high-performance computing (HPC) and supercomputing solutions, used both for personal computing and for building scalable cluster systems.

So before using CUDA-based software, it is worth checking this list.

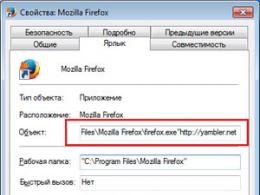

Besides the graphics card itself, CUDA support requires an appropriate driver. The driver is the link between the central and graphics processors, acting as a software interface that gives a program's code and data access to the GPU's multi-core riches. To be safe, NVIDIA recommends visiting its drivers page and getting the most recent version.

...but the process

How does CUDA work? How to explain the complex process of parallel computing on a specific GPU hardware architecture without plunging the reader into an abyss of specific terms?

You can try to do this by imagining how the central processor executes the program in symbiosis with the graphics processor.

Architecturally, the central processing unit (CPU) and its graphics counterpart (GPU) are built differently. To borrow an analogy from the automotive world, the CPU is a station wagon, the kind nicknamed a "barn". It looks like a passenger car, but from its designers' point of view it is a jack of all trades: a small truck, a bus and an oversized hatchback at once. Universal, in short. It has few cores (its cylinders), but they can pull almost any task, and its impressive cache can hold a lot of data.

The GPU, by contrast, is a sports car. It has a single function: to get the driver to the finish line as quickly as possible. So there is no large trunk of memory and there are no extra seats. But it has hundreds of times more cores (cylinders) than the CPU.

With CUDA, GPGPU developers do not need to master the complexities of programming for graphics engines such as DirectX and OpenGL.

Unlike the central processor, which can solve any task, graphics included, but with average performance, the graphics processor is tailored to solving one task at high speed: turning heaps of polygons at the input into heaps of pixels at the output. Moreover, this task can be solved in parallel on the hundreds of relatively simple computing cores inside the GPU.

So what can a tandem of station wagon and sports car do? CUDA works roughly like this: the program runs on the CPU until it reaches a section of code that can be executed in parallel. Then, instead of running slowly on the two (or even eight) cores of the most powerful CPU, that section is handed to hundreds of GPU cores. Its execution time drops several-fold, and so the execution time of the whole program drops as well.
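How much the whole program gains depends on how much of its run time the parallel section occupies. This relationship is usually called Amdahl's law, a name the article itself does not use. The sketch below is only an illustration: the function name, the 90% parallel share and the 50-fold section speedup are assumed numbers, not measurements.

```python
def overall_speedup(parallel_fraction, section_speedup):
    """Amdahl's law: whole-program speedup when only part of the run time is accelerated."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / section_speedup)


# Hypothetical workload: 90% of the run time can be parallelized,
# and the GPU runs that part 50 times faster than the CPU.
print(round(overall_speedup(0.9, 50.0), 2))

# Even an infinitely fast GPU cannot beat 1 / serial_fraction (10x here),
# because the serial 10% still runs on the CPU.
print(round(overall_speedup(0.9, 1e12), 2))
```

The takeaway is that the serial part left on the CPU sets a ceiling on the total gain, no matter how many GPU cores take the parallel part.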

For the programmer, little changes. CUDA programs are written in C, or more precisely in a dialect of C extended with constructs for parallel execution. Its roots go back to Brook, a "C with streams" extension of the language developed at Stanford. The interface that passes the parallel code to the GPU is the driver of a CUDA-capable video card. It organizes the processing of that section of the program so that, to the programmer, the GPU looks like a coprocessor of the CPU, much like the math coprocessors of the early days of personal computers. With the arrival of Brook, CUDA-capable video cards and their drivers, any programmer gained the ability to use the GPU in their programs. Before that, this shamanism belonged to a narrow circle of the elite who had spent years honing their programming techniques for the DirectX or OpenGL graphics engines.

It is time to add a fly in the ointment to all this praise of CUDA: its restrictions. Not every programming task is suitable for CUDA. It will not speed up routine office tasks, but you can trust it to calculate the behavior of thousands of identical fighters in World of Warcraft. That, however, is a contrived example. Let's look at what CUDA already solves very effectively.

Righteous works

CUDA is a very pragmatic technology. Having implemented its support in their video cards, nVidia quite rightly expected that the CUDA banner would be picked up by many enthusiasts both in the university environment and in commerce. And so it happened. CUDA-based projects are alive and well.

NVIDIA PhysX

Advertising the next gaming masterpiece, manufacturers often emphasize its 3D realism. But no matter how real the 3D game world is, if the elementary laws of physics, such as gravity, friction, hydrodynamics, are implemented incorrectly, the falsity will be felt instantly.

One of the features of the NVIDIA PhysX physics engine is realistic cloth simulation.

Implementing algorithms that simulate basic physical laws on a computer is very laborious. The best-known companies in this field are the Irish company Havok, with its cross-platform Havok Physics engine, and the Californian company Ageia, creator of the world's first physics processing unit (PPU) and the corresponding PhysX physics engine. The first, although acquired by Intel, is now actively optimizing the Havok engine for ATI video cards and AMD processors. Ageia, with its PhysX engine, became part of NVIDIA, which then solved the rather difficult task of adapting PhysX to CUDA.

Statistics made this possible. It has been shown statistically that no matter how complex the rendering a GPU performs, some of its cores still sit idle. It is on these cores that the PhysX engine runs.

Thanks to CUDA, the lion's share of the calculations related to the physics of the game world moved to the video card. The freed-up CPU power was redirected to other gameplay tasks. The result was not long in coming. According to experts, gameplay performance with PhysX running on CUDA increased by at least an order of magnitude. The plausibility of the simulated physics has grown too. CUDA takes care of the routine calculation of friction, gravity and other familiar phenomena for multidimensional objects. Now not only the heroes and their equipment obey the familiar laws of the physical world, but also dust, fog, blast waves, flames and water.

CUDA version of the NVIDIA Texture Tools 2 texture compression package

Do you like realistic objects in modern games? Thank the texture artists. But the more realistic a texture, the larger it is and the more precious memory it takes up. To avoid this, textures are compressed in advance and decompressed dynamically as needed, and compression and decompression are pure computation. For working with textures, NVIDIA has released the NVIDIA Texture Tools package. It supports efficient compression and decompression of DirectX textures (the so-called HFC format). The second version of the package boasts support for the BC4 and BC5 compression algorithms implemented in DirectX 11. But the main thing is that NVIDIA Texture Tools 2 supports CUDA. According to NVIDIA, this gives a 12-fold performance increase in texture compression and decompression tasks. That means game frames will load faster and delight the player with their realism.

The NVIDIA Texture Tools 2 package is tailored to work with CUDA. The increase in performance when compressing and decompressing textures is evident.

Using CUDA can significantly improve the efficiency of video surveillance.

Real-time video stream processing

Say what you like, but when it comes to surveillance, today's world is much closer to Orwell's Big Brother than it seems. Drivers and visitors to public places alike feel the gaze of video cameras.

Full-flowing rivers of video pour into processing centers and ... run into a bottleneck: a human being. In most cases it is a person who is the final authority watching the video world, and not the most efficient one: blinking, distracted and prone to nodding off.

Thanks to CUDA, it became possible to implement algorithms that track multiple objects in a video stream simultaneously. The processing happens in real time on full 30 fps video. Compared with an implementation of the same algorithm on modern multi-core CPUs, CUDA gives a two- to three-fold performance increase, which, you must agree, is quite a lot.

Video conversion, audio filtering

Badaboom Video Converter is the first to use CUDA to speed up conversion.

It is nice to watch a new release in Full HD on a big screen. But you cannot take a big screen on the road, and a Full HD video codec will choke on the low-powered processor of a mobile gadget. Conversion comes to the rescue. But most people who have tried it in practice complain about the long conversion time. That is understandable: the process is routine and well suited to parallelization, and running it on the CPU is far from optimal.

CUDA, however, handles it with ease. The first example is the Badaboom converter from Elemental. The Badaboom developers were right to choose CUDA. Tests show that a standard hour-and-a-half movie is converted to iPhone/iPod Touch format in less than twenty minutes, whereas the same process takes more than an hour on the CPU alone.

CUDA also helps audio professionals. Any of them would give half a kingdom for an efficient FIR crossover, a set of filters that divide the sound spectrum into several bands. This process is very time-consuming and, with a large amount of audio material, sends the sound engineer off for a smoke break of several hours. A CUDA-based implementation of the FIR crossover speeds it up hundreds of times.
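To see why a FIR crossover parallelizes so well, here is a minimal two-band sketch in plain Python. It is not the CUDA implementation mentioned above: the windowed-sinc design, the 31-tap length, the cutoff value and all function names are assumptions chosen for illustration.

```python
import math


def lowpass_taps(num_taps, cutoff):
    """Windowed-sinc low-pass taps; cutoff is a fraction of the sample rate (0 to 0.5).
    num_taps is assumed to be odd so the filter has a center tap."""
    mid = (num_taps - 1) // 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * cutoff if k == 0 else math.sin(2 * math.pi * cutoff * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))  # Hamming window
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalize to unit gain at 0 Hz


def fir(signal, taps):
    """Direct-form FIR filter. Each output sample is an independent dot product,
    so on a GPU every sample could be computed by its own thread."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, t in enumerate(taps):
            if i - j >= 0:
                acc += t * signal[i - j]
        out.append(acc)
    return out


def crossover(signal, num_taps=31, cutoff=0.1):
    """Split a signal into a low band and a complementary high band."""
    low = lowpass_taps(num_taps, cutoff)
    mid = (num_taps - 1) // 2
    high = [-t for t in low]
    high[mid] += 1.0  # high-pass = delayed unit impulse minus low-pass
    return fir(signal, low), fir(signal, high)
```

Because the high band is built as the complement of the low band, adding the two outputs gives back the input delayed by `(num_taps - 1) // 2` samples, which is a convenient sanity check for any crossover of this kind.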

CUDA Future

Having made GPGPU a reality, CUDA is not going to rest on its laurels. As happens everywhere, a feedback loop is at work: not only does the architecture of NVIDIA's video processors influence the development of CUDA SDK versions, but CUDA itself forces NVIDIA to revise the architecture of its chips. An example of this feedback is the NVIDIA ION platform, whose second version is specially optimized for CUDA tasks. This means that even in relatively inexpensive hardware, consumers will get all the power and brilliant capabilities of CUDA.

DirectX - a set of low-level programming interfaces (APIs) for creating games and other high-performance multimedia applications. Includes support for high-performance 2D and 3D graphics, sound and input devices.

Direct3D (D3D) - an interface for rendering three-dimensional primitives (geometric bodies). Included in DirectX.

OpenGL (Open Graphics Library) - a specification that defines a language-independent, cross-platform programming interface for writing applications that use two-dimensional and three-dimensional computer graphics. Includes over 250 functions for drawing complex 3D scenes from simple primitives. It is used in video games, virtual reality and scientific visualization. On Windows it competes with Direct3D.

OpenCL (Open Computing Language) - a framework for writing programs that perform parallel computations on various graphics (GPU) and central (CPU) processors. The OpenCL framework includes a programming language and an application programming interface (API). OpenCL provides parallelism at the instruction level and at the data level and is an implementation of the GPGPU technique.

GPGPU (General-Purpose Graphics Processing Units) - a technique of using the graphics processor of a video card for general-purpose calculations that are usually carried out by the CPU.

Shader - a program for shading synthesized images, used in three-dimensional graphics to determine the final parameters of an object or image. Typically includes descriptions of light absorption and scattering, texture mapping, reflection and refraction, shading, surface displacement, and post-processing effects of arbitrary complexity. Complex surfaces can be rendered using simple geometric shapes.

Rendering - visualization; in computer graphics, the process of obtaining an image from a model using software.

SDK (Software Development Kit) - a set of software development tools.

CPU (Central Processing Unit) - the central (micro)processor; a device that executes machine instructions; the piece of hardware responsible for performing computational operations (specified by the operating system and application software) and for coordinating the work of all devices.

GPU (Graphics Processing Unit) - a graphics processor; a separate device in a personal computer or game console that performs graphics rendering (visualization). Modern GPUs process and render computer graphics very efficiently. The graphics processor in modern video adapters serves as a 3D graphics accelerator, but in some cases it can also be used for general calculations (GPGPU).

CPU problems

For a long time, the performance of traditional CPUs grew mainly through steady increases in clock frequency (about 80% of performance was determined by the clock frequency) combined with a growing number of transistors on a single chip. However, further increases in clock frequency (above 3.8 GHz the chips simply overheat!) run into a number of fundamental physical barriers, since process technology has nearly reached atomic dimensions (the lattice constant of crystalline silicon is approximately 0.543 nm):

First, as the die shrinks and the clock frequency rises, transistor leakage current increases. This leads to higher power consumption and more heat;

Second, the benefits of higher clock speeds are offset in part by memory access latencies, as memory access times do not match increasing clock speeds;

Third, for some applications, traditional serial architectures become inefficient as clock speeds increase due to the so-called “Von Neumann bottleneck,” a performance bottleneck resulting from the sequential flow of computation. At the same time, resistive-capacitive signal transmission delays increase, which is an additional bottleneck associated with an increase in the clock frequency.

GPU development

In parallel, GPUs were developing as well:

…

November 2008 - Intel introduced a line of quad-core Intel Core i7 processors based on the next-generation Nehalem microarchitecture. The processors run at clock frequencies of 2.6-3.2 GHz and are made on a 45 nm process.

December 2008 - shipments began of the quad-core AMD Phenom II 940 (code name Deneb). It runs at 3 GHz and is made on a 45 nm process.

…

May 2009 - AMD introduced a version of the ATI Radeon HD 4890 GPU with the core clock raised from 850 MHz to 1 GHz. This is the first graphics processor running at 1 GHz. Thanks to the higher frequency, the chip's processing power grew from 1.36 to 1.6 teraflops. The processor contains 800 (!) cores and supports GDDR5 video memory, DirectX 10.1, ATI CrossFireX and all the other technologies found in modern video cards. The chip is made on a 55 nm process.
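The teraflops figures quoted for the Radeon HD 4890 follow from simple arithmetic: number of cores times clock frequency times operations per core per cycle. The sketch below reproduces them under one assumption that the text does not state, namely that each core completes one multiply-add (counted as two floating-point operations) per cycle; the function name is hypothetical.

```python
def peak_teraflops(cores, clock_ghz, flops_per_core_per_cycle=2):
    """Theoretical peak throughput in teraflops.

    flops_per_core_per_cycle=2 is an assumption: one fused multiply-add
    per core per cycle, counted as two floating-point operations.
    """
    gigaflops = cores * clock_ghz * flops_per_core_per_cycle
    return gigaflops / 1000.0


print(peak_teraflops(800, 0.85))  # original 850 MHz clock
print(peak_teraflops(800, 1.0))   # overclocked 1 GHz version
```

Peak numbers like these describe an upper bound that assumes every core is busy on every cycle; real applications reach only a fraction of it.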

Main GPU differences

The distinctive features of the GPU (compared with the CPU) are:

– an architecture aimed squarely at speeding up the calculation of textures and complex graphic objects;

– a peak performance that, for a typical GPU, is much higher than that of a CPU;

– thanks to a dedicated pipeline architecture, the GPU processes graphic information much more efficiently than the CPU.

"The Crisis of the Genre"

The "crisis of the genre" for the CPU came to a head by 2005, which is when multi-core processors appeared. But despite the development of this technology, the performance growth of conventional CPUs slowed markedly, while GPU performance continued to grow. So, by 2003, a revolutionary idea had crystallized: use the computing power of the graphics processor. GPUs came into active use for "non-graphical" computing (physics simulation, signal processing, computational mathematics/geometry, database operations, computational biology, computational economics, computer vision, etc.).

The main problem was that there was no standard interface for programming the GPU. Developers used OpenGL or Direct3D, but this was very inconvenient. NVIDIA (one of the largest manufacturers of graphics, media and communication processors, as well as wireless media processors; founded in 1993) set out to develop a unified and convenient standard, and introduced CUDA technology.

How it started

2006 - NVIDIA demonstrates CUDA™; the start of a revolution in GPU computing.

2007 - NVIDIA releases the CUDA architecture (the original version of the CUDA SDK was presented on February 15, 2007); "Best New" award from Popular Science magazine and "Readers' Choice" from HPCWire.

2008 - NVIDIA CUDA technology wins the "Technical Excellence" award from PC Magazine.

What is CUDA

CUDA (Compute Unified Device Architecture) - an architecture (a set of software and hardware) that makes it possible to perform general-purpose computations on the GPU, with the GPU effectively acting as a powerful coprocessor.

NVIDIA CUDA™ technology is the only C-language development environment that lets developers create software for solving complex computational problems in less time, thanks to the processing power of GPUs. Millions of CUDA-capable GPUs are already in use around the world, and thousands of programmers already use the (free!) CUDA tools to accelerate applications and solve the most complex, resource-intensive tasks, from video and audio encoding to oil and gas exploration, product modeling, medical imaging and scientific research.

CUDA lets the developer organize access to the graphics accelerator's instruction set, manage its memory and run complex parallel computations on it as they see fit. A CUDA-capable graphics accelerator becomes a powerful, programmable, open architecture like today's CPUs. All this gives the developer low-level, distributed, high-speed access to the hardware, making CUDA a necessary foundation for serious high-level tools such as compilers, debuggers, math libraries and software platforms.

Uralsky, Lead Technology Specialist at NVIDIA, compares the GPU and the CPU like this: "The CPU is an SUV. It goes always and everywhere, but not very fast. The GPU is a sports car. On a bad road it simply will not go anywhere, but give it a good surface and it will show a speed the SUV never dreamed of!"

CUDA technology capabilities

Devices for turning personal computers into small supercomputers have been known for a long time. Back in the 1980s, so-called transputers were offered on the market; they plugged into the ISA expansion slots common at the time. At first their performance on suitable tasks was impressive, but then the performance of general-purpose processors grew faster, those processors strengthened their position in parallel computing, and transputers lost their point. Such devices still exist today in the form of various specialized accelerators, but their scope is often narrow and they are not widely used.

Recently, however, the baton of parallel computing has passed to the mass market, which is connected one way or another with 3D games. General-purpose devices with multi-core processors for the parallel vector computations used in 3D graphics reach peak performance that general-purpose processors cannot match. Of course, the maximum speed is achieved only in a number of suitable tasks and comes with some limitations, but such devices are already widely used in areas for which they were not originally intended. A great example of such a parallel processor is the Cell processor, developed by the Sony-Toshiba-IBM alliance and used in the Sony PlayStation 3 game console, as are all modern video cards from the market leaders, Nvidia and AMD.

We will not touch on Cell today, although it appeared earlier and is a general-purpose processor with additional vector capabilities. For 3D video accelerators, the first technologies for non-graphical general-purpose computation, GPGPU (General-Purpose computation on GPUs), appeared several years ago. Modern video chips contain hundreds of mathematical execution units, and this power can be used to significantly speed up many computationally intensive applications. Current generations of GPUs have a sufficiently flexible architecture that, together with high-level programming languages and hardware-software architectures like the one discussed in this article, unlocks these possibilities and makes them much more accessible.

GPGPU was prompted by the emergence of fast and flexible shader programs that modern video chips are able to execute. Developers decided to have the GPU not only compute the image in 3D applications but also take part in other parallel calculations. In GPGPU, the graphics APIs OpenGL and Direct3D were used for this: data was passed to the video chip in the form of textures, and the calculation programs were loaded as shaders. The disadvantages of this method are the relatively high complexity of programming, the low speed of data exchange between the CPU and GPU, and other restrictions, which we will discuss later.

GPU computing has developed, and continues to develop, very rapidly. Later, the two major video chip manufacturers, Nvidia and AMD, developed and announced their own platforms, called CUDA (Compute Unified Device Architecture) and CTM (Close To Metal, or AMD Stream Computing), respectively. Unlike previous GPU programming models, these were built with direct access to the hardware capabilities of the graphics cards. The platforms are not compatible with each other: CUDA is an extension of the C programming language, while CTM is a virtual machine that executes assembly code. But both platforms eliminated some important limitations of previous GPGPU models, which relied on the traditional graphics pipeline and the corresponding Direct3D or OpenGL interfaces.

Of course, open standards built on OpenGL seem the most portable and universal, since they allow the same code to be used on video chips from different manufacturers. But such methods have many disadvantages: they are much less flexible and less convenient to use. In addition, they prevent the use of features specific to particular video cards, such as the fast shared memory present in modern computing processors.

That is why Nvidia released the CUDA platform, a C-like programming language with its own compiler and libraries for GPU computing. Of course, writing optimal code for video chips is far from easy and requires long manual work, but CUDA exposes all the possibilities and gives the programmer more control over the hardware capabilities of the GPU. Importantly, Nvidia CUDA support is available on the G8x, G9x and GT2xx chips used in the very widespread GeForce 8, 9 and 200 series video cards. The final version of CUDA 2.0 has now been released, with some new features such as support for double-precision calculations. CUDA is available on 32-bit and 64-bit Linux, Windows and Mac OS X operating systems.

Difference between CPU and GPU in Parallel Computing

The clock frequencies of general-purpose processors have run into physical limitations and high power consumption, so their performance increasingly grows through placing several cores on a single chip. Processors sold today contain only up to four cores (further growth will not be fast), are intended for general applications, and use MIMD: multiple instruction streams, multiple data streams. Each core works separately from the others, executing different instructions for different processes.

Specialized vector capabilities (SSE2 and SSE3) for four-component (single-precision floating-point) and two-component (double-precision) vectors appeared in general-purpose processors primarily because of the growing demands of graphics applications. That is why GPUs are the better choice for certain tasks: they were built for them from the start.

For example, in Nvidia video chips the main unit is a multiprocessor with eight to ten cores and hundreds of ALUs in total, several thousand registers and a small amount of shared memory. In addition, the video card contains fast global memory accessible to all multiprocessors, local memory in each multiprocessor, and special memory for constants.

Most importantly, the cores of a GPU multiprocessor are SIMD (single instruction stream, multiple data streams) cores. They execute the same instructions at the same time. This style is common in graphics algorithms and many scientific tasks, but it requires a specific approach to programming. In return, it allows the number of execution units to be increased by simplifying them.
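The "specific approach to programming" that SIMD demands can be shown with a toy model. In the sketch below, a list stands in for the lanes of a SIMD unit and every instruction is applied to all lanes in lockstep. Handling a branch by masking off inactive lanes is an assumption about how such hardware typically behaves, not a detail taken from this article, and the function names are hypothetical.

```python
def simd_step(op, lanes, mask=None):
    """Apply one instruction to every active lane; inactive lanes keep their value."""
    if mask is None:
        mask = [True] * len(lanes)
    return [op(x) if active else x for x, active in zip(lanes, mask)]


def abs_then_double(lanes):
    # "if x < 0: x = -x" cannot branch per lane. All lanes step through the
    # instruction together, but only lanes holding a negative value are active.
    negative = [x < 0 for x in lanes]
    lanes = simd_step(lambda x: -x, lanes, negative)
    # An unconditional instruction runs on every lane.
    return simd_step(lambda x: 2 * x, lanes)


print(abs_then_double([-3, 1, -2, 4]))
```

In this model the lanes that skip the negation still spend the step waiting, which is why code with many data-dependent branches suits SIMD hardware poorly.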

So, let's list the main differences between CPU and GPU architectures. CPU cores are designed to execute a single stream of sequential instructions at maximum performance, while GPUs are designed to quickly execute large numbers of parallel instruction streams. General purpose processors are optimized to achieve high performance on a single instruction stream that processes both integers and floating point numbers. The memory access is random.

CPU designers try to get as many instructions as possible to execute in parallel in order to increase performance. To do this, starting with the Intel Pentium processors, superscalar execution appeared, providing the execution of two instructions per clock, and the Pentium Pro distinguished itself by out-of-order execution of instructions. But the parallel execution of a sequential stream of instructions has certain basic limitations, and by increasing the number of execution units, a multiple increase in speed cannot be achieved.

Video chips have a simple and parallel operation from the very beginning. The video chip takes a group of polygons at the input, performs all the necessary operations, and produces pixels at the output. Processing of polygons and pixels is independent, they can be processed in parallel, separately from each other. Therefore, due to the inherently parallel organization of work in the GPU, a large number of execution units are used, which are easy to load, in contrast to the sequential flow of instructions for the CPU. In addition, modern GPUs can also execute more than one instruction per clock (dual issue). Thus, the Tesla architecture, under certain conditions, launches the MAD+MUL or MAD+SFU operations simultaneously.

The GPU differs from the CPU also in terms of the principles of memory access. In the GPU, it is connected and easily predictable - if a texture texel is read from memory, then after a while the time will come for neighboring texels. Yes, and when recording the same - the pixel is written to the framebuffer, and after a few cycles, the one located next to it will be recorded. Therefore, the memory organization is different from that used in the CPU. And the video chip, unlike universal processors, simply does not need a large cache memory, and textures require only a few (up to 128-256 in current GPUs) kilobytes.

Memory is also attached differently in GPUs and CPUs. Not all CPUs have built-in memory controllers, while GPUs usually have several, up to eight 64-bit channels in the Nvidia GT200 chip. In addition, video cards use faster memory, so video chips have many times more memory bandwidth available, which is very important for parallel calculations that operate on huge data streams.
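The bandwidth advantage can be checked with simple arithmetic. The sketch below multiplies the total bus width by the memory data rate. The eight 64-bit channels come from the text; the data rates (1.6 and 0.8 billion transfers per second) and the dual-channel CPU configuration are assumed, illustrative values, and the function name is hypothetical.

```python
def peak_bandwidth_gb_s(channels, bits_per_channel, transfers_per_second):
    """Theoretical peak memory bandwidth in gigabytes per second."""
    bytes_per_transfer = channels * bits_per_channel // 8  # total bus width in bytes
    return bytes_per_transfer * transfers_per_second / 1e9


# Eight 64-bit channels (from the text) form a 512-bit bus.
# The 1.6e9 transfers/s data rate is an assumption, not a figure from the article.
gpu_like = peak_bandwidth_gb_s(channels=8, bits_per_channel=64,
                               transfers_per_second=1.6e9)

# An assumed dual-channel 64-bit CPU memory bus at 0.8e9 transfers/s.
cpu_like = peak_bandwidth_gb_s(channels=2, bits_per_channel=64,
                               transfers_per_second=0.8e9)

print(f"GPU-like bus: {gpu_like:.1f} GB/s, CPU-like bus: {cpu_like:.1f} GB/s")
```

Under these assumptions the wider bus and faster memory together give the video chip several times the bandwidth of the CPU, before any difference in access patterns is taken into account.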

In general-purpose processors, large numbers of transistors and much of the chip area go to instruction buffers, hardware branch prediction, and huge amounts of on-chip cache. All these hardware blocks are needed to speed up the execution of a few instruction streams. Video chips spend their transistors on arrays of execution units, flow control units, small shared memory, and multi-channel memory controllers. None of this speeds up individual threads; instead, it lets the chip process several thousand threads that execute simultaneously and require high memory bandwidth.

Caching differs as well. General-purpose CPUs use caches to increase performance by reducing memory access latency, while GPUs use cache or shared memory to increase bandwidth. CPUs reduce memory latency with large caches and branch prediction. These hardware blocks take up most of the chip area and consume a lot of power. Video chips get around memory latency by executing thousands of threads simultaneously: while one thread waits for data from memory, the chip performs calculations for another thread without stalling.

There are many differences in multithreading support as well. The CPU executes one or two threads per core, while video chips support up to 1024 threads per multiprocessor, and a chip contains several multiprocessors. Switching from one thread to another costs a CPU hundreds of cycles, whereas the GPU switches between several threads in a single cycle.

In addition, CPUs use SIMD (single instruction, multiple data) units for vector computing, while GPUs use SIMT (single instruction, multiple threads) for scalar thread processing. SIMT does not require the developer to pack data into vectors and allows arbitrary branching within threads.
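To make the SIMT idea more concrete, here is a deliberately simplified Python model of one warp executing a branch. It assumes that when threads of a warp disagree on a condition, the hardware runs each side of the branch in turn with only the matching threads active. The function name and the pass counter are illustrative, not part of CUDA.

```python
WARP_SIZE = 32  # threads that share one instruction stream


def simt_abs(values):
    """Compute abs() for one warp, emulating divergent-branch execution."""
    assert len(values) == WARP_SIZE
    result = list(values)
    # Every thread evaluates the branch condition on its own scalar value.
    mask = [v < 0 for v in values]
    passes = 0
    # Pass 1: only threads that took the "if" side are active.
    if any(mask):
        passes += 1
        for lane in range(WARP_SIZE):
            if mask[lane]:
                result[lane] = -values[lane]
    # Pass 2: only threads that took the "else" side are active.
    if not all(mask):
        passes += 1
        for lane in range(WARP_SIZE):
            if not mask[lane]:
                result[lane] = values[lane]
    return result, passes


mixed = [(-1) ** lane * lane for lane in range(WARP_SIZE)]  # odd lanes are negative
uniform = list(range(WARP_SIZE))                            # no lane is negative

print(simt_abs(mixed)[1], simt_abs(uniform)[1])
```

Each thread is written as ordinary scalar code with an ordinary `if`; the cost of branching shows up only as extra passes when the threads of a warp diverge.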

In short, unlike modern general-purpose CPUs, video chips are designed for parallel computing with a large number of arithmetic operations. A much larger share of GPU transistors does the actual work of processing data arrays, instead of controlling the execution (flow control) of a few sequential threads. This diagram shows how much die space the various kinds of logic take up in the CPU and the GPU:

As a result, the basis for using the power of the GPU effectively in scientific and other non-graphical calculations is the parallelization of algorithms across the hundreds of execution units available in video chips. For example, many molecular modeling applications are well suited to video chips: they require great computing power and parallelize well. Using multiple GPUs provides even more computing power for such problems.

GPU computing shows excellent results in data-parallel algorithms, that is, when the same sequence of mathematical operations is applied to a large amount of data. The best results are achieved when the ratio of arithmetic instructions to memory accesses is high enough. Such workloads place fewer demands on flow control, and their high arithmetic density and large data volumes remove the need for large caches like those on the CPU.
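The ratio of arithmetic to memory accesses can be estimated on paper before any code is ported. The sketch below compares two textbook workloads; the operation counts are idealized assumptions (for the matrix product, each matrix is assumed to be read or written exactly once, with perfect on-chip reuse), and the helper name is hypothetical.

```python
def arithmetic_intensity(arith_ops, memory_accesses):
    """Arithmetic operations performed per memory access."""
    return arith_ops / memory_accesses


n = 1024

# Vector addition c[i] = a[i] + b[i]:
# one addition, two loads and one store per element.
vector_add = arithmetic_intensity(arith_ops=n, memory_accesses=3 * n)

# n x n matrix product: about 2*n^3 multiplications and additions.
# Idealized assumption: each of the three matrices is touched once (3*n^2 accesses).
matmul = arithmetic_intensity(arith_ops=2 * n**3, memory_accesses=3 * n**2)

print(f"vector add: {vector_add:.2f}, matrix multiply: {matmul:.1f}")
```

By this rough measure, vector addition is limited by memory traffic, while a matrix product that reuses data on chip has far more arithmetic per access and is the kind of task the paragraph above describes as a good fit.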

As a result of all the differences described above, the theoretical performance of video chips significantly exceeds the performance of the CPU. Nvidia provides the following graph of CPU and GPU performance growth over the past few years:

Naturally, these figures are not without a touch of slyness. On the CPU it is much easier to approach the theoretical figures in practice, and the figures are given for single precision in the case of the GPU but for double precision in the case of the CPU. Still, single precision is enough for some parallel tasks, and the difference in speed between general-purpose and graphics processors is very large, so the game is worth the candle.

The first attempts to apply calculations on the GPU

Video chips have long been used for parallel mathematical calculations. The very first attempts were extremely primitive and limited to exploiting certain hardware features, such as rasterization and Z-buffering. In the current century, with the advent of shaders, video chips began to be used to speed up matrix calculations. In 2003 SIGGRAPH gave GPU computing a separate section, called GPGPU (General-Purpose computation on GPU).

The best known of these efforts is BrookGPU, a compiler for the Brook stream programming language, designed to perform non-graphical computations on the GPU. Before it appeared, developers who used video chips for calculations had to choose one of two common APIs: Direct3D or OpenGL. This seriously limited the use of the GPU, because 3D graphics relies on shaders and textures, which parallel programmers are not required to know about; they think in terms of threads and kernels. Brook made their task easier. These streaming extensions to the C language, developed at Stanford University, hid the 3D API from programmers and presented the video chip as a parallel coprocessor. The compiler processed a .br file containing C++ code with extensions and produced code linked to a library with DirectX, OpenGL or x86 support.

Naturally, Brook had many shortcomings, which we will discuss in more detail later. But its mere appearance drew considerable attention from Nvidia and ATI to GPU computing, since the development of these capabilities went on to change the market seriously, opening up a whole new sector: parallel computing on video chips.

Later, some researchers from the Brook project joined the Nvidia development team to shape a hardware and software strategy for parallel computing and open up a new market segment. The main advantage of this Nvidia initiative is that its developers know the capabilities of their GPUs down to the smallest detail, so there is no need to go through a graphics API: the hardware can be driven directly through the driver. The result of this team's efforts is Nvidia CUDA (Compute Unified Device Architecture), a new hardware and software architecture for parallel computing on Nvidia GPUs, which is the subject of this article.

Areas of application of parallel computations on the GPU

To show what moving calculations to video chips can bring, here are average figures obtained by researchers around the world. In many tasks, moving calculations to the GPU gives a speedup of 5-30 times compared with fast general-purpose processors. The largest figures (of the order of 100x and more!) are achieved on code that is poorly suited to SSE units but quite convenient for the GPU.

These are just some examples of speedups of synthetic code on the GPU versus SSE vectorized code on the CPU (according to Nvidia):

- Fluorescence microscopy: 12x;

- Molecular dynamics (non-bonded force calc): 8-16x;

- Electrostatics (direct and multi-level Coulomb summation): 40-120x and 7x.

And here is a table that Nvidia is very fond of and shows at every presentation. We will dwell on it in more detail in the second part of the article, which is devoted to specific examples of practical CUDA applications:

As you can see, the numbers are very attractive, and the 100-150-fold gains are especially impressive. In the next CUDA article we will take a closer look at some of these numbers. For now, we list the main applications in which GPU computing is already used: analysis and processing of images and signals, physics simulation, computational mathematics, computational biology, financial calculations, databases, dynamics of gases and liquids, cryptography, adaptive radiation therapy, astronomy, sound processing, bioinformatics, biological simulations, computer vision, data mining, digital cinema and television, electromagnetic simulations, geographic information systems, military applications, mining planning, molecular dynamics, magnetic resonance imaging (MRI), neural networks, oceanographic research, particle physics, protein folding simulation, quantum chemistry, ray tracing, imaging, radar, reservoir simulation, artificial intelligence, satellite data analysis, seismic exploration, surgery, ultrasound, video conferencing.

Details of many applications can be found on the Nvidia website in the section on . As you can see, the list is quite long, and it is far from complete. We can safely assume that parallel computing on video chips will find other areas of application in the future that we cannot yet imagine.

Nvidia CUDA Capabilities

CUDA is Nvidia's software and hardware computing architecture based on an extension of the C language, which gives access to the instruction set of the graphics accelerator and lets the programmer manage its memory in parallel computations. CUDA makes it possible to implement algorithms that run on the graphics processors of Geforce video accelerators of the eighth generation and newer (Geforce 8, Geforce 9, Geforce 200 series), as well as Quadro and Tesla.

Although GPU programming with CUDA is fairly complex, it is simpler than with early GPGPU solutions. Such programs require the application to be partitioned across multiple multiprocessors, much as in MPI programming, but without partitioning the data, which is stored in video memory common to all of them. And since CUDA programming for each multiprocessor resembles OpenMP programming, it requires a good understanding of memory organization. Of course, the difficulty of developing for and porting to CUDA depends heavily on the application.

The developer kit contains many code examples and is well documented. Learning it takes about two to four weeks for those already familiar with OpenMP and MPI. The API is based on an extended C language, and the CUDA SDK includes the nvcc command-line compiler, based on the open-source Open64 compiler, to translate code written in it.

We list the main characteristics of CUDA:

- unified software and hardware solution for parallel computing on Nvidia video chips;

- a wide range of supported solutions, from mobile to multi-chip;

- the standard C programming language;

- standard libraries for numerical analysis FFT (Fast Fourier Transform) and BLAS (Linear Algebra);

- optimized data exchange between CPU and GPU;

- interaction with graphics API OpenGL and DirectX;

- support for 32- and 64-bit operating systems: Windows XP, Windows Vista, Linux and MacOS X;

- the ability to develop at a low level.

Regarding operating system support, it should be added that all major Linux distributions are officially supported (Red Hat Enterprise Linux 3.x/4.x/5.x, SUSE Linux 10.x), and according to enthusiasts CUDA also works fine on other distributions: Fedora Core, Ubuntu, Gentoo, etc.

The CUDA Development Environment (CUDA Toolkit) includes:

- nvcc compiler;

- FFT and BLAS libraries;

- profiler;

- gdb debugger for GPU;

- CUDA runtime driver included with standard Nvidia drivers;

- programming manual;

- CUDA Developer SDK (source code, utilities and documentation).

The source code examples include: parallel bitonic sort, matrix transposition, parallel prefix summation of large arrays, image convolution, discrete wavelet transform, examples of interaction with OpenGL and Direct3D, use of the CUBLAS and CUFFT libraries, option price calculation (Black-Scholes formula, binomial model, Monte Carlo method), the Mersenne Twister parallel random number generator, histogram calculation for large arrays, noise reduction, and the Sobel filter (edge detection).

Benefits and Limitations of CUDA

From a programmer's point of view, the graphics pipeline is a set of processing stages. The geometry block generates triangles, and the rasterization block generates pixels that are displayed on the monitor. The traditional GPGPU programming model is as follows:

Moving computations to the GPU within such a model requires a special approach. Even element-by-element addition of two vectors requires drawing a shape to the screen or to an off-screen buffer. The shape is rasterized, and the color of each pixel is calculated by a given program (a pixel shader). For each pixel, the program reads the input data from textures, adds them up, and writes the result to the output buffer. And all these operations are needed for something that takes a single statement in a conventional programming language!
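For comparison, this is the whole of that task in a conventional language. The snippet is a plain Python illustration with made-up input values; it has nothing GPU-specific in it.

```python
# Two input vectors with illustrative values.
a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]

# Element-by-element addition as a single expression:
# no shape to draw, no textures, no output buffer to set up.
c = [x + y for x, y in zip(a, b)]

print(c)
```

Everything the graphics pipeline version has to arrange (geometry, rasterization, texture reads, render target) is scaffolding around this one line.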

Therefore, the use of GPGPU for general-purpose computing is limited by being too hard for developers to learn. There are plenty of other restrictions too, because a pixel shader is just a formula that gives the final color of a pixel as a function of its coordinates, and the pixel shader language is a language for writing such formulas with a C-like syntax. The early GPGPU methods are a clever trick for harnessing the power of the GPU, but they offer no convenience. Data is represented by images (textures), and the algorithm is represented by the rasterization process. The very specific memory and execution model should also be noted.

Nvidia's hardware and software architecture for GPU computing differs from previous GPGPU models in that it allows programs for the GPU to be written in real C, with standard syntax and pointers, and needs only a minimum of extensions to access the computing resources of video chips. CUDA does not depend on graphics APIs and has some features designed specifically for general-purpose computing.

Advantages of CUDA over the traditional approach to GPGPU computing:

- the CUDA application programming interface is based on the standard C programming language with extensions, which simplifies the process of learning and implementing the CUDA architecture;

- CUDA provides access to 16 KB of shared memory per multiprocessor, which can be used to organize a cache with a higher bandwidth than texture fetches;

- more efficient data transfer between system and video memory;

- no need for graphics APIs with redundancy and overhead;

- linear memory addressing, and gather and scatter, the ability to write to arbitrary addresses;

- hardware support for integer and bit operations.

Main limitations of CUDA:

- lack of recursion support for executable functions;

- the minimum block width is 32 threads;

- proprietary CUDA architecture owned by Nvidia.

Programming with the previous GPGPU methods has several weaknesses: on older non-unified architectures they leave the vertex shader units idle, data is stored in textures and output to an off-screen buffer, and multi-pass algorithms use only the pixel shader units. The limitations of GPGPU include inefficient use of hardware capabilities, memory bandwidth constraints, the lack of a scatter operation (only gather is available), and mandatory use of the graphics API.

The main advantages of CUDA over previous GPGPU methods stem from the fact that the architecture is designed for efficient non-graphics computing on the GPU and uses the C programming language, without requiring algorithms to be recast in a form that suits the graphics pipeline. CUDA offers a new path to GPU computing that bypasses graphics APIs and provides random memory access (scatter and gather). Such an architecture is free from the disadvantages of GPGPU, uses all the execution units, and extends the capabilities with integer arithmetic and bit shift operations.

In addition, CUDA opens up some hardware features not available from the graphics APIs, such as shared memory. This is a small amount of memory (16 kilobytes per multiprocessor) that blocks of threads have access to. It allows you to cache the most frequently accessed data and can provide faster performance than using texture fetches for this task. This, in turn, reduces the throughput sensitivity of parallel algorithms in many applications. For example, it is useful for linear algebra, fast Fourier transform, and image processing filters.

Memory access is also more convenient in CUDA. Code written against a graphics API outputs data as 32 single-precision floating-point values (RGBA values written simultaneously to eight render targets) into predefined areas, whereas CUDA supports scatter writes: an unlimited number of writes to any address. These advantages make it possible to run algorithms on the GPU that cannot be implemented efficiently with GPGPU methods based on a graphics API.

Also, graphics APIs necessarily store data in textures, so large arrays must first be packed into textures, which complicates the algorithm and forces the use of special addressing. CUDA allows data to be read from any address. Another advantage of CUDA is optimized communication between the CPU and the GPU. And for developers who need low-level access (for example, when implementing another programming language), CUDA offers low-level assembly language programming.

History of CUDA development

CUDA was announced together with the G80 chip in November 2006, and the public beta of the CUDA SDK was released in February 2007. Version 1.0 was released in June 2007 for the launch of Tesla solutions based on the G80 chip, aimed at the high-performance computing market. Then, at the end of the year, the CUDA 1.1 beta was released, which, despite the small increase in version number, introduced quite a lot of new features.

Among the additions in CUDA 1.1 is the inclusion of CUDA functionality in the regular Nvidia video drivers. This means that the requirements of any CUDA program need only specify a Geforce 8 series video card or newer and a minimum driver version of 169.xx. This is very important for developers: if these conditions are met, a CUDA program will work for any user. Other additions are kernel execution that overlaps with data copying (only on G84, G86, G92 and newer chips), asynchronous data transfer to video memory, atomic memory operations, support for 64-bit versions of Windows, and multi-chip CUDA operation in SLI mode.

The current version, for solutions based on the GT200, is CUDA 2.0, which was released together with the Geforce GTX 200 line. Its beta version came out back in the spring of 2008. The second version adds support for double-precision calculations (in hardware only on the GT200), finally supports Windows Vista (32- and 64-bit versions) and Mac OS X, adds debugging and profiling tools, supports 3D textures, and optimizes data transfer.

As for double-precision calculations, on the current hardware generation they are several times slower than single precision. The reasons are discussed in ours. In the GT200, FP64 support is not implemented by reusing the FP32 units at a quarter of the rate; instead, Nvidia added dedicated units for FP64 calculations. The GT200 has about ten times fewer of them than FP32 units: one double-precision unit per multiprocessor.

In practice the performance can be even lower, since the architecture is optimized for 32-bit reads from memory and registers. Besides, double precision is not needed in graphics applications, and in the GT200 it was added more for the sake of having it. Modern quad-core processors deliver not much lower real-world performance. But even at ten times slower than single precision, this support is useful for mixed-precision schemes. One common technique is to obtain approximate results in single precision first and then refine them in double precision. Now this can be done directly on the video card, without sending intermediate data to the CPU.
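The mixed-precision idea can be illustrated with a tiny example. The sketch below is an assumption-laden stand-in, not GPU code: it emulates a single-precision result by rounding to 32 bits with the standard `struct` module, then applies one Newton step in double precision to refine a square root. The function names are hypothetical.

```python
import math
import struct


def to_float32(x):
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def sqrt_mixed(a):
    # Step 1: a cheap approximate answer, kept only to single precision.
    # (math.sqrt followed by rounding stands in for an FP32 computation.)
    x0 = to_float32(math.sqrt(a))
    # Step 2: one Newton iteration for x*x = a, carried out in double precision.
    x1 = 0.5 * (x0 + a / x0)
    return x0, x1


approx, refined = sqrt_mixed(2.0)
err_single = abs(approx - math.sqrt(2.0))
err_refined = abs(refined - math.sqrt(2.0))

print(f"single-precision error: {err_single:.1e}, after refinement: {err_refined:.1e}")
```

Most of the work is done at the fast precision, and a small amount of slow double-precision arithmetic recovers the remaining digits, which is why even a limited number of FP64 units is useful.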

Oddly enough, another useful feature of CUDA 2.0 has nothing to do with the GPU. It is now possible to compile CUDA code into highly efficient multi-threaded SSE code for fast execution on the CPU. This makes the feature suitable not only for debugging but also for real use on systems without an Nvidia graphics card. After all, the use of CUDA in ordinary code is held back by the fact that Nvidia video cards, although the most popular dedicated video solutions, are not present in every system. Before version 2.0, two separate versions of the code had to be written in such cases: one for CUDA and one for the CPU. Now any CUDA program can be run on the CPU with high efficiency, albeit at a lower speed than on video chips.

Nvidia CUDA Supported Solutions

All CUDA-enabled graphics cards can help accelerate most demanding tasks, from audio and video processing to medical and scientific research. The only real limitation is that many CUDA programs require a minimum of 256 megabytes of video memory, and this is one of the most important specifications for CUDA applications.

An up-to-date list of CUDA-enabled products can be found at . At the time of writing, CUDA was supported by all products of the Geforce 200, Geforce 9 and Geforce 8 series, including mobile products starting with the Geforce 8400M, as well as the Geforce 8100, 8200 and 8300 chipsets. Modern Quadro products and all Tesla products are supported too: S1070, C1060, C870, D870 and S870.

We especially note that along with the new Geforce GTX 260 and 280 video cards, the corresponding high-performance computing solutions were announced: the Tesla C1060 and S1070 (shown in the photo above), which will be available for purchase this fall. They use the same GPU, the GT200: one in the C1060 and four in the S1070. Unlike gaming solutions, however, they carry four gigabytes of memory per chip. The only drawback is perhaps the lower memory frequency, and therefore lower memory bandwidth, than on gaming cards: 102 gigabytes/s per chip.

Composition of Nvidia CUDA

CUDA includes two APIs: a high-level one (CUDA Runtime API) and a low-level one (CUDA Driver API). They cannot be used together in one program; you must choose one or the other. The high-level API works on top of the low-level one: all runtime calls are translated into simple instructions processed by the Driver API. But even the "high-level" API assumes knowledge of how Nvidia video chips are built and how they work; its level of abstraction is not very high.

There is another level, even higher - two libraries:

CUBLAS - CUDA version of BLAS (Basic Linear Algebra Subprograms), designed for computing linear algebra problems and using direct access to GPU resources;

CUFFT - CUDA version of the Fast Fourier Transform library for calculating the Fast Fourier Transform, widely used in signal processing. The following transformation types are supported: complex-complex (C2C), real-complex (R2C), and complex-real (C2R).

Let's take a closer look at these libraries. CUBLAS is a set of standard linear algebra algorithms ported to CUDA; at the moment only a certain set of basic BLAS functions is supported. The library is very easy to use: you create matrix and vector objects in video memory, fill them with data, call the required CUBLAS functions, and load the results from video memory back into system memory. CUBLAS contains special functions for creating and destroying objects in GPU memory, as well as for reading and writing data in this memory. Supported BLAS functions: levels 1, 2 and 3 for real numbers, level 1 and CGEMM for complex numbers. Level 1 covers vector-vector operations, level 2 vector-matrix operations, and level 3 matrix-matrix operations.
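As a reminder of what the three BLAS levels compute, here is a plain Python reference sketch. It is not the CUBLAS API: the function names follow the usual BLAS routine families (AXPY, GEMV, GEMM), but the signatures are simplified for illustration and omit the scaling factors and type prefixes of the real routines.

```python
def axpy(alpha, x, y):
    """Level 1 (vector-vector): return alpha * x + y."""
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def gemv(a, x):
    """Level 2 (matrix-vector): return the product a * x."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def gemm(a, b):
    """Level 3 (matrix-matrix): return the product a * b."""
    columns = list(zip(*b))  # columns of b as tuples
    return [[sum(p * q for p, q in zip(row, col)) for col in columns]
            for row in a]


print(axpy(2, [1, 2], [10, 20]))
print(gemv([[1, 2], [3, 4]], [1, 1]))
print(gemm([[1, 2], [3, 4]], [[0, 1], [1, 0]]))
```

The amount of arithmetic per element of data grows from level 1 to level 3, which is why matrix-matrix routines tend to benefit most from a GPU.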

CUFFT is the CUDA variant of the Fast Fourier Transform, which is widely used and very important in signal analysis, filtering and so on. CUFFT provides a simple interface for efficient FFT computation on Nvidia GPUs, without the need to develop a custom FFT for the GPU. It supports 1D, 2D and 3D transforms of complex and real data, as well as batched execution of multiple 1D transforms in parallel. The sizes of 2D and 3D transforms can be within , and 1D transforms of up to 8 million elements are supported.

Fundamentals of creating programs on CUDA

To follow the text below, you should understand the basic architectural features of Nvidia video chips. The GPU consists of several Texture Processing Clusters. Each cluster consists of a large texture fetch unit and two or three streaming multiprocessors, each of which consists of eight computing units and two special function units. All instructions are executed according to the SIMD principle, where one instruction is applied to all threads in a warp (a term from the textile industry; in CUDA it is a group of 32 threads, the minimum amount of data processed by a multiprocessor). This execution method is called SIMT (single instruction, multiple threads).

Each multiprocessor has certain resources. There is a special shared memory of 16 kilobytes per multiprocessor. It is not a cache, since the programmer can use it for any purpose, much like the Local Store in the SPUs of Cell processors. This shared memory allows threads of the same block to exchange information. It is important that all threads of one block are always executed by the same multiprocessor. Threads from different blocks cannot exchange data, and this limitation must be kept in mind. Shared memory is often useful, except when multiple threads access the same memory bank. Multiprocessors can also access video memory, but with higher latency and lower bandwidth. To speed up access and reduce the number of video memory accesses, each multiprocessor has 8 kilobytes of cache for constants and texture data.
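The remark about memory banks can be made concrete with a small model. The sketch below rests on assumptions that the article does not state: 16 banks, each 32 bits wide, with successive words assigned to successive banks, and requests served per group of 16 threads. All names and constants are illustrative.

```python
NUM_BANKS = 16   # assumed number of shared-memory banks
WORD_BYTES = 4   # assumed bank width: one 32-bit word
HALF_WARP = 16   # assumed number of threads served by one request


def conflict_degree(stride_words):
    """Largest number of threads in one request that hit the same bank."""
    hits = [0] * NUM_BANKS
    for thread in range(HALF_WARP):
        byte_address = thread * stride_words * WORD_BYTES
        bank = (byte_address // WORD_BYTES) % NUM_BANKS
        hits[bank] += 1
    return max(hits)


for stride in (1, 2, 16, 17):
    print(f"stride {stride:2d} words -> {conflict_degree(stride)}-way access")
```

In this model a stride of one word spreads the threads over all banks, a stride of 16 sends every thread to the same bank so the accesses have to be serialized, and padding the stride to 17 removes the conflict again.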

Each multiprocessor has 8192 registers on G8x/G9x and 16384 on GT2xx, shared by all threads of all blocks executing on it. On the G8x/G9x the maximum number of blocks per multiprocessor is eight, and the maximum number of warps is 24 (768 threads per multiprocessor). In total, the top video cards of the Geforce 8 and 9 series can process up to 12288 threads at a time. The GeForce GTX 280, based on the GT200, offers up to 1024 threads per multiprocessor; it has 10 clusters of three multiprocessors each and processes up to 30720 threads. Knowing these limits allows you to optimize algorithms for the available resources.
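These limits follow from one another by simple multiplication, as the short calculation below shows. The figure of 16 multiprocessors for the top Geforce 8 and 9 chips is an assumption inferred from 12288 / 768; the other inputs come from the text.

```python
WARP_SIZE = 32

# G8x/G9x: 24 warps per multiprocessor.
g80_threads_per_mp = 24 * WARP_SIZE
# 16 multiprocessors is an assumption for the top chips (12288 / 768).
g80_total_threads = 16 * g80_threads_per_mp

# GeForce GTX 280: 10 clusters of 3 multiprocessors, 1024 threads each.
gtx280_multiprocessors = 10 * 3
gtx280_total_threads = gtx280_multiprocessors * 1024

# Registers left for each thread when a multiprocessor is fully occupied.
g80_regs_per_thread = 8192 // g80_threads_per_mp
gt200_regs_per_thread = 16384 // 1024

print(g80_threads_per_mp, g80_total_threads, gtx280_total_threads)
print(g80_regs_per_thread, gt200_regs_per_thread)
```

The last two numbers are the practical consequence: a kernel that needs more registers per thread than this cannot keep a multiprocessor fully occupied.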

The first step in porting an existing application to CUDA is to profile it and identify the sections of code that are bottlenecks. If some of these sections are suitable for fast parallel execution, they are rewritten in C with CUDA extensions for execution on the GPU. The program is compiled with the Nvidia-supplied compiler, which generates code for both the CPU and the GPU. When the program runs, the CPU executes its portions of the code, and the GPU executes the CUDA code with the heaviest parallel computations. The part intended for the GPU is called the kernel. The kernel defines the operations to be performed on the data.

The video chip receives the kernel and creates a copy of it for each data element. These copies are called threads. A thread contains a program counter, registers, and state. For large amounts of data, as in image processing, millions of threads are launched. Threads run in groups of 32 called warps. Warps are assigned to specific streaming multiprocessors. Each multiprocessor consists of eight cores, stream processors that each execute one MAD instruction per clock cycle. Executing one 32-thread warp takes four multiprocessor cycles (at the shader domain frequency, which is 1.5 GHz and higher).
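The four-cycle figure, and the arithmetic rate it implies, can be reproduced in a few lines. The sketch assumes that a MAD counts as two floating-point operations and takes 1.5 GHz, the lower bound given in the text, as the shader clock.

```python
import math

WARP_SIZE = 32           # threads per warp
CORES_PER_MP = 8         # stream processors per multiprocessor
SHADER_CLOCK_HZ = 1.5e9  # lower bound quoted in the text

# Eight cores work through a 32-thread warp in four steps.
cycles_per_warp_instruction = math.ceil(WARP_SIZE / CORES_PER_MP)

# Assumption: one MAD (multiply-add) counts as 2 floating-point operations.
mad_gflops_per_mp = CORES_PER_MP * 2 * SHADER_CLOCK_HZ / 1e9

print(cycles_per_warp_instruction, mad_gflops_per_mp)
```

Multiplying the per-multiprocessor rate by the number of multiprocessors on a chip gives its theoretical MAD throughput at that clock.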

The multiprocessor is not a traditional multi-core processor. It is well suited to multithreading and supports up to 32 warps at a time. Every clock cycle, the hardware chooses which warp to execute and switches from one to another without losing cycles. To draw an analogy with a central processor, this is like executing 32 programs at once and switching between them every clock cycle with no context-switch overhead. In reality, a CPU core executes one program at a time and switches to another with a delay of hundreds of cycles.

CUDA Programming Model

Again, CUDA uses a parallel computing model in which each of the SIMD processors executes the same instruction on different data items in parallel. The GPU is a computing device, a coprocessor (device) for the central processor (host), which has its own memory and processes a large number of threads in parallel. A kernel is a function for the GPU that is executed by threads (its analogue in 3D graphics is a shader).

We said above that a video chip differs from a CPU in that it can process tens of thousands of threads simultaneously, which is typical of graphics, where the work parallelizes well. Each thread is scalar and does not require data to be packed into 4-component vectors, which is more convenient for most tasks. The number of logical threads and thread blocks exceeds the number of physical execution units, which gives good scalability across the company's entire product range.

The programming model in CUDA assumes that threads are grouped. Threads are combined into thread blocks, whose threads interact with each other through shared memory and synchronization points. The program (kernel) is executed over a grid of thread blocks, see the figure below. Only one grid is executed at a time. Each block can be one-, two-, or three-dimensional in shape and may contain up to 512 threads on current hardware.

The threads of a block are executed in small groups called warps, each 32 threads in size. This is the minimum amount of data that a multiprocessor can process. Since this is not always convenient, CUDA lets you work with blocks containing from 64 to 512 threads.

Grouping blocks into grids removes the limit on block size and lets the kernel be applied to a larger number of threads in one call. It also helps with scaling. If the GPU does not have enough resources, it executes the blocks sequentially. Otherwise the blocks can be executed in parallel, which is important for distributing work optimally across video chips of different classes, starting with mobile and integrated ones.
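The way a thread finds its data element within a grid of blocks can be emulated in ordinary Python. The sketch below is a sequential stand-in for a one-dimensional kernel launch, not CUDA code: the `launch` helper and the argument names are hypothetical, and real hardware would run the threads in parallel.

```python
def launch(kernel, grid_dim, block_dim, *args):
    """Sequentially emulate a 1D kernel launch: one call per logical thread."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    # Global index of this thread across the whole grid.
    i = block_idx * block_dim + thread_idx
    # Surplus threads in the last block have no element and do nothing.
    if i < len(out):
        out[i] = a[i] + b[i]


n = 1000
block_dim = 256                               # within the 64..512 range
grid_dim = (n + block_dim - 1) // block_dim   # round up so every element is covered

a = list(range(n))
b = [2 * x for x in a]
out = [None] * n
launch(add_kernel, grid_dim, block_dim, a, b, out)

print(grid_dim, out[:4], out[-1])
```

Because no block depends on another, the emulation could run the blocks in any order or all at once, which is exactly the freedom that lets the same program scale from small chips to large ones.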

CUDA memory model

The CUDA memory model features byte addressing and supports both gather and scatter. A fairly large number of registers, up to 1024, is available to each stream processor. Access to them is very fast, and they can hold 32-bit integers or floating-point numbers.

Each thread has access to the following types of memory:

Global memory - the largest amount of memory available to all multiprocessors on a video chip; its size ranges from 256 megabytes to 1.5 gigabytes for current solutions (and up to 4 GB for Tesla). It has high throughput, more than 100 gigabytes/s for top Nvidia solutions, but very high latency of several hundred cycles. It is not cached, and it supports generic load and store instructions and regular memory pointers.

Local memory - a small amount of memory that only one stream processor has access to. It is relatively slow, the same as global memory.

Shared memory - a 16-kilobyte (in video chips of the current architecture) memory block shared by all stream processors in a multiprocessor. This memory is very fast, the same as registers. It provides thread interaction, is directly managed by the developer, and has low latency. Advantages of shared memory: it can serve as a first-level cache managed by the programmer, it reduces data access delays for the execution units (ALUs), and it reduces the number of global memory accesses.

Constant memory - a 64-kilobyte memory area (the same for current GPUs), read-only for all multiprocessors. It is cached at 8 kilobytes per multiprocessor. It is quite slow, with a delay of several hundred cycles when the required data is not in the cache.

Texture memory - a block of memory available for reading by all multiprocessors. Data is fetched by the texture units of the video chip, so linear interpolation of the data is available at no additional cost. It is cached at 8 kilobytes per multiprocessor. It is as slow as global memory, with hundreds of cycles of delay when the data is not in the cache.

Naturally, the global, local, texture, and constant memory are physically the same memory, known as the video card's local video memory. Their differences are in different caching algorithms and access models. The CPU can update and query only external memory: global, constant and texture.
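As an illustration of memory that the CPU writes and the GPU only reads, the following sketch places four polynomial coefficients in constant memory. The names coeff, eval_cubic, and eval_cubic_on_gpu are hypothetical, and the choice of a cubic polynomial is only an example workload.

```cuda
#include <cuda_runtime.h>

// Read-only on the GPU, filled in by the CPU; reads go through the
// per-multiprocessor constant cache.
__constant__ float coeff[4];

// y[i] = coeff[0] + coeff[1]*x + coeff[2]*x^2 + coeff[3]*x^3 (Horner form)
__global__ void eval_cubic(const float *x, float *y, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        float v = x[i];
        y[i] = ((coeff[3] * v + coeff[2]) * v + coeff[1]) * v + coeff[0];
    }
}

// Hypothetical host helper; returns 0 on success, -1 on any CUDA error.
int eval_cubic_on_gpu(const float host_coeff[4],
                      const float *x, float *y, int n)
{
    size_t bytes = n * sizeof(float);
    float *d_x = NULL, *d_y = NULL;

    // The CPU updates constant memory through cudaMemcpyToSymbol.
    int ok = cudaMemcpyToSymbol(coeff, host_coeff,
                                4 * sizeof(float)) == cudaSuccess
          && cudaMalloc((void **)&d_x, bytes) == cudaSuccess
          && cudaMalloc((void **)&d_y, bytes) == cudaSuccess
          && cudaMemcpy(d_x, x, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        eval_cubic<<<(n + 255) / 256, 256>>>(d_x, d_y, n);
        ok = cudaMemcpy(y, d_y, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    cudaFree(d_x);   // cudaFree does nothing for a NULL pointer
    cudaFree(d_y);
    return ok ? 0 : -1;
}
```

Every thread reads the same four values, which is the access pattern the constant cache is suited to.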

From the above, it is clear that CUDA calls for a special approach to development, not quite the same as the one used for CPU programs. You need to keep the different memory types in mind and remember that local and global memory are not cached and that their access latency is much higher than that of registers, since they are physically located in separate chips.

A typical, but not mandatory, problem solving pattern:

- the task is divided into subtasks;

- the input data is divided into blocks that fit into shared memory;

- each block is processed by a thread block;

- the subblock is loaded into shared memory from the global one;

- corresponding calculations are performed on data in shared memory;

- the results are copied from shared memory back to global.
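The pattern above can be sketched as a block-wise sum. This is only one possible instance of the pattern, not a tuned implementation: the names TILE, block_sum, and sum_on_gpu are illustrative, the tile size of 256 floats (1 KB) is an assumption chosen to fit easily into shared memory, and error checking is omitted for brevity.

```cuda
#include <stdlib.h>
#include <cuda_runtime.h>

#define TILE 256   /* threads per block = elements per tile (power of two) */

// Each thread block sums one TILE-sized piece of `in`.
__global__ void block_sum(const float *in, float *partial, int n)
{
    __shared__ float tile[TILE];          // on-chip shared memory

    int i = blockIdx.x * blockDim.x + threadIdx.x;

    // Load the sub-block from global memory into shared memory.
    tile[threadIdx.x] = (i < n) ? in[i] : 0.0f;
    __syncthreads();                      // wait until the tile is complete

    // Compute on shared memory: tree reduction, halving on each pass.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (threadIdx.x < stride)
            tile[threadIdx.x] += tile[threadIdx.x + stride];
        __syncthreads();
    }

    // Copy the block's result back to global memory.
    if (threadIdx.x == 0)
        partial[blockIdx.x] = tile[0];
}

// Hypothetical host helper: returns the sum of host_in[0..n-1], n > 0.
float sum_on_gpu(const float *host_in, int n)
{
    int blocks = (n + TILE - 1) / TILE;   // the input divided into tiles
    float *dev_in = NULL, *dev_partial = NULL;
    float *host_partial = (float *)malloc(blocks * sizeof(float));
    float total = 0.0f;

    cudaMalloc((void **)&dev_in, n * sizeof(float));
    cudaMalloc((void **)&dev_partial, blocks * sizeof(float));
    cudaMemcpy(dev_in, host_in, n * sizeof(float), cudaMemcpyHostToDevice);

    block_sum<<<blocks, TILE>>>(dev_in, dev_partial, n);

    cudaMemcpy(host_partial, dev_partial, blocks * sizeof(float),
               cudaMemcpyDeviceToHost);
    for (int b = 0; b < blocks; ++b)      // combine per-block sums on the CPU
        total += host_partial[b];

    cudaFree(dev_in);
    cudaFree(dev_partial);
    free(host_partial);
    return total;
}
```

Each input element is read from global memory exactly once; all further additions work on the copy in shared memory.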

Programming environment

CUDA includes runtime libraries:

- a common part that provides built-in vector types and a subset of the runtime library (RTL) calls supported on both the CPU and the GPU;

- a CPU component that controls one or more GPUs;

- a GPU component that provides GPU-specific functionality.

The main process of a CUDA application runs on a general-purpose processor (the host) and launches multiple copies of kernel processes on the video card. The CPU code does the following: it initializes the GPU, allocates memory on the video card and in the system, copies constants to video memory, launches several copies of the kernel processes on the video card, copies the result back from video memory, frees the memory, and exits.

As an example for understanding, here is the CPU code for vector addition presented in CUDA:
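A minimal sketch of such host code is given here. It is not the original listing: the names vector_add_kernel and vector_add are illustrative, and the block size of 256 threads is an assumption. Only CUDA runtime calls are used (cudaMalloc, cudaMemcpy, cudaFree).

```cuda
#include <cuda_runtime.h>

// Device code: each thread adds one pair of elements.
__global__ void vector_add_kernel(const float *a, const float *b,
                                  float *c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        c[i] = a[i] + b[i];
}

// Host code: computes c[i] = a[i] + b[i] on the GPU for n > 0 elements.
// Returns 0 on success, -1 on any CUDA error.
int vector_add(const float *a, const float *b, float *c, int n)
{
    size_t bytes = n * sizeof(float);
    float *d_a = NULL, *d_b = NULL, *d_c = NULL;

    // Allocate memory on the video card.
    int ok = cudaMalloc((void **)&d_a, bytes) == cudaSuccess
          && cudaMalloc((void **)&d_b, bytes) == cudaSuccess
          && cudaMalloc((void **)&d_c, bytes) == cudaSuccess;

    // Copy the input vectors to video memory.
    ok = ok
      && cudaMemcpy(d_a, a, bytes, cudaMemcpyHostToDevice) == cudaSuccess
      && cudaMemcpy(d_b, b, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        // Launch enough 256-thread blocks to cover all n elements.
        int threads = 256;
        int blocks = (n + threads - 1) / threads;
        vector_add_kernel<<<blocks, threads>>>(d_a, d_b, d_c, n);

        // Copy the result back; this call waits for the kernel to finish.
        ok = cudaMemcpy(c, d_c, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    // Free video memory (cudaFree does nothing for a NULL pointer).
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
    return ok ? 0 : -1;
}
```

GPU initialization is implicit in the runtime API: the first runtime call creates the context, so the sketch has no explicit initialization step.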

The functions executed by the video chip have the following limitations: no recursion, no static variables inside the functions, and no variable number of arguments. Two types of memory management are supported: linear memory accessed by 32-bit pointers, and CUDA arrays accessed only through texture fetching functions.

CUDA programs can interact with graphics APIs: to render data generated in the program, or to read rendering results and process them with CUDA (for example, when implementing post-processing filters). To do this, graphics API resources can be mapped into the CUDA global memory space, which yields the address of the resource. The supported graphics API resources are buffer objects (PBO/VBO) in OpenGL, and vertex buffers and textures (2D, 3D and cubemaps) in Direct3D 9.

CUDA application compilation steps:

CUDA C source code files are compiled using the NVCC program, which wraps other tools and calls them: cudacc, g++, cl, etc. NVCC generates: CPU code that is compiled along with the rest of the application written in pure C, and the PTX object code for the video chip. Executable files with CUDA code necessarily require the presence of the CUDA runtime library (cudart) and CUDA core library (cuda).

Optimization of programs on CUDA

Naturally, within the framework of a review article, it is impossible to consider serious optimization issues in CUDA programming. Therefore, we will just briefly talk about the basic things. To effectively use the capabilities of CUDA, you need to forget about the usual methods of writing programs for the CPU, and use those algorithms that are well parallelized for thousands of threads. It is also important to find the optimal place for storing data (registers, shared memory, etc.), minimize data transfer between the CPU and GPU, and use buffering.

In general terms, when optimizing a CUDA program you should look for the best balance between the size and the number of blocks. More threads per block reduce the impact of memory latency, but also reduce the number of registers available to each thread. In addition, a block of 512 threads is inefficient; Nvidia itself recommends blocks of 128 or 256 threads as a compromise between latency hiding and register count.

The main optimization points for CUDA programs are these. Use shared memory as actively as possible, since it is much faster than the video card's global memory. Coalesce reads and writes to global memory whenever possible; to do this, use the special data types that read and write 32, 64, or 128 bits of data in a single operation. If reads are difficult to coalesce, you can try texture fetches.
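As a sketch of the wide data types mentioned above, the kernel below copies an array through the built-in float4 type, so each thread moves 128 bits per load and per store. The names copy_float4 and copy_on_gpu are illustrative, and the helper assumes that n is a positive multiple of 4. Memory returned by cudaMalloc is aligned well enough for float4 access.

```cuda
#include <cuda_runtime.h>

// Copies n4 float4 values. Neighboring threads access neighboring
// 16-byte words, so the accesses of a warp can be coalesced.
__global__ void copy_float4(const float4 *in, float4 *out, int n4)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n4)
        out[i] = in[i];       // one 128-bit load and one 128-bit store
}

// Hypothetical host helper: copies n floats from src to dst via the GPU.
// n must be a positive multiple of 4. Returns 0 on success, -1 on error.
int copy_on_gpu(const float *src, float *dst, int n)
{
    size_t bytes = n * sizeof(float);
    int n4 = n / 4;           // number of float4 elements
    float4 *d_in = NULL, *d_out = NULL;

    int ok = cudaMalloc((void **)&d_in, bytes) == cudaSuccess
          && cudaMalloc((void **)&d_out, bytes) == cudaSuccess
          && cudaMemcpy(d_in, src, bytes,
                        cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        copy_float4<<<(n4 + 127) / 128, 128>>>(d_in, d_out, n4);
        ok = cudaMemcpy(dst, d_out, bytes,
                        cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    cudaFree(d_in);           // cudaFree does nothing for a NULL pointer
    cudaFree(d_out);
    return ok ? 0 : -1;
}
```

Whether the wide type actually helps depends on the chip and the access pattern, so it is worth measuring against a plain float version.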

Conclusions

CUDA, the hardware and software architecture that Nvidia has introduced for computing on video chips, is well suited to a wide range of highly parallel tasks. CUDA runs on a large number of Nvidia video chips and improves the GPU programming model by greatly simplifying it and adding many features, such as shared memory, thread synchronization, double-precision calculations, and integer operations.

CUDA is available to every software developer; any programmer who knows the C language can use it. You only have to get used to the different programming paradigm inherent in parallel computing. But if the algorithm parallelizes well in principle, the time spent studying and programming CUDA will pay off many times over.

It is likely that, given how widespread video cards are, the development of parallel computing on the GPU will greatly affect the high-performance computing industry. These possibilities have already aroused great interest in scientific circles, and not only there. After all, the chance to accelerate algorithms that lend themselves well to parallelization by dozens of times at once (and on affordable hardware, which is no less important) does not come along often.

General-purpose processors develop rather slowly and do not show such performance leaps. In fact, although it may sound like an overstatement, everyone who needs fast computers can now get an inexpensive personal supercomputer on their desk, sometimes without any additional investment, since Nvidia video cards are so widespread. Not to mention the efficiency gains in terms of GFLOPS/$ and GFLOPS/W that GPU manufacturers love so much.

The future of many computations clearly lies in parallel algorithms, and almost all new solutions and initiatives point in this direction. So far, however, the development of new paradigms is at an early stage: you have to create threads and schedule memory accesses manually, which makes the work harder than conventional programming. But CUDA has taken a step in the right direction, and it clearly looks like a successful solution, especially if Nvidia manages to convince as many developers as possible of its benefits and prospects.

But, of course, GPUs will not replace CPUs. In their current form, they are not designed for this. Just as video chips are gradually moving toward the CPU, becoming more and more universal (single- and double-precision floating-point calculations, integer calculations), so CPUs are becoming more and more “parallel”, acquiring a large number of cores and multithreading technologies, not to mention SIMD units and heterogeneous processor projects. Most likely, the GPU and the CPU will simply merge in the future. It is known that many companies, including Intel and AMD, are working on such projects. And it does not matter whether the GPU is absorbed by the CPU or vice versa.

In this article, we have mainly talked about the benefits of CUDA. But there is also a fly in the ointment. One of CUDA's few disadvantages is its poor portability. The architecture works only on Nvidia's video chips, and not on all of them: only on the GeForce 8 and 9 series and later, and the corresponding Quadro and Tesla models. Yes, there are a lot of such solutions in the world; Nvidia gives a figure of 90 million CUDA-compatible video chips. That is great, but competitors offer their own solutions that differ from CUDA: AMD has Stream Computing, and Intel will have Ct in the future.

Which of the technologies will win, become widespread, and outlive the rest, only time will tell. But CUDA has a good chance because, compared to Stream Computing, for example, it provides a more developed and easier-to-use programming environment in the regular C language. Perhaps a third party will help settle the matter by releasing a common solution. For example, in the next DirectX update, version 11, Microsoft has promised compute shaders, which could become a kind of middle-ground solution that suits everyone, or almost everyone.

Judging by the preliminary data, this new type of shader borrows a lot from the CUDA model. And by programming in this environment now, you can gain immediate benefits and the necessary skills for the future. From a high performance computing point of view, DirectX also has the distinct disadvantage of poor portability, as the API is limited to the Windows platform. However, another standard is being developed - the open multi-platform initiative OpenCL, which is supported by most companies, including Nvidia, AMD, Intel, IBM and many others.

In the next CUDA article, we will look at specific practical applications of scientific and other non-graphical computing implemented by developers around the world using Nvidia CUDA.

Let's go back in history to 2003, when Intel and AMD were racing each other to build the most powerful processor. In just a few years, clock speeds rose significantly as a result of this race, especially after the release of the Intel Pentium 4.

But the race was quickly approaching its limit. After a wave of huge clock speed increases (between 2001 and 2003, the Pentium 4 clock speed doubled from 1.5 to 3 GHz), users had to be content with the tenths of a gigahertz that manufacturers managed to squeeze out (from 2003 to 2005, clock speeds rose only from 3 to 3.8 GHz).

Even architectures optimized for high clock speeds, such as Prescott, began to run into difficulties, and this time not only manufacturing ones. Chip makers simply hit the laws of physics. Some analysts even predicted that Moore's law would cease to hold. But that did not happen. The original meaning of the law is often misrepresented: it refers to the number of transistors on the surface of a silicon die. For a long time, an increase in the number of transistors in the CPU was accompanied by a corresponding increase in performance, which led to the distortion of the meaning. But then the situation became more complicated. CPU architects ran into the law of diminishing returns: the number of transistors that had to be added for a given increase in performance kept growing, leading to a dead end.

While CPU makers were tearing their hair out trying to find a solution to their problems, GPU makers continued to reap the benefits of Moore's Law.

Why didn't they end up in the same dead end as the designers of the CPU architecture? The reason is very simple: CPUs are designed to get the best performance on a stream of instructions that process different data (both integers and floating point numbers), perform random memory access, and so on. Until now, developers have been trying to provide greater instruction parallelism - that is, to execute as many instructions as possible in parallel. So, for example, superscalar execution appeared with the Pentium, when under certain conditions it was possible to execute two instructions per clock. The Pentium Pro received out-of-order execution of instructions, which made it possible to optimize the performance of computing units. The problem is that the parallel execution of a sequential stream of instructions has obvious limitations, so blindly increasing the number of computing units does not give a gain, since most of the time they will still be idle.

In contrast, the work of the GPU is relatively simple. It consists of taking a group of polygons on one side and generating a group of pixels on the other. Polygons and pixels are independent of each other, so they can be processed in parallel. Thus, a GPU can devote a large part of the die to computing units that, unlike in a CPU, will actually be used.

This is not the only way the GPU differs from the CPU. Memory access in the GPU is highly coherent: if a texel is read, the adjacent texel will be read a few cycles later; when a pixel is written, the neighboring one will be written a few cycles later. By organizing memory intelligently, you can get performance close to the theoretical bandwidth. This means that the GPU, unlike the CPU, does not need a huge cache, since the cache's only role is to speed up texturing operations. All it takes is a few kilobytes holding the few texels used in bilinear and trilinear filters.

Long live GeForce FX!